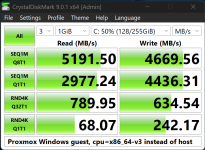

I've been setting up a new Windows 11 guest and struggling to debug poor I/O performance within it. Qualitatively, "real usage" I/O performance in the guest feels sluggish: apps launch slowly, and operations that are snappy on similarly spec'd non-virtualized Windows machines have noticeable latency. Quantitatively, I used CrystalDiskMark within the guest to get some basic measurements, and high queue depth random read performance (RND4K QD32T1) appears to be where the guest struggles in particular.

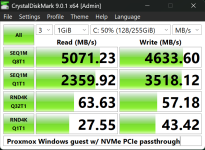

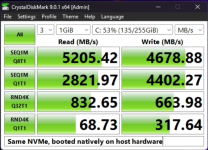

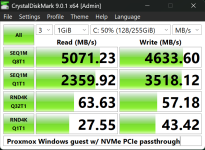

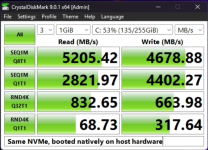

I've tried a number of different configurations now in an attempt to isolate the most relevant variable:

- different NVMe drives, replacing the Corsair P3 Plus QLC drive I'd initially repurposed with a Samsung 990 PRO, without significant improvement,

- VM images on ZFS vs LVM with both thin and thick provisioning, which made only slight differences, probably within measurement error,

- various guest disk settings other than the generally recommended VirtIO SCSI single w/ IO Thread, all of which performed worse.

Since at this point I had the Windows boot drive cloned over to a physical NVMe drive (the Corsair P3 Plus I'd had spare), the next test was to boot that same drive, as used within the Proxmox guest, natively on the host hardware. That test is the first one to show a significant difference on the RND4K QD32T1 test, and a massive difference at that: on reads, ~60-70 MB/s within the guest versus ~800 MB/s for the same Windows install booted natively on the same hardware. Sequential reads and writes show similar results between native and guest, no worse than I might expect from virtualization overhead, but random read and write performance is horrible within the guest.
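To put those RND4K QD32T1 read numbers in per-request terms, here is a rough sketch that converts throughput to IOPS and then, via Little's law (requests in flight = IOPS × latency), to an implied average latency per request. The assumptions are mine: 65 MB/s is just the midpoint of the ~60-70 MB/s guest range, and I'm assuming CrystalDiskMark's MB/s is decimal (1 MB = 1,000,000 bytes) with 4096-byte blocks.

```python
# Rough conversion of RND4K QD32T1 throughput into IOPS and implied latency.
# Assumptions (not measured): 1 MB = 1,000,000 bytes, block size = 4096 bytes,
# and the queue stays full at depth 32 for the whole run.

BLOCK_BYTES = 4096
QUEUE_DEPTH = 32


def iops(mb_per_s: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Requests completed per second at the given throughput."""
    return mb_per_s * 1_000_000 / block_bytes


def mean_latency_us(mb_per_s: float,
                    queue_depth: int = QUEUE_DEPTH,
                    block_bytes: int = BLOCK_BYTES) -> float:
    """Average time a request spends in flight, in microseconds.

    Little's law: queue_depth = iops * latency, so latency = queue_depth / iops.
    """
    return queue_depth / iops(mb_per_s, block_bytes) * 1_000_000


if __name__ == "__main__":
    # 65 MB/s is the midpoint of the guest range; 800 MB/s is the native result.
    for label, mbps in (("guest", 65.0), ("native", 800.0)):
        print(f"{label:>6}: {iops(mbps):>9.0f} IOPS, "
              f"~{mean_latency_us(mbps):.0f} us per request")
```

Under those assumptions the guest works out to roughly 2 ms per request against well under 0.2 ms natively, so whatever is going wrong looks like added per-request latency rather than a bandwidth cap.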

Note that this test was without VirtIO, ZFS/LVM, or any of that in the path to the drive; the entire NVMe drive is passed through and uses the default Windows NVMe driver. (That said, these guest results are about the same as previous tests using VirtIO SCSI with a raw image either in a ZFS zvol or an LVM LV on the same NVMe drive.) The Windows guest run was performed with all other guests stopped and with all 28 vCPUs available to the Windows guest. The native test was performed immediately after the guest test by simply shutting down the guest and Proxmox and then booting into the same drive natively. There were no changes between the runs other than what Windows might perform automatically on detecting hardware changes, but this was not the first time booting the drive natively, and it did not seem to make any changes in between these runs like it did on the first native boot.

This seems to imply that something about the virtualization itself is impacting the random read I/O performance of the Windows guest. I haven't seen this noted clearly as expected behavior anywhere else (though there are of course lots of scattered reports of poor I/O performance that often lack details). While I might expect some performance impact due to the inherent overhead of virtualization, this difference seems much too large to be that alone...right?

Is this at all expected? Any tips on what to try next? I'm stumped for how to diagnose this further. I'm just a prosumer homelab type user with flexible needs, but this is still significant enough that it may rule out virtualizing Windows completely for me. It's hard for me to believe that people would be happy with such a drastic I/O performance hit or that this is typical of VM performance in 2025.

Config for the Windows guest is below. The two unused disks are old clones from previous tests, hostpci0 is an Nvidia GTX 1080 GPU that is passed through to the guest (irrelevant as far as I know but noted for completeness), and hostpci1 is the NVMe boot drive. The physical CPU is a single Intel i7-14700K. The one remaining significant difference between guest and native is that the guest is configured with 24GiB of RAM while the native boot has the hardware's full 128GiB available, but it's hard to believe that's relevant when the guest has 24GiB and was booted shortly before this test.

```
❯ qm config 103
agent: 1
bios: ovmf
boot: order=hostpci1
cores: 28
cpu: host
efidisk0: local-zfs:vm-103-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M
hostpci0: 0000:01:00,pcie=1,x-vga=1
hostpci1: 0000:04:00,pcie=1
machine: pc-q35-9.2+pve1
memory: 24576
meta: creation-qemu=9.2.0,ctime=1750044503
name: WinVM
net0: e1000e=BC:24:11:73:12:E7,bridge=vmbr0
numa: 0
ostype: win11
scsihw: virtio-scsi-single
smbios1: uuid=1d966852-58ee-45dd-a184-08ca499493ae
sockets: 1
tpmstate0: local-zfs:vm-103-disk-2,size=4M,version=v2.0
unused0: local-zfs:vm-103-disk-3
unused1: local-lvm:vm-103-disk-0
vmgenid: c28ad20a-b7a4-49e8-9f7f-21aac8b41c78
```
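As a sanity check that nothing emulated sits in the disk path for this run, here is a small sketch that parses the `key: value` lines of the config above and lists any attached emulated disks next to the passed-through PCI devices. The helper names (`parse_qm_config`, `storage_summary`) are hypothetical, the sample is a trimmed copy of the config, and it assumes attached disks only ever appear under `scsiN`, `virtioN`, `sataN`, or `ideN` keys.

```python
import re

# Trimmed copy of the relevant lines from the `qm config 103` output above.
SAMPLE_CONFIG = """\
boot: order=hostpci1
efidisk0: local-zfs:vm-103-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M
hostpci0: 0000:01:00,pcie=1,x-vga=1
hostpci1: 0000:04:00,pcie=1
scsihw: virtio-scsi-single
unused0: local-zfs:vm-103-disk-3
unused1: local-lvm:vm-103-disk-0
"""

# Assumption: attached emulated disks use one of these key prefixes plus an index.
DISK_KEY = re.compile(r"^(scsi|virtio|sata|ide)\d+$")
PASSTHROUGH_KEY = re.compile(r"^hostpci\d+$")


def parse_qm_config(text: str) -> dict:
    """Split each 'key: value' line on the first colon; skip anything else."""
    config = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            config[key.strip()] = value.strip()
    return config


def storage_summary(config: dict) -> dict:
    """List attached emulated disks, passed-through devices, and boot order."""
    return {
        "emulated_disks": sorted(k for k in config if DISK_KEY.match(k)),
        "passthrough": sorted(k for k in config if PASSTHROUGH_KEY.match(k)),
        "boot": config.get("boot", ""),
    }


if __name__ == "__main__":
    print(storage_summary(parse_qm_config(SAMPLE_CONFIG)))
```

For this config the emulated disk list comes back empty, so `scsihw: virtio-scsi-single` is set but has no disk attached to it, and the guest boots only from `hostpci1`.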
