Gilberto Ferreira

Renowned Member

> with subscriptions now

I don't think so. At least I have installed this kernel 6.17 on a bunch of PVE 9 installations using the no-sub repo.

Well, somehow my licensed PVE enterprise servers are able to do this with the latest PVE 9:

apt install proxmox-kernel-6.17

Perhaps I've goofed up a repo somewhere.

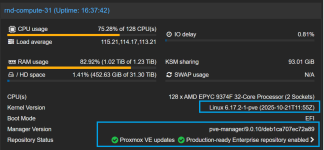

And I posted that I can do it without that step. Here's proof.

View attachment 92466

We recently uploaded the 6.17 kernel to our repositories. The current default kernel for the Proxmox VE 9 series is still 6.14, but 6.17 is now an option.

We plan to use the 6.17 kernel as the new default for the Proxmox VE 9.1 release later in Q4.

This follows our tradition of upgrading the Proxmox VE kernel to match the current Ubuntu version until we reach an Ubuntu LTS release, at which point we will only provide newer kernels as an opt-in option. The 6.17 kernel is based on the Ubuntu 25.10 Questing release.

We have run this kernel on some of our test setups over the last few days...

- t.lamprecht

- kernel 6.17 linux 6.17 opt-in kernel

- Replies: 78

- Forum: Proxmox VE: Installation and configuration

Very good.

And I posted that I can do it without that step. Here's proof.

View attachment 92469

> Any pending firmware/BIOS updates? Perhaps the disk firmware itself? I've had a couple of 'unique' errors caused by a bad mix of firmware and kernel versions.

The BIOS, the Dell BOSS-S1, and the drives themselves were running the latest firmware available from Dell. It's unlikely that anything new will be released in the future, as all of this hardware is already pretty old.

nvidia/nv-acpi.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

nvidia/nv-cray.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

CC [M] nvidia/nv-procfs.o

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-acpi.o] Error 1

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-cray.o] Error 1

nvidia/nv-dma.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-dma.o] Error 1

CC [M] nvidia/nv-usermap.o

CC [M] nvidia/nv-vm.o

CC [M] nvidia/nv-vtophys.o

nvidia/nv-mmap.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

nvidia/nv-p2p.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

CC [M] nvidia/os-interface.o

compilation terminated.

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-mmap.o] Error 1

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-p2p.o] Error 1

nvidia/nv-pat.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

CC [M] nvidia/os-mlock.o

nvidia/nv-procfs.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

CC [M] nvidia/os-pci.o

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-pat.o] Error 1

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia/nv-procfs.o] Error 1

CC [M] nvidia/os-registry.o

nvidia/nv-usermap.c:26:10: fatal error: os-interface.h: No such file or directory

26 | #include "os-interface.h"

| ^~~~~~~~~~~~~~~~

compilation terminated.

I am using vGPU unlock on an older Pascal-series card (NVIDIA GeForce GTX 1080). I updated all my rigs to 6.17.2-1 today, and the one with the vGPU unlock fails to build the DKMS module with every driver version I attempted to install. This was working on 6.14.x. Any assistance to rectify this would be appreciated.

I have tried the following drivers:

NVIDIA-Linux-x86_64-550.144.02-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-550.163.02-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-550.54.10-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-550.54.16-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-550.90.05-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-570.133.10-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-570.148.06-vgpu-kvm-custom.run

NVIDIA-Linux-x86_64-570.158.02-vgpu-kvm-custom.run

Portion of the nvidia installer log is as follows:

> Did you also make sure to install the optional 6.17 headers? (not exact pkg name)
>
> It seems to complain about that part a lot.

Indeed, I did. That was the first thing I double-checked. I spent the last 12 hours attempting to rectify this build issue with this kernel, without success.
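For anyone wanting to rule out the headers question quickly, here is a minimal sketch of such a check. It assumes the headers are reachable through `/lib/modules/<release>/build`, which is the path the NVIDIA installer itself uses as `SYSSRC` in the log. The function name `headers_present` and its optional root-prefix argument are made up for illustration. As the reply above says, the headers were installed in this case, so a passing check does not mean the build will succeed.

```shell
# Hypothetical helper (not part of any Proxmox or NVIDIA tool):
# succeed if the kernel headers for release $1 appear to be installed.
# $2 is an optional root prefix; it defaults to the real filesystem root and
# exists only so the check can be tried against a scratch directory.
headers_present() {
    kver=$1
    root=${2:-}
    # 'build' is normally a symlink into /usr/src; -d follows it, so a
    # dangling link (headers removed) counts as "not present".
    [ -d "$root/lib/modules/$kver/build" ]
}

# Usage on a node, as root:
#   headers_present "$(uname -r)" || apt install proxmox-headers-6.17
```

The package name in the usage comment is the one shown in the `apt install proxmox-headers-6.17` output later in this thread.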

> Those drivers are too old for this kernel, grab the 580.x one.

I attempted patching and installing the 580.65.05

CC [M] nvidia/linux_nvswitch.o

CC [M] nvidia/procfs_nvswitch.o

CC [M] nvidia/i2c_nvswitch.o

CC [M] nvidia-vgpu-vfio/nvidia-vgpu-vfio.o

CC [M] nvidia-vgpu-vfio/vgpu-vfio-mdev.o

CC [M] nvidia-vgpu-vfio/vgpu-devices.o

CC [M] nvidia-vgpu-vfio/vgpu-ctldev.o

CC [M] nvidia-vgpu-vfio/nv-pci-table.o

CC [M] nvidia-vgpu-vfio/vgpu-vfio-pci-core.o

CC [M] nvidia-vgpu-vfio/vgpu-egm.o

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c: In function 'gpu_instance_id_show':

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:375:17: warning: unused variable 'status' [-Wunused-variable]

375 | NV_STATUS status;

| ^~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c: At top level:

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:1941:6: warning: no previous prototype for 'nv_update_config_space' [-Wmissing-prototypes]

1941 | void nv_update_config_space(vgpu_dev_t *vgpu_dev, NvU64 offset,

| ^~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:1969:5: warning: no previous prototype for 'update_pci_config_bars_cache' [-Wmissing-prototypes]

1969 | int update_pci_config_bars_cache(vgpu_dev_t *vgpu_dev)

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:2602:18: warning: no previous prototype for 'find_bar1_node_parent' [-Wmissing-prototypes]

2602 | struct rb_node **find_bar1_node_parent(struct rb_node **parent, NvU64 guest_addr,

| ^~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c: In function 'vgpu_msix_set_vector_signal':

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3421:50: error: 'struct irq_bypass_producer' has no member named 'token'

3421 | vgpu_dev->intr_info.msix[vector].producer.token = NULL;

| ^

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3443:46: error: 'struct irq_bypass_producer' has no member named 'token'

3443 | vgpu_dev->intr_info.msix[vector].producer.token = trigger;

| ^

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3449:15: error: too few arguments to function 'irq_bypass_register_producer'

3449 | ret = irq_bypass_register_producer(&vgpu_dev->intr_info.msix[vector].producer);

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~

In file included from /usr/src/linux-headers-6.17.2-1-pve/include/linux/kvm_host.h:27,

from nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:26:

/usr/src/linux-headers-6.17.2-1-pve/include/linux/irqbypass.h:86:5: note: declared here

86 | int irq_bypass_register_producer(struct irq_bypass_producer *producer,

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3454:54: error: 'struct irq_bypass_producer' has no member named 'token'

3454 | vgpu_dev->intr_info.msix[vector].producer.token = NULL;

| ^

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c: At top level:

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:4223:7: warning: no previous prototype for 'get_drm_format' [-Wmissing-prototypes]

4223 | NvU32 get_drm_format(uint32_t bpp)

| ^~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:4245:5: warning: no previous prototype for 'nv_vgpu_vfio_get_gfx_plane_info' [-Wmissing-prototypes]

4245 | int nv_vgpu_vfio_get_gfx_plane_info(vgpu_dev_t *vgpu_dev, struct vfio_device_gfx_plane_info *gfx_plane_info)

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/vgpu-vfio-mdev.c:121:5: warning: no previous prototype for 'nv_vgpu_vfio_mdev_destroy' [-Wmissing-prototypes]

121 | int nv_vgpu_vfio_mdev_destroy(vgpu_dev_t *vgpu_dev)

| ^~~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/vgpu-vfio-mdev.c:138:5: warning: no previous prototype for 'nv_vgpu_vfio_mdev_create' [-Wmissing-prototypes]

138 | int nv_vgpu_vfio_mdev_create(vgpu_dev_t *vgpu_dev)

| ^~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/vgpu-vfio-mdev.c:561:6: warning: no previous prototype for 'nv_vfio_mdev_set_mig_ops' [-Wmissing-prototypes]

561 | void nv_vfio_mdev_set_mig_ops(struct vfio_device *core_vdev, struct pci_dev *pdev)

| ^~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:4554:6: warning: no previous prototype for 'vgpu_del_phys_mapping' [-Wmissing-prototypes]

4554 | void vgpu_del_phys_mapping(vgpu_dev_t *vgpu_dev, unsigned long offset)

| ^~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:4645:6: warning: no previous prototype for 'vgpu_mmio_close' [-Wmissing-prototypes]

4645 | void vgpu_mmio_close(struct vm_area_struct *vma)

| ^~~~~~~~~~~~~~~

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c: In function 'nv_vgpu_rm_probe':

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:5876:9: warning: unused variable 'ret' [-Wunused-variable]

5876 | int ret;

| ^~~

make[4]: *** [/usr/src/linux-headers-6.17.2-1-pve/scripts/Makefile.build:287: nvidia-vgpu-vfio/nvidia-vgpu-vfio.o] Error 1

nvidia-vgpu-vfio/vgpu-devices.c: In function 'nv_vfio_vgpu_vf_reg_access_hw':

nvidia-vgpu-vfio/vgpu-devices.c:938:18: warning: the comparison will always evaluate as 'true' for the address of 'data' will never be NULL [-Waddress]

938 | if (!pdev || !pParams->data)

| ^

In file included from nvidia-vgpu-vfio/vgpu-devices.h:26,

from nvidia-vgpu-vfio/vgpu-devices.c:29:

nvidia-vgpu-vfio/nv-vgpu-ioctl.h:778:10: note: 'data' declared here

778 | NvU8 data[4];

| ^~~~

nvidia-vgpu-vfio/vgpu-vfio-pci-core.c:263:5: warning: no previous prototype for 'nv_vfio_pci_get_device_state' [-Wmissing-prototypes]

263 | int nv_vfio_pci_get_device_state(struct vfio_device *core_vdev,

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/vgpu-vfio-pci-core.c:457:6: warning: no previous prototype for 'nv_vfio_pci_set_mig_ops' [-Wmissing-prototypes]

457 | void nv_vfio_pci_set_mig_ops(struct vfio_device *core_vdev)

| ^~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/vgpu-vfio-pci-core.c:473:5: warning: no previous prototype for 'nv_vfio_pci_core_init_dev' [-Wmissing-prototypes]

473 | int nv_vfio_pci_core_init_dev(struct vfio_device *core_vdev)

| ^~~~~~~~~~~~~~~~~~~~~~~~~

nvidia-vgpu-vfio/vgpu-vfio-pci-core.c:495:6: warning: no previous prototype for 'nv_vfio_pci_core_release_dev' [-Wmissing-prototypes]

nvidia-vgpu-vfio/vgpu-ctldev.c: In function 'nv_vgpu_ctldev_ioctl':

nvidia-vgpu-vfio/vgpu-ctldev.c:185:1: warning: the frame size of 1648 bytes is larger than 1024 bytes [-Wframe-larger-than=]

185 | }

| ^

ld -r -o nvidia/nv-interface.o nvidia/nv-platform.o nvidia/nv-dsi-parse-panel-props.o nvidia/nv-bpmp.o nvidia/nv-gpio.o nvidia/nv-backlight.o nvidia/nv-imp.o nvidia/nv-platform-pm.o nvidia/nv-ipc-soc.o nvi>

bspdm_shash.o nvidia/libspdm_rsa.o nvidia/libspdm_aead_aes_gcm.o nvidia/libspdm_sha.o nvidia/libspdm_hmac_sha.o nvidia/libspdm_internal_crypt_lib.o nvidia/libspdm_hkdf_sha.o nvidia/libspdm_ec.o nvidia/libs>

LD [M] nvidia.o

nvidia-vgpu-vfio/vgpu-devices.c: In function 'nv_vfio_vgpu_get_attach_device':

nvidia-vgpu-vfio/vgpu-devices.c:799:1: warning: the frame size of 1032 bytes is larger than 1024 bytes [-Wframe-larger-than=]

799 | }

| ^

nvidia-vgpu-vfio/vgpu-devices.c: In function 'nv_vgpu_dev_ioctl':

nvidia-vgpu-vfio/vgpu-devices.c:391:1: warning: the frame size of 1136 bytes is larger than 1024 bytes [-Wframe-larger-than=]

391 | }

| ^

make[4]: Target './' not remade because of errors.

make[3]: *** [/usr/src/linux-headers-6.17.2-1-pve/Makefile:2016: .] Error 2

make[3]: Target 'modules' not remade because of errors.

make[2]: *** [/usr/src/linux-headers-6.17.2-1-pve/Makefile:248: __sub-make] Error 2

make[2]: Target 'modules' not remade because of errors.

make[2]: Leaving directory '/tmp/makeself.pd2uQKZP/NVIDIA-Linux-x86_64-580.65.05-vgpu-kvm-custom/kernel'

make[1]: *** [Makefile:248: __sub-make] Error 2

make[1]: Target 'modules' not remade because of errors.

make[1]: Leaving directory '/usr/src/linux-headers-6.17.2-1-pve'

make: *** [Makefile:138: modules] Error 2

-> Error.

ERROR: An error occurred while performing the step: "Building kernel modules". See /var/log/nvidia-installer.log for details.

-> The command `cd kernel; /usr/bin/make -k -j16 NV_EXCLUDE_KERNEL_MODULES="" SYSSRC="/lib/modules/6.17.2-1-pve/build" SYSOUT="/lib/modules/6.17.2-1-pve/build" ` failed with the following output:

make[1]: Entering directory '/usr/src/linux-headers-6.17.2-1-pve'

make[2]: Entering directory '/tmp/makeself.pd2uQKZP/NVIDIA-Linux-x86_64-580.65.05-vgpu-kvm-custom/kernel'

SYMLINK nvidia/nv-kernel.o

CONFTEST: set_pages_uc

CONFTEST: list_is_first

I attempted patching and installing the 580.65.05

The DKMS install still fails when the build is close to 100%.
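Since the real errors are buried among dozens of warnings, a short sketch for pulling only the compiler errors out of the installer log may help. The function name `summarize_build_errors` is made up for illustration; it uses only `grep`, `sed`, `sort` and `uniq`. Run against the logs in this thread, it would be expected to surface two groups: the `os-interface.h: No such file or directory` failures from the 550/570 attempts, and the `irq_bypass_producer` / `irq_bypass_register_producer` errors from 580.x. The second group suggests the driver source was written against an older irqbypass interface than the one declared in the 6.17 headers.

```shell
# Hypothetical helper: list the distinct compiler errors in a build log,
# most frequent first. Warnings, notes and make's own "Error N" lines are
# ignored because they do not contain ": error: " or ": fatal error: ".
summarize_build_errors() {
    grep -E ': (fatal )?error: ' "$1" \
        | sed -E 's/^.*: (fatal )?error: //' \
        | sort | uniq -c | sort -rn
}

# Usage:
#   summarize_build_errors /var/log/nvidia-installer.log
```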

root@pve-bdr:~# apt install proxmox-headers-6.17

The following packages were automatically installed and are no longer required:

proxmox-headers-6.14.11-2-pve proxmox-headers-6.14.11-3-pve proxmox-kernel-6.14.11-1-pve-signed

Use 'apt autoremove' to remove them.

Installing:

proxmox-headers-6.17

Installing dependencies:

proxmox-headers-6.17.1-1-pve

Summary:

Upgrading: 0, Installing: 2, Removing: 0, Not Upgrading: 0

Download size...

nvidia-installer log file '/var/log/nvidia-installer.log'

creation time: Thu Nov 13 18:37:06 2025

installer version: 580.105.06

nvidia-installer command line:

./nvidia-installer

--dkms

-m=kernel

Using: nvidia-installer ncurses v6 user interface

WARNING: The 'NVIDIA Proprietary' kernel modules are incompatible with the GPU(s) detected on this system. They will be installed anyway, b>

-> Detected 16 CPUs online; setting concurrency level to 16.

-> Scanning the initramfs with lsinitramfs...

-> Executing: /usr/bin/lsinitramfs -l /boot/initrd.img-6.17.2-1-pve

-> Installing NVIDIA driver version 580.105.06.

....

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c: In function 'vgpu_msix_set_vector_signal':

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3421:50: error: 'struct irq_bypass_producer' has no member named 'token'

3421 | vgpu_dev->intr_info.msix[vector].producer.token = NULL;

| ^

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3443:46: error: 'struct irq_bypass_producer' has no member named 'token'

3443 | vgpu_dev->intr_info.msix[vector].producer.token = trigger;

| ^

nvidia-vgpu-vfio/nvidia-vgpu-vfio.c:3449:15: error: too few arguments to function 'irq_bypass_register_producer'

3449 | ret = irq_bypass_register_producer(&vgpu_dev->intr_info.msix[vector].producer);

We recently uploaded the 6.17 kernel to our repositories. The current default kernel for the Proxmox VE 9 series is still 6.14, but 6.17 is now an option.

We plan to use the 6.17 kernel as the new default for the Proxmox VE 9.1 release later in Q4.

This follows our tradition of upgrading the Proxmox VE kernel to match the current Ubuntu version until we reach an Ubuntu LTS release, at which point we will only provide newer kernels as an opt-in option. The 6.17 kernel is based on the Ubuntu 25.10 Questing release.

We have run this kernel on some of our test setups over the last few days without encountering any significant issues. However, for production setups, we strongly recommend either using the 6.14-based kernel or testing on similar hardware/setups before upgrading any production nodes to 6.17.

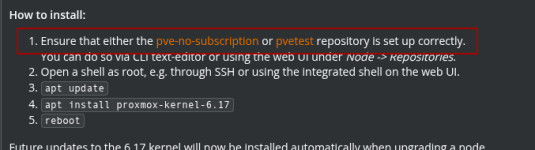

How to install:

- Ensure that either the pve-no-subscription or pvetest repository is set up correctly.

You can do so via CLI text-editor or using the web UI under Node -> Repositories.

- Open a shell as root, e.g. through SSH or using the integrated shell on the web UI.

- apt update

- apt install proxmox-kernel-6.17

- reboot

Future updates to the 6.17 kernel will now be installed automatically when upgrading a node.
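The steps above can be sketched as a small shell helper that only runs the install when the node is not already on the 6.17 series. This is a sketch, not an official procedure: the function names `kernel_series` and `needs_opt_in` are made up for illustration, and the install commands themselves are the ones from the announcement, left as comments so nothing is installed by accident.

```shell
# Hypothetical helpers (illustrative names, not part of any Proxmox tool).

# Print the major.minor series of a kernel release string,
# e.g. "6.17.2-1-pve" becomes "6.17".
kernel_series() {
    printf '%s\n' "$1" | cut -d. -f1,2
}

# Succeed (exit 0) if release $1 is NOT yet on the wanted series $2.
needs_opt_in() {
    [ "$(kernel_series "$1")" != "$2" ]
}

# Usage on a node, as root (commands taken from the steps above):
#   if needs_opt_in "$(uname -r)" 6.17; then
#       apt update
#       apt install proxmox-kernel-6.17
#       reboot
#   fi
```

After the reboot, `uname -r` should report a 6.17 release such as the `6.17.2-1-pve` seen in the logs in this thread.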

Please note:

- The current 6.14 kernel is still supported, and will stay the default kernel until further notice.

- There were many changes, for improved hardware support and performance improvements all over the place.

For a good overview of prominent changes, we recommend checking out the kernel-newbies site for 6.15, 6.16, and 6.17.

- The kernel is also available on the test and no-subscription repositories of Proxmox Backup Server, Proxmox Mail Gateway, and in the test repo of Proxmox Datacenter Manager.

- The new 6.17 based opt-in kernel will not be made available for the previous Proxmox VE 8 release series.

- If you're unsure, we recommend continuing to use the 6.14-based kernel for now.

Feedback about how the new kernel performs in any of your setups is welcome!

Please provide basic details like CPU model, storage types used, ZFS as root file system, and the like, whether your feedback is positive or you ran into issues where the opt-in 6.17 kernel seems to be the likely cause.
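A minimal sketch for collecting some of the requested details in one go. The function name `collect_report` and the output labels are made up for illustration. It assumes `lscpu` prints an English `Model name:` line and that `findmnt` is available, and it skips either tool if it is missing. Storage types still need to be described by hand.

```shell
# Hypothetical helper: print a few basic details for a feedback post.
collect_report() {
    # Running kernel release, e.g. 6.17.2-1-pve
    echo "kernel: $(uname -r)"
    # CPU model, if lscpu is installed (assumes English output labels)
    if command -v lscpu >/dev/null 2>&1; then
        echo "cpu: $(lscpu | sed -n 's/^Model name:[[:space:]]*//p' | head -n 1)"
    fi
    # File system type of /, which shows "zfs" for ZFS-on-root setups
    if command -v findmnt >/dev/null 2>&1; then
        echo "root fs: $(findmnt -n -o FSTYPE /)"
    fi
}

# Usage:
#   collect_report
```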

So far so good: the Intel Arc Pro B50 is now working great with SR-IOV, currently with 6 virtual functions.

AMD: 5950X

VM(s): 12 total, mostly Linux but a few Windows VMs (thin clients)

ZFS filesystem with 3 different pools, including boot.

> Hi there.
>
> I'm trying to use SR-IOV (Arc B50) with an Ubuntu VM in Proxmox. However, when I pass through the mapped B50 device, I cannot get the terminal or certain other apps to run on the Ubuntu VM.
>
> Any ideas how to resolve this?
>
> I (think I) need to enable the B50 driver instead of the virtual driver that the Ubuntu VM is using inside of Proxmox.
>
> I also have a Win 11 VM successfully using the B50 in SR-IOV mode.
>
> Thanks.

I'm not really sure, to be honest. All of the VMs I have that are utilizing the B50 are Windows VMs. I would start by installing the newest Ubuntu drivers from Intel and see where that gets me.
