Hi everybody,

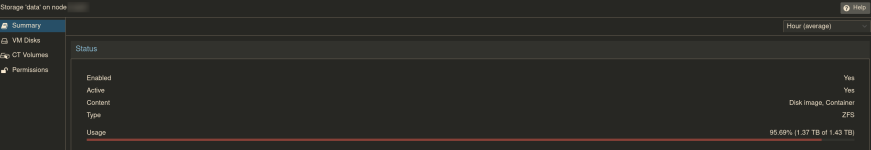

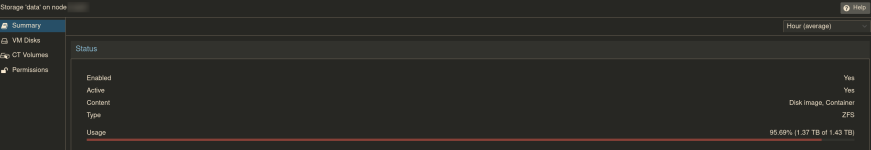

I have a ZFS pool that is filling up, but I can't understand why.

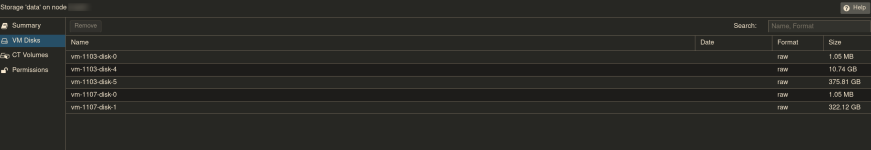

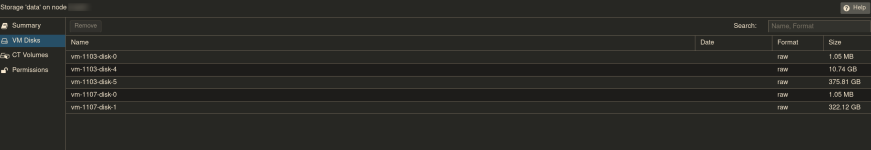

However, the sum of all VM disks doesn't match the used space shown above.

Only vm-1107 has snapshots.



```
# zpool list rpoolData
NAME        SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
rpoolData  2.27T  1.34T   945G        -         -    31%    59%  1.00x    ONLINE  -
```

```
# zfs list rpoolData
NAME        USED  AVAIL  REFER  MOUNTPOINT
rpoolData  1.24T  57.3G   170K  /rpoolData
```

```
# zfs get used,usedbydataset,usedbysnapshots rpoolData/vm-1107-disk-0
NAME                      PROPERTY         VALUE  SOURCE
rpoolData/vm-1107-disk-0  used             4.13M  -
rpoolData/vm-1107-disk-0  usedbydataset    817K   -
rpoolData/vm-1107-disk-0  usedbysnapshots  0B     -

# zfs get used,usedbydataset,usedbysnapshots rpoolData/vm-1107-disk-1
NAME                      PROPERTY         VALUE  SOURCE
rpoolData/vm-1107-disk-1  used             787G   -
rpoolData/vm-1107-disk-1  usedbydataset    383G   -
rpoolData/vm-1107-disk-1  usedbysnapshots  0B     -
```

The pool/dataset is used only for VMs, and no ZFS directory is configured on it. I can't figure out what's eating up all the space.
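For reference, the zfsprops(7) man page documents that a dataset's `used` equals `usedbydataset + usedbysnapshots + usedbychildren + usedbyrefreservation`. So for vm-1107-disk-1, the gap between `used` (787G) and `usedbydataset` (383G), with 0B in snapshots, should appear in one of the two properties not queried above. Below is a minimal, untested sketch that parses the output of a recursive `zfs get -Hp` over the pool and prints that breakdown per dataset. The helper names `parse`, `unexplained` and `report` are hypothetical, and the exact command line in the comment is an assumption to adapt to your setup.

```python
"""Break ZFS 'used' down into its usedby* components (sketch).

Assumed input: the text printed by something like
    zfs get -Hp -r -t filesystem,volume -o name,property,value \
        used,usedbydataset,usedbysnapshots,usedbychildren,usedbyrefreservation rpoolData
(-H: tab-separated, no header; -p: exact byte values).
"""
from collections import defaultdict

# Per zfsprops(7): used = sum of these four properties.
COMPONENTS = ("usedbydataset", "usedbysnapshots", "usedbychildren", "usedbyrefreservation")


def parse(text: str) -> dict[str, dict[str, int]]:
    """Map each dataset name to {property: bytes}."""
    props: dict[str, dict[str, int]] = defaultdict(dict)
    for line in text.strip().splitlines():
        name, prop, value = line.split("\t")
        # '-' means the property does not apply; count it as 0 bytes.
        props[name][prop] = 0 if value == "-" else int(value)
    return dict(props)


def unexplained(p: dict[str, int]) -> int:
    """Bytes of 'used' not covered by the four components (expected 0)."""
    return p.get("used", 0) - sum(p.get(c, 0) for c in COMPONENTS)


def report(props: dict[str, dict[str, int]]) -> None:
    """Print used and its components in GiB for every dataset."""
    gib = 1024 ** 3
    for name, p in sorted(props.items()):
        parts = ", ".join(f"{c}={p.get(c, 0) / gib:.1f}G" for c in COMPONENTS)
        print(f"{name}: used={p.get('used', 0) / gib:.1f}G ({parts})")
```

Usage, assuming the command output was saved to a file named `usage.txt` (hypothetical): `report(parse(open("usage.txt").read()))`. The component with the largest value for each disk shows where its `used` space is going.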

Please kindly advise.

Thanks