Hi everyone,

I’m working in a test lab environment with a 3-node Proxmox cluster (pve1, pve2, pve3).

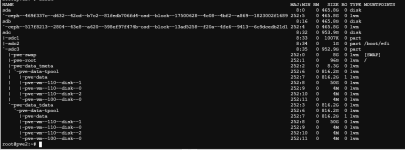

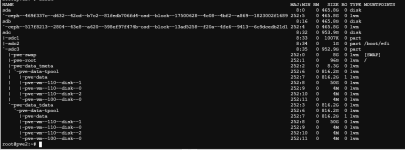

Each node has 2 local disks, except pve2, which has 3.

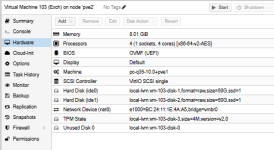

I had a local Exchange Server VM (ID 103, named “exch”) running on pve2.

The VM was fully installed (Windows + Exchange) and I was connected to the Exchange Admin Center when the issue happened.

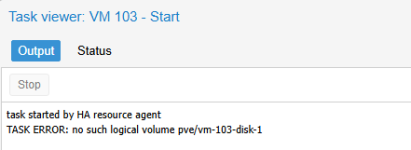

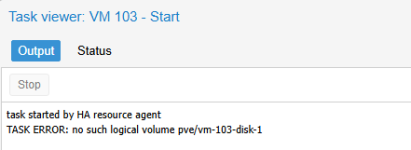

I briefly left the environment running, and when I came back:

- Two of my nodes (pve2 and pve3) had a red cross in the Proxmox web UI (most likely a temporary network issue).

- After checking the hosts, I managed to bring all nodes back online and visible again in the cluster.

After the cluster recovered:

- Both VM 103 (exch) and VM 110 (AD) were no longer responding.

- I rebooted both:

- AD (VM 110) came back fine.

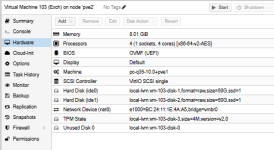

- Exchange (VM 103) failed to boot: the disk seems to be missing.

What I Checked

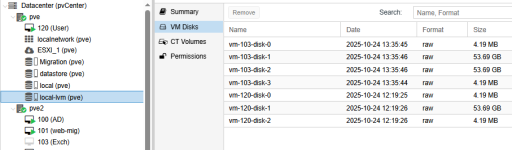

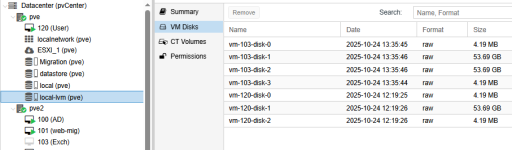

- Both VMs were originally created on pve2, using local LVM-Thin storage.

- They had been migrated between nodes before (via the ProxLB VM balancing tool).

- When running `lsblk` on all nodes, I don’t see the Exchange VM’s disk anywhere.

- In the web GUI, the disk appears as missing (gray/unavailable).

- Removing the EFI disk reference didn’t change anything.

The only thing I see is this in the GUI.

What I’d Like to Know

- Is there any way to recover the missing VM disk (or data) from pve2?

- Or at least, how can I understand what happened? Did Proxmox unmap or lose the LVM-thin volume after the network disruption?

- Any command or log I could check to confirm whether the LV still exists but is detached or corrupt?
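
For reference, here is a rough sketch of the check I am planning to run on each node; corrections welcome. As far as I understand, `lsblk` only lists activated volumes, so an inactive LV would not show up there, while `lvs -a` should still list it. The helper name `find_vm_lvs` is my own, and I am assuming the disk follows the usual `vm-<vmid>-disk-<n>` naming.

```shell
#!/bin/sh
# Sketch only: look for logical volumes that belong to a given VM ID.
# Assumption: the VM disks are named vm-<vmid>-disk-<n> (e.g. vm-103-disk-0).

# find_vm_lvs is a hypothetical helper, not a Proxmox command.
# $1 = VM ID; stdin = `lvs` output with columns: lv_name vg_name lv_attr
find_vm_lvs() {
    awk -v pat="^vm-$1-" '$1 ~ pat { print $2 "/" $1 " attr=" $3 }'
}

# Only query LVM if the tool is present on this machine (run as root on each node).
if command -v lvs >/dev/null 2>&1; then
    # -a also lists inactive and hidden volumes; --noheadings drops the header row.
    lvs --noheadings -a -o lv_name,vg_name,lv_attr 2>/dev/null | find_vm_lvs 103
fi
```

If this prints nothing on any of the three nodes, I would take that as a sign the LV is really gone rather than just deactivated. If a line does show up, the `attr` column should indicate whether the volume is currently active.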

Thanks in advance