We are experiencing an issue similar to the one described in that post; however, the proposed solution did not resolve our case. Can someone help?

Summary:

When reinstalling Proxmox VE (PVE) 9 with ZFS on a disk that has been wiped using wipefs or a quick zero operation, the installer displays a warning about an existing ZFS pool named 'rpool', despite the disk being wiped. This warning does not appear in PVE 8 under similar conditions or when reinstalling PVE 9 without wiping the disk.

Environment:

Proxmox VE Version: 9.0

Filesystem: ZFS

Hardware: Various (issue observed across multiple systems with different disk configurations)

Disk Wipe Tools: wipefs command or quick zero (e.g., dd zeroing the first few MB of the disk)

Steps to Reproduce:

- Perform a fresh installation of PVE 9 with ZFS on a disk.

- Wipe the disk using either the wipefs command or a quick zero (e.g., dd zeroing the first few MB of the disk).

- Reinstall PVE 9 with ZFS on the same disk.

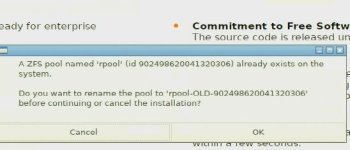

- Installer warning (attached screenshot): "A ZFS pool named 'rpool' (id xxxxxxxx) already exists on the system. Do you want to rename the pool to 'pool-OLD-xxxxxxxx' before continuing or cancel the installation?"

- Click "OK" to proceed with the installation.

Expected Behavior

After wiping the disk with wipefs or a quick zero, the PVE 9 installer should not detect any residual ZFS pool metadata, as the disk is expected to be clean.

The installation should proceed without prompting about an existing 'rpool'.
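
One thing that may be worth checking is whether a quick zero actually reaches the ZFS labels. ZFS keeps four 256 KiB labels per vdev: two at the start and two at the end. The sketch below is only an arithmetic illustration of that layout, not a confirmed explanation of the installer behavior, and it does not by itself account for the differences between PVE 8 and PVE 9 listed under Additional Tests. The disk size, partition start, and zeroed length are hypothetical values, and the function names are made up for illustration.

```python
LABEL_SIZE = 256 * 1024  # each ZFS vdev label is 256 KiB
MiB = 1024 ** 2
GiB = 1024 ** 3


def zfs_label_offsets(vdev_start, vdev_size):
    """Absolute byte offsets of the four labels of a vdev.

    vdev_start is where the vdev (whole disk or partition) begins on
    the disk; vdev_size is its length in bytes.
    """
    usable = vdev_size - vdev_size % LABEL_SIZE  # labels are 256 KiB aligned
    return [
        vdev_start,                            # L0
        vdev_start + LABEL_SIZE,               # L1
        vdev_start + usable - 2 * LABEL_SIZE,  # L2
        vdev_start + usable - LABEL_SIZE,      # L3
    ]


def surviving_labels(vdev_start, vdev_size, zeroed_bytes):
    """Indices of labels NOT fully overwritten when only the first
    zeroed_bytes of the disk are zeroed."""
    offsets = zfs_label_offsets(vdev_start, vdev_size)
    return [i for i, off in enumerate(offsets) if off + LABEL_SIZE > zeroed_bytes]


# Hypothetical case 1: ZFS on the whole disk, first 16 MiB zeroed.
print(surviving_labels(0, 100 * GiB, 16 * MiB))

# Hypothetical case 2: ZFS on a partition that starts 1 GiB into the disk.
print(surviving_labels(1 * GiB, 99 * GiB, 16 * MiB))
```

If the pool sits on a partition rather than on the whole disk, a wipe applied only to the start of the disk may leave every label untouched, so comparing the partition layout against the wiped range could help narrow this down.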

Actual Behavior

The PVE 9 installer detects an existing ZFS pool named 'rpool' and displays the warning message.

Clicking "OK" renames the detected pool to 'pool-OLD-xxxxxxxx', and the installation completes successfully.

The installed PVE 9 system appears to function normally after the rename.

Additional Tests

PVE 9 → Wipe → PVE 8: No warning about existing ZFS pool.

PVE 8 → Wipe → PVE 9: No warning about existing ZFS pool.

PVE 8 → Wipe → PVE 8: No warning about existing ZFS pool.

PVE 9 → Reinstall PVE 9 (no wipe): No warning about existing ZFS pool.

These tests suggest the issue is specific to PVE 9’s installer when reinstalling on a wiped disk previously used for PVE 9 with ZFS.