Hello everyone,

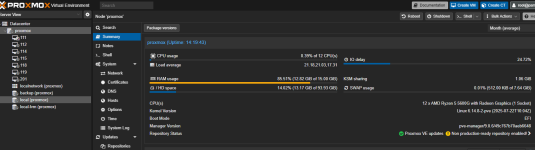

I recently updated to Proxmox VE 9 (kernel 6.14.8-2-pve) on an Intel N100 system. Since then, writing to an NFSv4.2 share mounted from a NAS server causes a massive increase in IO delay, and eventually the entire system freezes and requires a manual reboot.

Details:

- The NFS server is working fine and the network is not saturated.

- Other servers mounting the same NFS share don’t experience issues.

- The mount uses default NFSv4.2 options with large rsize and wsize.

- Tried different mount options (including NFSv3 and smaller sizes) with no success.

- The problem only occurs on Proxmox 9 running kernel 6.14.8.

- SMB mounts work fine without freezes.

- Reinstalling the nfs-common package made no difference.

- The system has no ECC memory, and there are no visible hardware issues.
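
To make the mount options easier to compare between the affected host and the servers that work fine, the effective options can be read from `/proc/mounts`. This is only a minimal sketch: the helper name `nfs_mounts` is hypothetical, and it assumes a Linux host where NFS mounts show up with the filesystem type `nfs` or `nfs4`.

```python
from pathlib import Path


def nfs_mounts(mounts_text):
    """Return (mount point, fs type, options dict) for each NFS entry.

    `mounts_text` is expected to be in /proc/mounts format. The function
    name is hypothetical and only used for this illustration.
    """
    result = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts fields: device, mount point, fs type, options, dump, pass
        if len(fields) < 4 or fields[2] not in ("nfs", "nfs4"):
            continue
        options = {}
        for opt in fields[3].split(","):
            # Flags such as "rw" have no value and are stored as "".
            key, _, value = opt.partition("=")
            options[key] = value
        result.append((fields[1], fields[2], options))
    return result


if __name__ == "__main__":
    proc_mounts = Path("/proc/mounts")
    if proc_mounts.exists():
        for mount_point, fs_type, options in nfs_mounts(proc_mounts.read_text()):
            # Print only the options relevant to this report.
            picked = {k: options.get(k) for k in ("vers", "rsize", "wsize", "proto")}
            print(mount_point, fs_type, picked)
```

Running it on both the Proxmox 9 host and one of the unaffected servers should show whether the negotiated `vers`, `rsize`, and `wsize` values actually differ.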

Thanks in advance.