Hi,

I upgraded one of my Proxmox systems from version 8 to 9. The whole process went fine until the end, where I got this message:

Couldn't find EFI system partition. It is recommended to mount it to /boot or /efi.

I didn't think this would really cause an issue, but apparently it does: my system won't boot from the M.2 SSD where the OS is installed. It almost seems as if the bootloader isn't there anymore. Has anyone had the same issue and been able to fix it?

This is the second system I upgraded; the first one went very smoothly with no issues. I'm really not sure what caused this. No VMs were running and every check was green when I ran pve8to9.

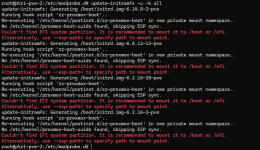

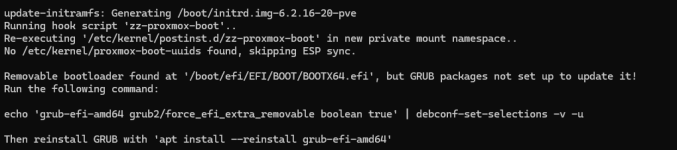

These are the last lines of the output from the upgrade

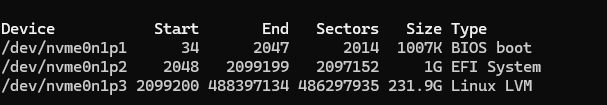

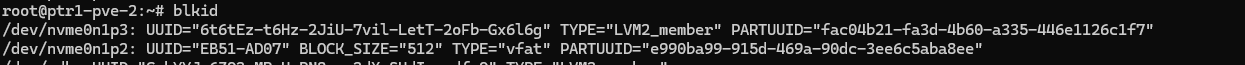

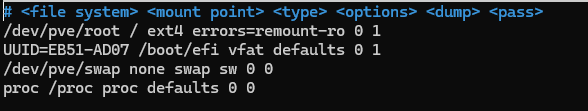

I booted Rescuezilla and used fdisk to check whether the NVMe drive where Proxmox is installed was somehow missing the boot partition or boot flag, but both are still there. What is really weird is that GRUB does not even complain; it is as if there is nothing on the NVMe drive anymore. Anyway, I hope someone can help or has an idea of what happened.
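For anyone wanting to repeat the partition check without reading fdisk output by eye, here is a minimal sketch. It assumes a GPT disk and `lsblk` from util-linux; the function name `list_esp_partitions` and the device `/dev/nvme0n1` are only illustrative, not taken from the affected system. It filters partitions by the standard EFI system partition type GUID.

```shell
#!/bin/sh
# GPT partition type GUID that marks an EFI system partition (ESP).
ESP_GUID="c12a7328-f81f-11d2-ba4b-00a0c93ec93b"

# Hypothetical helper: reads "NAME PARTTYPE" lines on stdin (the format
# produced by `lsblk -rno NAME,PARTTYPE`) and prints the names of the
# partitions whose type GUID is the ESP one.
list_esp_partitions() {
    while read -r name parttype; do
        # Compare case-insensitively, since tools differ in GUID casing.
        lower=$(printf '%s' "$parttype" | tr 'A-Z' 'a-z')
        if [ "$lower" = "$ESP_GUID" ]; then
            printf '%s\n' "$name"
        fi
    done
}

# Possible use from a rescue environment (device name is an assumption):
#   lsblk -rno NAME,PARTTYPE /dev/nvme0n1 | list_esp_partitions
```

If this prints a partition, the ESP still exists on the disk, and the next things to look at would probably be whether it is mounted anywhere and what `efibootmgr -v` lists as firmware boot entries.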

Update #1:

I tried booting from a Proxmox 9 USB drive and selected Rescue Boot, which does boot my system. Without the USB drive, however, it won't boot. Any ideas on how to fix this?

Update #2:

While the rescue boot from Update #1 gets me to the login prompt, the system then starts doing some kind of PCI reset... Really strange.

These are the last lines of the output from the upgrade

Bash:

Processing triggers for initramfs-tools (0.148.3) ...

update-initramfs: Generating /boot/initrd.img-6.14.8-2-pve

Running hook script 'zz-proxmox-boot'..

Re-executing '/etc/kernel/postinst.d/zz-proxmox-boot' in new private mount namespace..

No /etc/kernel/proxmox-boot-uuids found, skipping ESP sync.

Couldn't find EFI system partition. It is recommended to mount it to /boot or /efi.

Alternatively, use --esp-path= to specify path to mount point.

Processing triggers for rsyslog (8.2504.0-1) ...

Processing triggers for postfix (3.10.3-2) ...

Restarting postfix

Processing triggers for pve-ha-manager (5.0.4) ...

Processing triggers for shim-signed:amd64 (1.47+pmx1+15.8-1+pmx1) ...

root@ptr1-pve-2:~# apt modernize-sources

The following files need modernizing:

- /etc/apt/sources.list

- /etc/apt/sources.list.d/ceph.list

- /etc/apt/sources.list.d/pve-enterprise.list

- /etc/apt/sources.list.d/zabbix.list

Update #1 :

I tried booting from a Proxmox 9 USB drive and selected Rescue Boot and it will boot my system however, without that, it wont work. Any ideas on how to fix this?

Update #2 :

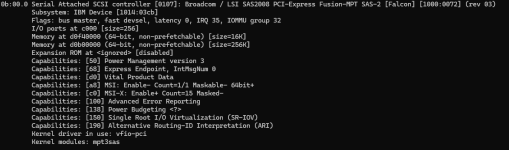

While Update #1 worked, it gets me to the login prompt but then starts to do some pci reset of some kind... Really strange.
