> I have not examined, nor taken measurements in regards of performance, so i cannot provide you with data.

Another question about write performance:

I have done some tests with fio, and I get abysmal results when the VM disk file is not preallocated.

Preallocated: around 20000 IOPS for 4k randwrite and 3 GB/s for 4M writes. (This is almost the same as my physical disk without GFS2.)

But when the disk is not preallocated, or when I take a snapshot on a preallocated drive (so new writes are no longer preallocated), I get:

60 IOPS for 4k randwrite and 40 MB/s for 4M writes.
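The post does not include the fio command line or say where fio was run, so the following is only a sketch of how the two workloads (4k random write, 4M sequential write) could be reproduced. The engine, queue depth, file size, runtime and all paths are assumptions, not values from the thread. `fallocate=none` is set so that fio does not preallocate the test file itself, which would hide the effect being discussed.

```shell
#!/bin/sh
# Write a fio job file for the two workloads quoted above.
# Assumptions (not from the thread): libaio engine, queue depth 32,
# 1 GiB test file, 60 s per job, no preallocation by fio itself.
write_fio_job() {
    target="$1"    # test file on the filesystem under test
    jobfile="$2"   # where to write the job description
    cat > "$jobfile" <<EOF
[global]
filename=$target
size=1G
direct=1
ioengine=libaio
iodepth=32
runtime=60
time_based
group_reporting
fallocate=none

[randwrite-4k]
rw=randwrite
bs=4k

[seqwrite-4m]
stonewall
rw=write
bs=4M
EOF
}

# Example (hypothetical paths):
#   write_fio_job /mnt/pve/gfs2/fio-test.bin /tmp/gfs2-write.fio
#   fio /tmp/gfs2-write.fio
```

The `stonewall` line makes the 4M job wait until the 4k job has finished, so the two results do not influence each other.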

[TUTORIAL] PVE 7.x Cluster Setup of shared LVM/LV with MSA2040 SAS [partial howto]

- Thread starter Glowsome


> I have not examined, nor taken measurements in regards of performance, so i cannot provide you with data.

OK, thanks!

It works fine without LVM in my tests, so there is no need for lvmlockd, vgscan, and all the other LVM stuff.

About performance: I have compared with OCFS2, and it's really night and day for 4k direct writes when the file is not preallocated (I'm around 20000 IOPS on OCFS2 and 200 IOPS on GFS2).

I have also noticed that a qcow2 snapshot lowers throughput to 100~200 IOPS for 4k direct writes. It also happens with local storage, so I'll look into implementing external qcow2 snapshots (snapshot in an external file). I don't see a performance regression with external snapshots.

Hey @spirit & @Glowsome thank you for such an informative thread.


I have 6 hosts in my cluster and 2 MSAs that I am trying to use as clustered distributed storage. I initially tried using LVM on top of iSCSI to implement that but soon found out that the files were not being replicated across nodes and realised I needed GFS2. So I've installed and configured to the best of my knowledge (I don't want to use LVM if I can avoid it so have configured only GFS2 & DLM) but I don't get a prompt back when I try to mount - here is my dlm.conf

    log_debug=1
    protocol=tcp
    post_join_delay=10
    enable_fencing=0
    lockspace Xypro-Cluster nodir=1

Output of dlm_tool status:

    cluster nodeid 1 quorate 1 ring seq 9277 9277
    daemon now 2743 fence_pid 0
    node 1 M add 16 rem 0 fail 0 fence 0 at 0 0
    node 2 M add 710 rem 0 fail 0 fence 0 at 0 0
    node 3 M add 785 rem 0 fail 0 fence 0 at 0 0
    node 4 M add 751 rem 0 fail 0 fence 0 at 0 0
    node 5 M add 816 rem 0 fail 0 fence 0 at 0 0
    node 6 M add 1145 rem 0 fail 0 fence 0 at 0 0

I'd appreciate any help.
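As a quick sanity check before debugging the mount itself, the status output above can be reduced to a member count. This is only a sketch: it assumes that the "M" flag in the third column marks a node that is currently a member, which the output suggests but the thread does not state. The function name is made up for illustration.

```shell
#!/bin/sh
# Count nodes that dlm_tool status reports with the "M" flag.
# Assumption: "M" marks a current member of the DLM cluster.
count_dlm_members() {
    awk '$1 == "node" && $3 == "M" { n++ } END { print n + 0 }'
}

# On a cluster node:
#   dlm_tool status | count_dlm_members
```

For the output posted above this would report 6, matching the six hosts in the cluster.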

Hi,

Here is my dlm.conf:

Code:

    # Enable debugging
    log_debug=1
    # Use sctp as protocol
    protocol=sctp
    # Delay at join
    #post_join_delay=10
    # Disable fencing (for now)
    enable_fencing=0

I'm using protocol=sctp because I have multiple corosync links, where it is mandatory.

Then I format my block device with GFS2 (here I'm using a multipath iSCSI LUN):

    mkfs.gfs2 -t <corosync_clustername>:testgfs2 -j 4 -J 128 /dev/mapper/36742b0f0000010480000000000e02bf3

And finally I mount it:

    mount -t gfs2 -o noatime /dev/mapper/36742b0f0000010480000000000e02bf3 /mnt/pve/gfs2

Hi,

I’m writing this post after testing the Glowsome configuration for about two months, followed by four months of production use on three nodes with mixed servers connected via FC to a Lenovo De2000H SAN.

I want to thank @Glowsome for the excellent work they’ve done.

I sincerely hope that this solution can become officially supported in Proxmox in the future.

Thank you again!


There is this tutorial (https://forum.proxmox.com/threads/poc-2-node-ha-cluster-with-shared-iscsi-gfs2.160177/) which I used to set up a 2-node cluster in our lab: FC SAN (all-flash storage), GFS2 directly on the multipath device (simple setup).

From a feature perspective everything seems to work, and we have all the basic features we need (snapshots + SAN). The only thing we miss is snapshots for VMs with a TPM 2.0 device, which blocks them.

In the lab it seems to be stable, performance is also OK, and even discard is supported on GFS2.

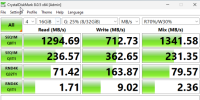

Some performance numbers from a Windows VM:

The sequential speeds show that the 8 Gbit HBAs are the bottleneck in this case.


I have issues with DLM and mounting on boot with this setup, although I'm not using LVM but the raw LUNs themselves. I've added some dependencies to the fstab entries, but the automatic mount still somehow ends up with the "mount" commands stuck indefinitely in "kern_stop". This can only be fixed by rebooting.


My current workaround is to define the mount as "noauto" and mount it manually after the Proxmox box is completely booted. That works fine up until now.

Here's my fstab entry:

Code:

    /dev/disk/by-uuid/8ee5d7a9-7b19-4b45-b388-bb5758c20d77 /mnt/pve/storage-gfs2-01 gfs2 _netdev,noauto,noacl,lazytime,noatime,rgrplvb,discard,x-systemd.requires=dlm.service,x-systemd.requires=nvmf-connect-script.service,x-systemd.requires=pve-ha-crm.service,nofail 0 0
    /dev/disk/by-uuid/1a89385a-965c-4014-9b83-f90a1f3782f6 /mnt/pve/storage-gfs2-02 gfs2 _netdev,noauto,noacl,lazytime,noatime,rgrplvb,discard,x-systemd.requires=dlm.service,x-systemd.requires=nvmf-connect-script.service,x-systemd.requires=pve-ha-crm.service,nofail 0 0

With the x-systemd.requires and the _netdev flag, systemd adds the following dependencies:

Code:

    After=dlm.service nvmf-connect-script.service pve-ha-crm.service
    Requires=dlm.service nvmf-connect-script.service pve-ha-crm.service
    After=blockdev@dev-disk-by\x2duuid-1a89385a\x2d965c\x2d4014\x2d9b83\x2df90a1f...target

DLM should obviously be started, and the NVMe-over-TCP connection should be established. The last bit was a first stab at a workaround, trying to wait for corosync to be ready, but it didn't work reliably. Systemd automatically added the "After=blockdev@...target", which seems fine.

I don't know whether it's a race condition because of mounting two shares at once - or is it a fencing related issue? This is my default DLM config, I'm using sctp because I've got two rings defined in corosync. I was already experimenting with disabling additional fencing related options, though. I wasn't sure whether disabling something like "enable_quorum_lockspace" would be a good idea...

Code:

    # cat /etc/default/dlm
    DLM_CONTROLD_OPTS="--enable_fencing 0 --protocol sctp --log_debug"
    # new settings might add
    # --enable_startup_fencing 0 --enable_quorum_fencing 0

Can anyone see an error I've overlooked?

Hi einhirn: you don't have to use DLM with shared LVM; it is required by GFS2, not by shared LVM. I recommend this reference: https://kb.blockbridge.com/technote/proxmox-lvm-shared-storage/

> it's required by GFS

Exactly - that's what I'm using. OK, I didn't mention that other than in the "fstab" lines, but since this thread is about using GFS2 I didn't think it necessary.

Btw: I'm also using shared thick LVM storage via iSCSI + multipathing and NVMe-over-TCP, but I really like to use thin provisioning for VMs and possibly snapshots, even though I was surprised that qcow2 snapshots are internal (i.e. in the same file) in PVE, but that's a different topic.

> I have issues with DLM/Mount on boot with this setup - although I'm not using LVM but the raw LUNs themselves. [...] Can anyone see an error I've overlooked?

It seems that there are some dependencies to take care of: dlm.service needs

Code:

    [Unit]
    After=pve-ha-crm.service

to be able to mount GFS2 on boot.
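One way to apply that ordering without editing the packaged unit file is a systemd drop-in. The sketch below is an assumption about how this could be done, not something the post spells out: the drop-in file name is a hypothetical choice, and the function takes an optional root directory so it can be tried in a scratch directory first.

```shell
#!/bin/sh
# Write a drop-in that orders dlm.service after pve-ha-crm.service.
# The drop-in file name is a hypothetical choice; the unit root
# defaults to /etc/systemd/system and can be overridden for a dry run.
write_dlm_dropin() {
    unit_root="${1:-/etc/systemd/system}"
    dropin_dir="$unit_root/dlm.service.d"
    mkdir -p "$dropin_dir"
    printf '[Unit]\nAfter=pve-ha-crm.service\n' > "$dropin_dir/after-pve-ha-crm.conf"
}

# On a node (as root):
#   write_dlm_dropin
#   systemctl daemon-reload
#   systemctl show -p After dlm.service   # should now list pve-ha-crm.service
```

A drop-in survives package upgrades of the DLM unit, which a direct edit of the shipped unit file would not.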

BTW: You also have to remove the $remote_fs dependency from /etc/init.d/rrdcached, otherwise you get a dependency cycle:

Code:

    remote-fs.target -> rrdcached.service -> pve-cluster.service
          ^                                        |
          |                                        V
      gfs2.mount   <-   dlm.service   <-   corosync.service

rrdcached never writes to a remote filesystem, AFAIK.

I'll try those and check whether it helps...

Hi there,

I must say we did configure a production cluster with GFS2, following the instructions on this thread, and it has been working like a charm for around a year, but after some time the storage has become completely unstable and has left the cluster unusable.

For the moment, we've switched to RAW storage. Losing the ability to have snapshots is preferable to having such an unstable filesystem.

Just wanted to leave this comment as a warning to potential users: GFS2 does work, but in the long term it can also become corrupted (maybe it requires some additional maintenance?)


The main issue with GFS2 (and OCFS2) is that they are not really supported, so if something bad happens you are on your own. I might be wrong, but I also remember that there isn't much development on them. Luckily there is a high chance that Proxmox VE 9 will feature snapshot support with qcow2 on LVM-thick in a VMFS-like fashion (there is development work at the moment, but I don't know whether it will be ready in time). This should cover most use cases for which people use OCFS2 or GFS2, and it will be officially supported.


Until the snapshot/qcow2 support on LVM-thick is available, this might be a workaround:

> Alternatives to Snapshots
>
> If an existing iSCSI/FC/SAS storage needs to be repurposed for a Proxmox VE cluster and using a network share like NFS/CIFS is not an option, it may be possible to rethink the overall strategy; if you plan to use a Proxmox Backup Server, then you could use backups and live restore of VMs instead of snapshots.
>
> Backups of running VMs will be quick thanks to dirty bitmap (aka changed block tracking) and the downtime of a VM on restore can also be minimized if the live-restore option is used, where the VM is powered on while the backup is restored.

https://pve.proxmox.com/wiki/Migrate_to_Proxmox_VE#Alternatives_to_Snapshots

Now obviously this isn't a solution for every use case, but maybe it's enough for you. Even if you use another backup software and have a limited budget, you could still use PBS only for "pseudo-snapshots" without obtaining a subscription, as long as you can live with the nag screen. I wouldn't do this as a permanent solution without obtaining a support subscription, but it can serve as a workaround until qcow2 on LVM-thick is supported.

> Luckily there is a high chance, that Proxmox9 will feature snapshot support with qcow2 on LVM-thick (there is development work at the moment, I don't know however whether it will be ready in time) in a VMFS-like fashion. This should cover most usecases why people use ocfs2 or gfs2 and will be supported officially.

I stand corrected: the Proxmox VE 9 beta has snapshot support on LVM for iSCSI and FC SANs, without the overhead of a filesystem. So @javieitez and other SAN owners can finally have snapshots without using unsupported stuff like OCFS2 or GFS2.

Hello everyone,

Support for LVM Thick is a big step, but the question is how many people will use it. I personally manage several Proxmox and VMware environments. The VMware environments will eventually be converted to Proxmox, but unfortunately, that will only be possible if LVM Thin support with snapshots on shared storage is finally available. That's the closest thing to VMFS. Unfortunately, these environments are all at a size where Ceph would be too complex and wouldn't deliver the appropriate performance for certain workloads. They're all 3-4 server environments with different SANs. Sure, you can make the LUN 16 TB with LVM Thick, but not every SAN can support deduplication below that. And each snapshot of a VM always takes up the entire reserved space of the VM. 2-3 snapshots, and you've quickly used up 1 TB.

I hope there will be more development in this regard in PVE version 9.


> but unfortunately, that will only be possible if LVM Thin support with snapshots on shared storage is finally available.

Why is LVM thin required? Your SAN is likely thin provisioned anyway, so there is no actual benefit in thin provisioning on top of that. The problem was that there was no snapshot support, not the LVM thin part.

> Sure, you can make the LUN 16 TB with LVM Thick, but not every SAN can support deduplication below that

Your SAN either supports dedup or it doesn't. Not sure what the relevance is for LVM in either case.

> And each snapshot of a VM always takes up the entire reserved space of the VM. 2-3 snapshots, and you've quickly used up 1 TB.

That's true no matter what kind of snapshot you use, and whether LVM is thin or thick. So what?

> Why is LVM thin required? your SAN is likely thin provisioned anyway, so it serves no actual benefit to thin provision above that. the problem was that there was no snapshot support, not the lvm thin part.

Adding to that: LVM-thin can't be used as shared storage because there is no way to ensure its consistency inside a cluster.

As far as I know, this is a technical limitation that is not present in LVM-thick.

Hello everyone,

I'd like to explain why LVM-Thin is so important, especially for shared storage operations. First, because Proxmox doesn't support a cluster-capable COW file system like ESXi does with VMFS, which allows VM images to be made available to all hosts via block storage. While this is compensated for with LVM-Thick, it's not very efficient.

The reason is quite simple; anyone can try it out for themselves. If you deploy an LVM-Thick with a size of, say, 1 TB, and have a VM on it that can take up 500 GB of space, but it only requires 100 GB of space, these 500 GB of space are still reserved for the VM. If I now create a snapshot on the LVM-Thick, the VM already requires 1 TB of storage, even though the actual storage usage would still be only 100 GB + changed blocks. This means you can no longer create VMs or create snapshots on the LVM.

Of course, this can be compensated for by creating the LVM with, for example, 10 TB of size, but that's not a solution either, since in this case the 10 TB of storage must also be available in the SAN, unless the SAN supports thin provisioning or deduplication. Overall, this is currently not a satisfactory solution, since you shouldn't assume that every SAN supports thin provisioning or deduplication. You have to provide a solution that can truly work comfortably with every SAN.

Also, the LVM LUN should only be as large as the actual physical storage space in the SAN, otherwise confusion can quickly arise, and the operators of such an environment, who have nothing to do with storage and SAN, might think they suddenly have that much storage space (10 TB) available. LVM Thin helps enormously here, since you only use as much storage space as you need, even if you could use more. And snapshots, even if they are nominally as large as the VM (here 500 GB), only take up the space they actually need.
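The numbers in this example can be written out as a small calculation. This is only a sketch of the arithmetic as described in the post: it takes the post's premise that a thick snapshot reserves the full provisioned disk size, and the 10 GB of changed blocks per snapshot is a made-up figure for illustration.

```shell
#!/bin/sh
# Space taken in the volume group, in GB, for one VM plus snapshots.
# thick: every snapshot reserves the full provisioned disk size
#        (the premise of the post above).
# thin:  only written data plus changed blocks is consumed.
thick_reserved_gb() {   # args: provisioned_gb snapshots
    echo $(( $1 * (1 + $2) ))
}
thin_used_gb() {        # args: used_gb changed_gb_per_snapshot snapshots
    echo $(( $1 + $2 * $3 ))
}

thick_reserved_gb 500 1    # prints 1000
thin_used_gb 100 10 1      # prints 110 (10 GB changed blocks is hypothetical)
```

With one snapshot, the thick case already fills the whole 1 TB volume group from the example, while the thin case stays close to the 100 GB actually written.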

If you rely on a SAN that operates like a file system and supports thin provisioning with deduplication and compression, and has snapshot support, you might want to, for example, snapshot all VMs on the LVM, as is common with some VMware plugins, and then create a hardware snapshot on the SAN and then delete the snapshots on the LVM so that they only exist in the snapshot on the SAN. This concept is also not possible with LVM Thick due to its limitations.

It's not necessary for Proxmox VE to provide a cluster file system such as VMFS for SAN operation, but then you should provide a solution that enables a similar operating concept, and in this case, that can only be "LVM Thin." Anything else is unfortunately not a sensible solution. The widely held misconception that SANs are no longer needed has also been around for 25 years. A cluster file system like Ceph can never be as powerful as a SAN, as this would require hardware that would far exceed the cost of a SAN.

Snapshot support for the VMs' TPM disks would also be necessary, as this has unfortunately become essential for virtualizing Windows systems and for compliance with security regulations.


<snip>

I fear we have a misunderstanding. I can see why one would want thin provisioning on shared storage, so that you can keep using your existing storage hardware without sacrificing features compared to VMware. The trouble is that implementing shared storage on LVM-thin is (at least to my understanding) more challenging than on LVM-thick for technical reasons, if it is possible at all. But maybe I misremember and it might actually be possible. Whether this will happen is a different matter, though. The lack of snapshots was the biggest issue for many folks considering a migration from VMware to Proxmox VE, and that problem is solved now.

> Snapshot support for the VMs' TPM disks would also be necessary, as this has unfortunately become essential for virtualizing Windows systems and for compliance with security regulations.

Agreed. AFAIK there are plans for how to implement TPM with qcow2 images, but I have no idea whether any actual work has been done.

Possible solutions for using LVM Thin in shared SAN storage could be the implementation of "Atomic Test and Set" or "Atomic Test and Set with SCSI Reservations" (SCSI COMPARE and WRITE – ATS). This means that a protected area or blocks must be created in the LVM metadata area that function as a journal and mailbox area and are dynamically allocated when the LVM is mounted. How this works with VMFS is explained here, for example: https://virtualcubes.wordpress.com/2017/11/27/vmfs-locking-and-ats-miscompare-issues/

But actually, if you implement something like this for LVM, you're almost not that far removed from a file system. A file system would be just a VFS layer with a corresponding metadata database. Cluster locking for metadata would be similar here.

A file system would, of course, have the advantage of being able to store the configuration there in addition to the VM disk files. Then you would only need PMXFS under /etc/pve for the host configuration.

When I look at file systems like OCFS or GFS2, which are designed for SAN operation, you can see from the way locking is handled that these file systems are only intended for data exchange and not for running VMs. If you have applications that write a file with a fixed length and nothing changes within the file, these file systems might work. But not for VM disks that don't have a fixed length, because data is constantly being appended or data is changing within the file.

A SAN file system for VMs would have to be COW, because that's the only way to ensure that data being written is always written to new sectors and never overwritten in place. LVM is also read-modify-write in this case. This doesn't cause any problems with thick provisioning, of course, but with thin provisioning it won't work and would lead to the same problems that OCFS and GFS2 have.

For people who can get by with a 2-node PVE cluster, a VFS layer for ZFS would be good, for example. This layer does nothing more than provide the ZFS mount point 1:1 with all file system functions (VFS) in the other node. This would allow ZFS to be used directly on shared SAS storage and be used by both hosts. The host that has directly mounted the pool always writes to it. The other node that has not mounted the pool must read and write all data over the network connection. Unfortunately, it is not easy to implement this with NFS, as NFS restricts ZFS's file system functions too much. A corresponding implementation existed in the Solaris cluster back then under the name - Global Filesystem -. But do not confuse this with the GFS2 SAN file system I mentioned above. The Global File System was a VFS layer for Solaris ZFS that allowed access to the ZFS datasets and volumes of another node in the cluster as if the storage were locally mounted. NetApp storage systems use the same principle because the storage is also active-passive. The active-active principle is only achieved by sharing the storage with other nodes via the cluster network ports. In a larger NetApp cluster, this is also shared with all other nodes in the cluster.


> If you deploy an LVM-Thick with a size of, say, 1 TB, and have a VM on it that can take up 500 GB of space, but it only requires 100 GB of space, these 500 GB of space are still reserved for the VM. If I now create a snapshot on the LVM-Thick, the VM already requires 1 TB of storage, even though the actual storage usage would still be only 100 GB + changed blocks.

This is only true if the underlying storage is also thick provisioned.

> If you rely on a SAN that operates like a file system and supports thin provisioning with deduplication and compression, and has snapshot support, you might want to, for example, snapshot all VMs on the LVM, as is common with some VMware plugins, and then create a hardware snapshot on the SAN and then delete the snapshots on the LVM so that they only exist in the snapshot on the SAN. This concept is also not possible with LVM Thick due to its limitations.

You are conflating hardware snapshots with all snapshots. It's true that PVE does not provide any built-in orchestration tools for hardware snapshotting, but the option to make your own was always available using the PVE and storage APIs, and it could work exactly as you describe the VMware orchestration function. Since this approach is too hardware dependent, and no storage vendor has (as of yet) decided to make such a plugin, the PVE team chose to write support for QEMU snapshots instead. If you require the same coordination between external snapshot and guest snapshot, it would require the same kind of orchestration using PBS hookscripts regardless of which method you choose.

The long and the short of it is that

1. storage utilization efficiency is subject to the underlying storage. If you are using dumb block storage (eg, simple raid controller) you are correct- lvm thick will sequester the entirety of the volume. Most modern storage SANs thin provision and compress volumes regardless of what the host puts on them. better ones dedup too.

2. snapshots use space. period. deltas aren't free.

3. guest snapshot orchestration is not provided, and is usually not necessary since file system quiescence is usually enough for consistency. If your application does require it, you have a choice of writing the orchestration yourself or request it from the devs (ideally you'd write it and offer it to the devs for inclusion.)