Proxmox VE 8.4 released!

- Thread starter t.lamprecht


I tried to upgrade to this version and am now getting a kernel panic. No idea how to get out of this.

If you have console access, choose an earlier kernel in the boot menu.
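Once the node is up again on the older kernel, it can be kept as the default until the problem is understood. A minimal sketch, assuming the node uses `proxmox-boot-tool` and that `6.8.12-2-pve` (a placeholder here) is the known-good version; by default it only prints the commands:

```shell
#!/bin/sh
# Sketch: keep booting a known-good kernel after choosing it once in the boot menu.
# KNOWN_GOOD is an assumption; use a version shown by "proxmox-boot-tool kernel list".
KNOWN_GOOD="${KNOWN_GOOD:-6.8.12-2-pve}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    echo "+ $*"
    [ "$DRY_RUN" = "1" ] || "$@"
}

pin_known_good_kernel() {
    run proxmox-boot-tool kernel list                # show the installed kernels
    run proxmox-boot-tool kernel pin "$KNOWN_GOOD"   # boot this version by default
}

pin_known_good_kernel
# Later, once a fixed kernel is installed: proxmox-boot-tool kernel unpin
```

Run it with `DRY_RUN=0` as root on the affected node to actually apply the pin.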


Hi, I'm still very new to Proxmox. How can I upgrade from 8.3 to 8.4?

You can use the "Updates" panel, which can be found in the node's navigation menu.

Ensure you have valid package update repositories set up; this can be checked in the Repositories panel, also at the node level.
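For those who prefer the shell, the same upgrade can be sketched on the command line. This is only an illustration, assuming the repositories are already configured correctly; it prints the commands unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# Sketch: command-line counterpart of the "Updates" panel, run as root on one node.
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    echo "+ $*"
    [ "$DRY_RUN" = "1" ] || "$@"
}

upgrade_node() {
    run apt update &&          # refresh package lists from the configured repositories
    run apt full-upgrade &&    # use full-upgrade rather than plain "upgrade" on Proxmox VE
    run pveversion             # print the installed pve-manager version afterwards
}

upgrade_node
```

In a cluster, the usual approach is to repeat this node by node.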

I assume I have to upgrade all three nodes, correct?

Exactly.

Any idea why, after updating Proxmox1, the VMs and CTs say start OK but nothing happens?

You mean the CTs and VMs do not actually start? That sounds off and might be better investigated in a separate new thread; checking the system log for any errors would be a good first step.
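A first look at the system log could be sketched like this. The guest ID `100` is a placeholder, and the script only prints the commands unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# Sketch: gather the first hints when guests report "start OK" but do not run.
VMID="${VMID:-100}"       # hypothetical guest ID, replace with a real one
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    echo "+ $*"
    [ "$DRY_RUN" = "1" ] || "$@"
}

collect_start_errors() {
    run journalctl -b -p err --no-pager   # errors logged since the current boot
    run systemctl --failed --no-pager     # units that failed to start
    run qm start "$VMID"                  # start from the shell to see the error text directly
}

collect_start_errors
```

The output of these commands is what a new thread about the problem would most likely be asked for.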

I tried to upgrade to this version and now getting a kernel panic. No idea how to get out of this

Hi, can you share the output/error message?

Hi

First of all thank you very much for this great software.

I always install all updates, so my system is always up to date.

This time was no exception, so now my system is updated to 8.4.1.

Everything seems to be working very well, except for the following:

In the past I installed a VM with macOS Ventura and it worked very well, but now when I change media=cdrom to cache=unsafe in my VM config file and try to start the VM to begin the installation, the VM does not start and I get this error in the Proxmox logs: "error ide0 explicit media parameter is required for iso images"

Is something wrong with my setup, or is this caused by a change in Proxmox 8.4?

Any ideas appreciated.

Thank you very much.


Upgraded one of the nodes in my Proxmox/Ceph/Thunderbolt cluster to the new release (won't upgrade Ceph until all nodes are on the new release); seems good so far. Have started to play with virtioFS passthrough of CephFS to my Docker VMs (to replace GlusterFS inside the VMs).


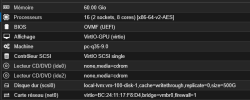


2nd node: fine.

3rd node: oh dear, it hangs at boot on the loading kernel message and never seems to do anything with the RAM disk. Reverting to kernel 6.8.12-2 got me back up and running.

It hangs here and doesn't seem to touch the initramfs. I even tried regenerating it for that specific kernel: nothing. Turned Secure Boot off: nothing.

Reverting to the older kernel worked.

Process to get the kernel working:

1. Uninstall kernel 6.8.12-9.

2. Uninstall and remove the DKMS module for i915 (I had seen a couple of DKMS errors during the upgrade; normally these are harmless).

3. Reinstall the kernel.

OK.

4. Re-enabled Secure Boot.

On reboot I got a shim error message.

5. Turned off Secure Boot.

6. Now it booted just fine. I guess I need to redo all the Secure Boot setup.

I don't understand why this broke.

The shim is there, so why would it be a bad shim?

Code:

    root@pve1:~# efibootmgr -v
    BootCurrent: 0000
    Timeout: 1 seconds
    BootOrder: 0000,0001
    Boot0000* proxmox HD(2,GPT,de159af4-f1a7-4b0d-a39d-000986476331,0x800,0x200000)/File(\EFI\proxmox\shimx64.efi)
    Boot0001* UEFI OS HD(2,GPT,de159af4-f1a7-4b0d-a39d-000986476331,0x800,0x200000)/File(\EFI\BOOT\BOOTX64.EFI)..BO

The shim is there and appears to be a correct THALES signed MS boot shim.

Code:

    root@pve1:/boot/efi/EFI/proxmox# ls -l
    total 5972
    -rwxr-xr-x 1 root root 112 Apr 11 15:53 BOOTX64.CSV
    -rwxr-xr-x 1 root root 88568 Apr 11 15:53 fbx64.efi
    -rwxr-xr-x 1 root root 201 Apr 11 15:53 grub.cfg
    -rwxr-xr-x 1 root root 4209256 Apr 11 15:53 grubx64.efi
    -rwxr-xr-x 1 root root 851368 Apr 11 15:53 mmx64.efi
    -rwxr-xr-x 1 root root 952384 Apr 11 15:53 shimx64.efi

--edit--

Fixed by installing the signed version of the kernel; for some reason that doesn't get installed as part of the upgrade process.
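The fix from the edit could be sketched as follows. The package name `proxmox-kernel-6.8.12-9-pve-signed` is an assumption based on the usual Proxmox VE 8 naming, not something quoted in the post; the script only prints the commands unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# Sketch: check Secure Boot state and install the signed build of a kernel.
# KVER and the "-signed" package suffix are assumptions; verify them with the dpkg listing.
KVER="${KVER:-6.8.12-9-pve}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    echo "+ $*"
    [ "$DRY_RUN" = "1" ] || "$@"
}

install_signed_kernel() {
    run mokutil --sb-state                          # is Secure Boot currently enabled?
    run dpkg -l "proxmox-kernel-${KVER}*"           # which builds of this kernel are installed?
    run apt install "proxmox-kernel-${KVER}-signed" # pull in the signed build
    run proxmox-boot-tool refresh                   # re-sync boot entries afterwards
}

install_signed_kernel
```

If the dpkg listing shows only the unsigned package, that would match the shim error seen with Secure Boot enabled.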

My ceph cluster for reference


@proxmox Staff - Just a thank-you note for the great work. This update came just 48 hours before we upgraded several multi-location clusters during a maintenance window that was scheduled a while ago and had only been approved for 8.3.6 and Ceph 18.2.4, before the 8.4 and 19.2 releases on the Enterprise repo.

The upgrade to the latest PVE and Ceph went smoothly while maintaining high availability for customer VMs.

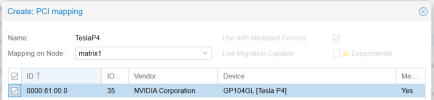

Is there something special needed to get live migration of mdev devices working (a problem with the mapping)?

Here is my entry in the conf file:

hostpci0: mapping=TeslaP4,mdev=nvidia-65,pcie=1

When I attempt a migration I get a popup that says:

Cannot migrate running VM with mapped resources: hostpci0

Is that because I have the mapping TeslaP4? I have 3 nodes and all of them have the same mapping. Wouldn't it make sense to use a mapping?

I'm on 8.4.1 and the opt-in 6.14 kernel.

Edit: Looks like it isn't supported? I see here that the option is disabled. I tried it without a mapping and it wouldn't let me do it either.


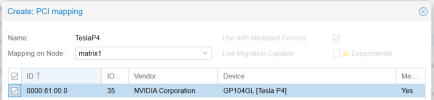

Is that because I have the mapping TeslaP4? I have 3 nodes and all of them have the same mapping. Wouldn't it make sense to use a mapping?

A Resource Mapping? There's an option in the mapping to allow live migration.

I just edited my post with a screen capture showing that the option is disabled. I even removed the mapping and tried to add it again, and it wasn't enabled. Maybe these old cards aren't supported, or I'm missing some other option to enable elsewhere?

Edit: Yup, it was on the top level mapping:
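For anyone checking the same thing from the shell, a rough sketch follows. The guest ID `100`, the target node `pve2`, and the property name `live-migration-capable` in the mapping file are assumptions, not taken from the posts above; the script only prints the commands unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# Sketch: inspect the cluster-wide PCI mapping and try an online migration.
VMID="${VMID:-100}"        # hypothetical guest ID
TARGET="${TARGET:-pve2}"   # hypothetical target node name
DRY_RUN="${DRY_RUN:-1}"    # 1 = only print the commands, 0 = execute them

run() {
    echo "+ $*"
    [ "$DRY_RUN" = "1" ] || "$@"
}

check_and_migrate() {
    run pvesh get /cluster/mapping/pci                                # list the PCI resource mappings
    run grep -n live-migration-capable /etc/pve/mapping/pci.cfg       # assumed name of the flag
    run qm migrate "$VMID" "$TARGET" --online                         # attempt the live migration
}

check_and_migrate
```

If the grep finds nothing, the flag is probably not set on the top-level mapping yet.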


It'll be interesting to see how it works for you. I tried it between two different models of Intel GPU; no surprise, that died on its ass.

I was able to migrate my Tesla P4 between 2 nodes. It takes a moment because of the constantly changing video memory.

But still great for offline migrations.

I do have some issues I'm investigating that are unrelated to migration. Using the patched driver on the opt-in kernel, I see this on my host:

WARNING: CPU: 2 PID: 631019 at ./include/linux/rwsem.h:85 remap_pfn_range_internal+0x4af/0x5a0

and this in my VM:

WARNING: CPU: 3 PID: 557 at drivers/pci/msi/msi.c:888 __pci_enable_msi_range+0x1b3/0x1d0

I don't remember seeing this before, but I tried rolling back to the 6.8 kernel with the drivers I know were working and I still had the issue, so I'm not sure when I started getting those warnings. Everything is working, so I dunno.

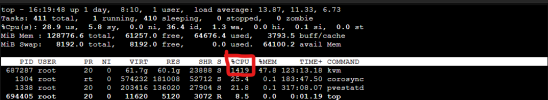

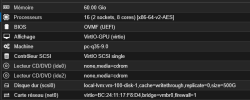

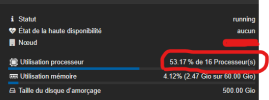

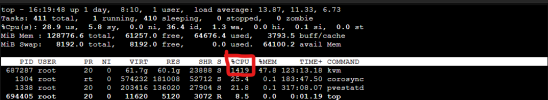

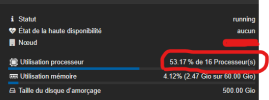

Since the upgrade, all my VMs (Windows and Linux) on all my servers are very slow; it's now impossible to use them. Any idea? It worked well before the upgrade!

Starting a VM now takes more than 5 minutes, and the connection to a VM's console is also really slow!

Is this normal with only one Windows 11 VM started?

My Windows VM (no change to the configuration):

The VM has just been started!

I ran a CPU benchmark on my VM:

Single-thread CPU performance is 7 now; it was 260 before the upgrade.

Multi-thread CPU performance is 100 now; it was 2350 before the upgrade!


Release Notes:

I tried an OVMF VM without a VirtIO RNG device and PXE (DHCP+TFTP) was working. Is there any other special condition for this breaking change?

Maybe only PXE via HTTP(S)?

It also works if you don't have an EFI disk (but then you can't persist EFI settings) or if the virtual CPU type includes RDRAND support (e.g., host).
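For a VM that is affected, adding a VirtIO RNG device from the shell could look roughly like this. The guest ID `100` is a placeholder, and the script only prints the command unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# Sketch: give an OVMF guest an entropy source so PXE boot keeps working.
VMID="${VMID:-100}"       # hypothetical guest ID
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    echo "+ $*"
    [ "$DRY_RUN" = "1" ] || "$@"
}

add_rng_device() {
    run qm set "$VMID" --rng0 source=/dev/urandom   # attach a VirtIO RNG device
    # Alternative mentioned above: a CPU type with RDRAND, e.g. qm set "$VMID" --cpu host
}

add_rng_device
```

The change takes effect the next time the VM is started.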

Could you be more specific about what you mean by "there's been some development on the kernel side that should make this a lot easier and more robust recently"?

In particular, do you mean on Proxmox's kernel side or in Linux in general? I have many problems with that, which is why I asked.

Upstream in Linux: the mount API got extended and idmapped mounts are now supported pretty much across the board, so we don't need workarounds like shiftfs, which would previously have been required to implement this.

As that ZFS release has some other relatively big changes, e.g. how the ARC is managed, especially w.r.t. interaction with the kernel and marking its memory as reclaimable, we did not feel comfortable with including ZFS 2.3 already, but rather want to ensure those changes can mature and are well understood.

Dear @t.lamprecht, is there any timeline for the ZFS 2.3 implementation?