The question is: what's the benefit of spending development effort on this when professional users won't need it? I'd prefer that developers' effort be spent on features which might convince my employer to switch their VMware cluster to Proxmox VE with a support subscription, instead of appeasing the homelab crowd, which won't pay for further development anyhow.

We are all users, regardless of whether we pay a subscription or not. Business users are not first-class citizens and homelabbers are not second-class citizens. What some users do not offer the Proxmox project in monetary support, they make up for in the volume of patches they send and the documentation and content they create (much of Proxmox's wide reach comes from the vast amount of content created for it, mostly by homelabbers).

I hence wholeheartedly invite you to reconsider this logic. We are all users, and we must be able to consolidate and debate issues with our combined interests in mind. Not only is this dichotomy of users unethical, it is also inaccurate, as many people started as homelabbers before they upgraded their use and started making money off Proxmox.

I'm an administrator for 7 VMware ESXi environments at the moment (so no homelabber), and I would very much like good information on how much RAM a host has AVAILABLE.

Look at the "free -h" command earlier in this thread: you can clearly see that 18 / 19 GiB are "available" for processes to use. If I had that information, I'd know that I can migrate another VM with a current RAM usage of 16 GiB to this host. Of course the host would have to reduce the amount of files it caches, which would make everything else a little slower (by how much, we can argue), but this much RAM is AVAILABLE for processes (according to "free").

If the host displays "98%" full, I'd have to assume I can't migrate this VM here, because I'd run into OOM and the host would start killing crucial processes.
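To make the gap between those two readings concrete, here is a minimal Python sketch. It is not how Proxmox computes its display. It only parses `/proc/meminfo`-style text and compares a "total minus free" percentage with one based on `MemAvailable`, the kernel field behind the "available" column of `free`. The function names and the sample figures are hypothetical; the numbers are made up to mirror the 18 of 19 GiB example quoted above.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (in KiB)."""
    fields = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Key: value" pairs
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])
    return fields


def used_percent_by_free(info):
    """Usage when cache counts as 'used': (total - free) / total."""
    return 100.0 * (info["MemTotal"] - info["MemFree"]) / info["MemTotal"]


def used_percent_by_available(info):
    """Usage when reclaimable cache counts as usable: (total - available) / total."""
    return 100.0 * (info["MemTotal"] - info["MemAvailable"]) / info["MemTotal"]


def fits(info, needed_kib, headroom_kib=0):
    """True if 'needed_kib' fits into MemAvailable, minus an optional safety margin."""
    return info["MemAvailable"] - headroom_kib >= needed_kib


# Hypothetical host: 19 GiB total, about 2% truly free, 18 GiB available.
SAMPLE = """MemTotal:       19922944 kB
MemFree:          398458 kB
MemAvailable:   18874368 kB
"""

if __name__ == "__main__":
    info = parse_meminfo(SAMPLE)
    vm_kib = 16 * 1024 * 1024  # the 16 GiB VM from the example
    print(f"used (free-based):      {used_percent_by_free(info):.0f}%")
    print(f"used (available-based): {used_percent_by_available(info):.0f}%")
    print(f"16 GiB VM fits:         {fits(info, vm_kib)}")
```

On a real Linux host, the same functions can be fed the contents of `/proc/meminfo` instead of `SAMPLE`. The `headroom_kib` parameter is only there because `MemAvailable` is a kernel estimate, so leaving a margin before migrating seems prudent.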

Is that an unreasonable use case? Just a homelab thing? Why?

See https://www.linuxatemyram.com/ for an explanation of what "available" means.

I wholeheartedly agree with this definition of what free means, regardless of what the right thing to do is. Failing to engage with and acknowledge this simple perspective seems to me to speak of a problem in our community, one that is manifesting in this thread.

Some people are clearly prepared to go to incredible lengths to convince everyone that, since the VM is using a great part of this RAM for caching, and since not allowing it to use as much cache would affect its function, this amount of cache is "used memory" and hence "unavailable memory". We disagree. Many people disagree (https://www.linuxatemyram.com/). Please acknowledge that this is not a universal point of view.

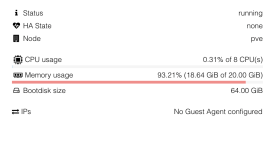

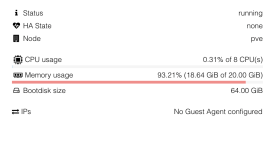

At the very least, one could argue that the red color of the RAM utilisation bar in the VM summary is misleading.

In the UI view above, red seems to suggest that action is needed. NOTHING could be further from the truth. In fact, this VM's RAM could be reduced to 4 GB and it would perform similarly.

Now that we know it is misleading, we will start ignoring it in favour of an internal probing method. But then, what use is this RAM utilisation bar? Maybe the right solution would be to remove it entirely.

I did not know what ballooning was until I did some reading. As far as I understand, this is an optional feature that requires an agent running inside the VM which is disabled by default.

Refusing to acknowledge the quite valid perspective of many people here blocks innovation, because it prevents us from thinking of simple ways to deal with this misleading UI problem. Indeed, there might be very simple fixes, but to be able to figure them out, we need to agree that the current situation could benefit from some improvement.

I also think that when someone's point of view is acknowledged, they feel more understood, which leads to more constructive dialogue. At the end of the day, you might be paying for a subscription and you might think that your use of Proxmox is more legitimate, but I doubt that you would be prepared to spend a week diving into Proxmox's source code to fix an issue, whereas many homelabbers (hobby users) are prepared to do such a thing. So by seeing eye to eye and acknowledging each other's concerns, you not only make someone feel better, you also create development resources that will help solve problems.