Many thanks for the analysis you are doing. It is not clear to me if the problem is related to the server firmware and/or the kernel. I say this because with the same firmware version the problem occurs with both 5.13 and 5.15 kernels. However in my specific case we are talking about several server clusters (Lenovo) with firmware dated October 2021. I know for a fact that there was an update in February 2022. So, should I update that server's firmware?

On a hunch, can anybody try with the 5.15.35 kernel while turning some spectre/meltdown security mitigations off (shouldn't be done if you host for untrusted and/or third parties).

You'd need to add `mitigations=off` to your kernel boot command line:

https://pve.proxmox.com/pve-docs/chapter-sysadmin.html#sysboot_edit_kernel_cmdline

Then don't forget to run `update-grub` or `proxmox-boot-tool refresh`, and also do a full host reboot.
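For GRUB-booted hosts, the edit described above could look roughly like the sketch below. It assumes the usual Debian layout where `/etc/default/grub` contains a double-quoted `GRUB_CMDLINE_LINUX_DEFAULT="..."` line; the helper name `add_grub_param` is made up for illustration and is not a Proxmox tool.

```shell
#!/bin/sh
# Sketch: append a kernel parameter to GRUB_CMDLINE_LINUX_DEFAULT.
# Assumption: the variable is set on one line with a double-quoted value.
# add_grub_param is a hypothetical helper, not part of GRUB or Proxmox.
add_grub_param() {
    file="$1"
    param="$2"
    # Skip if the parameter is already on the default command line
    if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=.*${param}" "$file"; then
        return 0
    fi
    # Insert the parameter just before the closing double quote (GNU sed -i)
    sed -i "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"/\1 ${param}\"/" "$file"
}

# On a real host, as root, this would be used like:
#   add_grub_param /etc/default/grub mitigations=off
#   update-grub
#   reboot
```

The function only edits the file it is given, so it can be tried on a copy of `/etc/default/grub` first. Removing the parameter again and re-running `update-grub` reverts the change.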

I ask because I could reproduce a Windows issue on an older host with outdated firmware that gets fixed by that. Not all of the spectre/meltdown patches are at fault, just some extra ones that came in with 5.15.27-1-pve, which I'm still investigating more closely. While the symptoms are partially the same as described in this (and other similar) threads, it's a bit hard to tell for sure that this is the exact same problem most of you are facing, which may also be more than one issue.

Note that in our case we have an identical host with a newer, up-to-date BIOS/firmware, which also fixes this particular issue on this particular hardware.
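After the reboot it may be worth confirming that the option really reached the running kernel. The following is a minimal sketch: `/proc/cmdline` and the `/sys/devices/system/cpu/vulnerabilities/` directory are standard Linux interfaces, while the helper name `cmdline_has_param` is only illustrative.

```shell
#!/bin/sh
# Sketch: check whether a parameter is on the running kernel's command line.
# cmdline_has_param is a hypothetical helper name.
cmdline_has_param() {
    # $1 = full command line, $2 = parameter to look for (whole-word match)
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

if [ -r /proc/cmdline ]; then
    if cmdline_has_param "$(cat /proc/cmdline)" "mitigations=off"; then
        echo "mitigations=off is on the running kernel command line"
    else
        echo "mitigations=off is NOT on the running kernel command line"
    fi
fi

# Per-vulnerability status as reported by the kernel, if available
for f in /sys/devices/system/cpu/vulnerabilities/*; do
    if [ -r "$f" ]; then
        printf '%s: %s\n' "$(basename "$f")" "$(cat "$f")"
    fi
done
```

With mitigations disabled, most entries would be expected to read "Vulnerable" or "Not affected" rather than "Mitigation: ...".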

Windows VMs stuck on boot after Proxmox Upgrade to 7.0

- Thread starter lolomat

Could you try setting the machine type to Q35 instead of i440fx and see if the issue happens again?

It's just the MetricVM with InfluxDB for Proxmox-Metric-Collection.

It auto-installs security patches and then reboots once a week, and guess what... it hangs once a week.

@Moayad, maybe you are referring to a different problem, because the one this thread focuses on is unrelated to the machine type (Q35 or i440fx).

As mentioned above.

Yes, update the firmware and also install intel-microcode. This should help.

Try with "mitigations=off" in GRUB.

Hi,

We have the same problem, and we are using AMD CPUs with amd64-microcode installed.

Ok, many thanks. You mean the Debian intel-microcode package, correct?

Try with "mitigations=off" in GRUB.

Hi,

I will look for an affected test system and try it.

thanks

Same story on many Windows VMs in our cluster (Windows Server 2012/2016/2019). NFS storage and SCSI disks.

And what did you try so far, after reading the hints in this thread?

I use several Lenovo server clusters (SR650). Some time ago I updated the UEFI firmware of two clusters to version 3.22, which includes an Intel microcode update (Update processor microcode to MB750654_02006C0A, MBF50656_0400320A, MBF50657_0500320A).

The intel-microcode package brings no benefit: it does not provide a newer microcode revision for these processors.

The saddest thing is that this morning a server got stuck during the automatic reboot.

Have any of you seen improvements after updating the microcode?
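To compare what the firmware or the microcode package delivered with what is actually loaded, the revision reported by the kernel can be read from `/proc/cpuinfo`. This is only a sketch; the helper name `microcode_revision` is made up for illustration.

```shell
#!/bin/sh
# Sketch: print the microcode revision of the first CPU listed in a
# cpuinfo-style file (defaults to /proc/cpuinfo).
# microcode_revision is a hypothetical helper name.
microcode_revision() {
    awk -F': ' '/^microcode/ { print $2; exit }' "${1:-/proc/cpuinfo}"
}

if [ -r /proc/cpuinfo ]; then
    echo "loaded microcode revision: $(microcode_revision)"
fi
```

Running it before and after a firmware or package update shows whether the loaded revision changed at all.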

Should I try turning off mitigations?

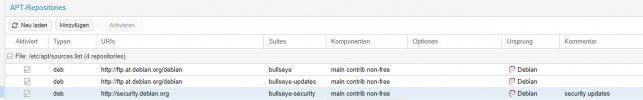

I don't use GRUB; I boot from two NVMe drives in a ZFS mirror. Where should I put that option?

This one?

Systemd-boot

The kernel commandline needs to be placed as one line in /etc/kernel/cmdline. To apply your changes, run proxmox-boot-tool refresh, which sets it as the option line for all config files in loader/entries/proxmox-*.conf.
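For a systemd-boot host like the ZFS mirror setup asked about, the quoted instructions could be scripted roughly as follows. This is a sketch under the assumption that `/etc/kernel/cmdline` holds the whole command line on its first line; the helper name `add_cmdline_param` is illustrative only.

```shell
#!/bin/sh
# Sketch: append a parameter to a single-line kernel command line file.
# add_cmdline_param is a hypothetical helper, not a Proxmox tool.
add_cmdline_param() {
    file="$1"
    param="$2"
    line="$(head -n 1 "$file")"
    # Nothing to do if the parameter is already present as a whole word
    case " $line " in
        *" $param "*) return 0 ;;
    esac
    # Rewrite the file as one line with the parameter appended
    printf '%s %s\n' "$line" "$param" > "$file"
}

# On a real host, as root, this would be used like:
#   add_cmdline_param /etc/kernel/cmdline mitigations=off
#   proxmox-boot-tool refresh
#   reboot
```

As with the GRUB variant, the change only takes effect after `proxmox-boot-tool refresh` and a full host reboot.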

Hi All

Just adding some info that may be somewhat helpful.

I see multiple issues here that are potentially being grouped together.

There are QEMU-specific and Windows-specific issues, and probably others too, that present as the same behaviour but are different issues altogether.

We’ve been running VMware and Proxmox for years and have seen this same behaviour for Windows servers that have been rebooted by the OS.

Haven’t run into this with Unix-based VMs yet.

Same logo screen with spinning balls, stuck indefinitely until the VM is powered down/up.

It doesn’t happen on every reboot, but often after Windows updates and a reboot.

The solution is the same: a power down/up resolves the problem.

Some fixes for VMware refer to changing the Windows VM type (2008 > 2012 > 2016 > 2019, etc.), as there are some OS-specific VM modes.

Even after these changes the issue still presents regularly after Windows updates; again, not on every reboot, but often.

If you perform a Google search for stuck Windows after reboot and add your favourite hypervisor to the search term, you’ll see it’s a common issue for Windows across every hypervisor and even on bare metal.

For those experiencing this with Debian or other OSes, it may be related to QEMU and potentially to the VM’s hardware configuration. Some troubleshooting steps: remove USB devices from the VM under options, change machine types, update kernels, and make sure the QEMU guest tools are installed if available.

We see these issues mostly on Intel CPUs, but they still present on AMD, just less frequently; it could just be a coincidence that it’s presenting less on AMD.

This forum thread covers so many different scenarios that present the same way; a solution presented for one of them doesn’t mean that it will resolve someone else’s problem.

Hopefully the info above will help some readers differentiate their issues from others and look at other options for a fix.

There's an update on the bugtracker which is interesting.

https://bugzilla.proxmox.com/show_bug.cgi?id=3933

Ian 2022-06-07 15:28:16 CEST

We created a snapshot on a VM and we were able to reproduce the problem multiple times and test every reboot option.

- Reboot from Windows Start Menu using RDP (Problem occurred)

- Reboot from Windows Start Menu using noVNC Console (Problem occurred)

- Reboot from Windows CMD using noVNC Console (Problem occurred)

- Reboot from Proxmox WEBUI with HA enabled (Problem did not occur)

The problem seems to occur only when the reboot command is sent from the operating system.

If that holds up, it would point to some additional processes outside the VM (dismounting, Ceph syncing, making sure other processes aren't accessing the VM, etc.) that are performed during a Proxmox-triggered reboot, because presumably Proxmox isn't doing any reboot preparation inside the Windows VM; Windows is likely doing its own built-in things identically in those 4 scenarios.

... me too. Windows receives the restart signal from the QEMU agent or via ACPI, so whether the reboot signal comes from the agent or from inside the VM, what changes?

For Proxmox a VM reboot does not imply closing the QEMU process and opening a new one; the process always remains the same. A stop-start is a different story.
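The stop-start cycle that several posters use as a workaround can be done from the host shell with the `qm` CLI. A minimal sketch, where the function name `power_cycle_vm`, the `QM` override variable, and VM ID 100 are placeholders chosen for illustration:

```shell
#!/bin/sh
# Sketch: hard power cycle of a VM so that a fresh QEMU process is started.
# power_cycle_vm and the QM variable are hypothetical names.
# QM can be overridden (for example with "echo") for a dry run.
QM="${QM:-qm}"

power_cycle_vm() {
    vmid="$1"
    # "qm stop" ends the QEMU process without waiting for the guest;
    # "qm start" then launches a new process for the same VM.
    "$QM" stop "$vmid" && "$QM" start "$vmid"
}

# On a Proxmox host, as root, for a VM stuck at the boot logo:
#   power_cycle_vm 100
```

Note that `qm stop` is comparable to pulling the power cord, so it is best reserved for guests that are already hung.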

mmmm I've never seen that error... and I'm talking about a good number of clusters, while the spinning-balls problem when restarting VMs occurs systematically.

mitigations=off does not fix "KVM: entry failed, hardware error 0x80000021"

I personally think that this problem (KVM: entry failed, hardware error 0x80000021) is related to the specific machine and its firmware. I use more than 14 Lenovo servers, UEFI firmware as of February 2022 and I've never seen this problem.

As mentioned before, however, the problem under discussion (spinning balls on reboot) happens everywhere.

Did you actually reboot into a 5.15 family kernel yet? (See the output of `uname -a`.) If not, you are not affected until you reboot.
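A quick way to answer that question on each node is to look at the release string of the running kernel. The sketch below uses `uname -r`; the helper name `kernel_family` is only illustrative.

```shell
#!/bin/sh
# Sketch: reduce a kernel release string to its major.minor family,
# e.g. "5.15.35-1-pve" becomes "5.15".
# kernel_family is a hypothetical helper name.
kernel_family() {
    printf '%s\n' "$1" | cut -d. -f1,2
}

running="$(uname -r)"
echo "running kernel: $running (family $(kernel_family "$running"))"
```

If the family printed here is still 5.13 after installing a 5.15 kernel package, the host has not been rebooted into the new kernel yet.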
