Hi guys,

In my free time I have been thinking about how to extend our cloud with new storage resources. At the moment our storage is based on Ceph, with SSD-only OSDs.

I have been reading the Ceph docs and I can say it's possible; I even have a plan. The problem is that I have no idea whether the actions I want to take are safe. I recently took over this type of work. I read the Red Hat docs, but we have a pretty generic configuration and I want to do this the right way. I would be happy if somebody could take a look and comment on it a bit. I am not sure the current configuration is suitable for expanding the way I want...

My current configuration:

8 nodes:

4 nodes have 6x 2 TB SATA SSDs

4 nodes have 4x 2 TB SATA SSDs

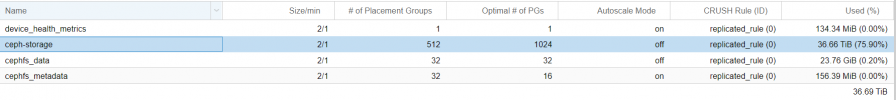

I already have one pool that uses the rule replicated_rule. The problem is that I am not sure whether this rule applies only to SSDs or to any other device type as well, and how to make it work with the new nodes, because I want to create a new pool from the new nodes.

The new nodes will be:

3 nodes, each with 10x 6 TB drives.

I want to create a new pool that uses only the HDD OSDs. I believe this will require the following changes:

rule: replicated_rule_hdd_6TB - will be used only by the new pool and will select only OSDs from the 3 new nodes.

root: hdd_6TB - will contain only those 3 nodes.

new pool: cephfs_hdd_6tb, which will use the new rule.
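A rough sketch of how this plan might translate into CLI steps, written as a Python dry run that only builds and prints the commands without executing anything. The host names pve9, pve10 and pve11 are placeholders and not taken from the real cluster, the PG count of 1024 is just the value from my own estimate, and the order of steps is an assumption that should be checked against the Ceph docs before running anything.

```python
# Dry-run sketch: builds the ceph commands as strings and prints them.
# Nothing is executed against the cluster.

NEW_HOSTS = ["pve9", "pve10", "pve11"]  # hypothetical names for the 3 new HDD nodes
ROOT = "hdd_6TB"                        # planned CRUSH root
RULE = "replicated_rule_hdd_6TB"        # planned CRUSH rule
POOL = "cephfs_hdd_6tb"                 # planned pool
PG_NUM = 1024                           # assumed value, see the PG estimate
REPLICAS = 2                            # planned number of replicas


def build_plan(hosts, root, rule, pool, pg_num, replicas):
    """Return the planned ceph commands in order, as plain strings."""
    # Create a separate CRUSH root for the HDD nodes.
    cmds = [f"ceph osd crush add-bucket {root} root"]
    # Move each new host bucket under that root
    # (assumes the host buckets already exist in the CRUSH map).
    cmds += [f"ceph osd crush move {host} root={root}" for host in hosts]
    # Replicated rule that starts at the new root, failure domain = host.
    cmds.append(f"ceph osd crush rule create-replicated {rule} {root} host")
    # New pool bound to the new rule, then set its replica count.
    cmds.append(f"ceph osd pool create {pool} {pg_num} {pg_num} replicated {rule}")
    cmds.append(f"ceph osd pool set {pool} size {replicas}")
    return cmds


plan = build_plan(NEW_HOSTS, ROOT, RULE, POOL, PG_NUM, REPLICAS)
for cmd in plan:
    print(cmd)
```

The idea of printing first is that the list can be reviewed (and corrected by somebody with more experience) before any command touches the cluster.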

As PG autoscaling is not enabled at the moment, should I enable it for the new pool, or increase the PG count manually when adding new OSDs?

If my counting is right, with 2 replicas on the new nodes:

(30 OSDs * 100 target PGs per OSD) / 2 replicas = 1500. The formula gives 1500, but since a power of two is recommended, I would use 1024.
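To double-check the arithmetic, here is a small Python sketch. The function name pg_estimate is made up for illustration; it only evaluates the formula and shows the powers of two on either side of the result, without deciding which of the two is the better choice.

```python
def pg_estimate(osd_count, target_per_osd, replicas):
    """Return (raw PG estimate, nearest lower power of two, nearest higher power of two)."""
    raw = osd_count * target_per_osd / replicas
    # Largest power of two that does not exceed the raw estimate.
    lower = 1
    while lower * 2 <= raw:
        lower *= 2
    # Smallest power of two that is not below the raw estimate.
    upper = lower if lower == raw else lower * 2
    return raw, lower, upper


# 30 OSDs (3 nodes x 10 drives), 100 target PGs per OSD, 2 replicas
raw, lower, upper = pg_estimate(30, 100, 2)
print(raw, lower, upper)
```

So 1500 sits between 1024 and 2048; which of the two is the right pick for this pool is part of what I am asking.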

Problems and considerations:

I do not want to touch the current configuration, and I want to make sure the change will be safe.

I did not find any information on this, but will live migration still work?

*Important - I want to add 2 SSDs per node to the HDD nodes. Each machine has 10x 6 TB drives and 2 spare slots (bays), so what would be the best SSD size for the Ceph journal? And how do I make that work with the current configuration?

** About adding the new rule and pool: I believe adding the new OSDs won't be possible via the web interface, and any future additions will have to be done via the CLI only?

If you guys have any comments, that would be great.

Software versions and full Ceph configuration:

Proxmox version: Virtual Environment 6.4

Ceph version : 15.2.15

Cluster of 8 nodes.

Ceph configuration:

[Global]

cluster_network = XXX.XXX.XXX.XXX/XX

fsid = XXXXXXXXXXXXXXXXXXXX

mon_allow_pool_delete = true

mon_host = XXX.XXX.XXX.XXX XXX.XXX.XXX.XXX XXX.XXX.XXX.XXX XXX.XXX.XXX.XXX

osd_pool_default_min_size = 1

osd_pool_default_size = 2

public_network = XXX.XXX.XXX.XXX/XX

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable chooseleaf_vary_r 1

tunable chooseleaf_stable 1

tunable straw_calc_version 1

tunable allowed_bucket_algs 54

# devices

device 0 osd.0 class ssd

device 1 osd.1 class ssd

device 2 osd.2 class ssd

device 3 osd.3 class ssd

device 4 osd.4 class ssd

device 5 osd.5 class ssd

device 6 osd.6 class ssd

device 7 osd.7 class ssd

device 8 osd.8 class ssd

device 9 osd.9 class ssd

device 10 osd.10 class ssd

device 11 osd.11 class ssd

device 12 osd.12 class ssd

device 13 osd.13 class ssd

device 14 osd.14 class ssd

device 15 osd.15 class ssd

device 16 osd.16 class ssd

device 17 osd.17 class ssd

device 18 osd.18 class ssd

device 19 osd.19 class ssd

device 20 osd.20 class ssd

device 21 osd.21 class ssd

device 22 osd.22 class ssd

device 23 osd.23 class ssd

device 24 osd.24 class ssd

device 25 osd.25 class ssd

device 26 osd.26 class ssd

device 27 osd.27 class ssd

device 28 osd.28 class ssd

device 29 osd.29 class ssd

device 30 osd.30 class ssd

device 31 osd.31 class ssd

device 32 osd.32 class ssd

device 33 osd.33 class ssd

device 34 osd.34 class ssd

device 35 osd.35 class ssd

device 36 osd.36 class ssd

device 37 osd.37 class ssd

device 38 osd.38 class ssd

device 39 osd.39 class ssd

# types

type 0 osd

type 1 host

type 2 chassis

type 3 rack

type 4 row

type 5 pdu

type 6 pod

type 7 room

type 8 datacenter

type 9 zone

type 10 region

type 11 root

# buckets

host pve1 {

id -3 # do not change unnecessarily

id -4 class ssd # do not change unnecessarily

# weight 7.278

alg straw2

hash 0 # rjenkins1

item osd.0 weight 1.819

item osd.1 weight 1.819

item osd.2 weight 1.819

item osd.3 weight 1.819

}

host pve2 {

id -5 # do not change unnecessarily

id -6 class ssd # do not change unnecessarily

# weight 7.278

alg straw2

hash 0 # rjenkins1

item osd.5 weight 1.819

item osd.6 weight 1.819

item osd.7 weight 1.819

item osd.4 weight 1.819

}

host pve3 {

id -7 # do not change unnecessarily

id -8 class ssd # do not change unnecessarily

# weight 7.278

alg straw2

hash 0 # rjenkins1

item osd.8 weight 1.819

item osd.9 weight 1.819

item osd.10 weight 1.819

item osd.11 weight 1.819

}

host pve4 {

id -9 # do not change unnecessarily

id -10 class ssd # do not change unnecessarily

# weight 7.278

alg straw2

hash 0 # rjenkins1

item osd.12 weight 1.819

item osd.13 weight 1.819

item osd.14 weight 1.819

item osd.15 weight 1.819

}

host pve5 {

id -11 # do not change unnecessarily

id -12 class ssd # do not change unnecessarily

# weight 10.916

alg straw2

hash 0 # rjenkins1

item osd.16 weight 1.819

item osd.17 weight 1.819

item osd.18 weight 1.819

item osd.19 weight 1.819

item osd.24 weight 1.819

item osd.25 weight 1.819

}

host pve6 {

id -13 # do not change unnecessarily

id -14 class ssd # do not change unnecessarily

# weight 10.916

alg straw2

hash 0 # rjenkins1

item osd.20 weight 1.819

item osd.21 weight 1.819

item osd.22 weight 1.819

item osd.23 weight 1.819

item osd.26 weight 1.819

item osd.27 weight 1.819

}

host pve7 {

id -15 # do not change unnecessarily

id -16 class ssd # do not change unnecessarily

# weight 10.916

alg straw2

hash 0 # rjenkins1

item osd.28 weight 1.819

item osd.29 weight 1.819

item osd.30 weight 1.819

item osd.31 weight 1.819

item osd.32 weight 1.819

item osd.33 weight 1.819

}

host pve8 {

id -17 # do not change unnecessarily

id -18 class ssd # do not change unnecessarily

# weight 10.916

alg straw2

hash 0 # rjenkins1

item osd.34 weight 1.819

item osd.35 weight 1.819

item osd.36 weight 1.819

item osd.37 weight 1.819

item osd.38 weight 1.819

item osd.39 weight 1.819

}

root default {

id -1 # do not change unnecessarily

id -2 class ssd # do not change unnecessarily

# weight 72.776

alg straw2

hash 0 # rjenkins1

item pve1 weight 7.278

item pve2 weight 7.278

item pve3 weight 7.278

item pve4 weight 7.278

item pve5 weight 10.916

item pve6 weight 10.916

item pve7 weight 10.916

item pve8 weight 10.916

}

# rules

rule replicated_rule {

id 0

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 0 type host

step emit

}

# end crush map
