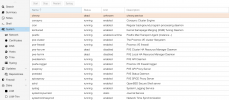

> I understand the confusion... I got the status:
>
> apt(8) now waits for the lock indefinitely if connected to a tty, or for 120 seconds if not.
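The quoted line describes a lock-wait policy: when apt finds the package-manager lock already held, it keeps retrying, either forever (interactive terminal) or for at most 120 seconds. Below is a minimal Python sketch of that policy for illustration only. It is not apt's code. It assumes a POSIX `fcntl` lock on a lock-file path of your choice and assumes the "connected to a tty" check looks at stdin. `lock_timeout` and `acquire_lock` are hypothetical names.

```python
import errno
import fcntl
import os
import sys
import time
from typing import Optional


def lock_timeout() -> Optional[float]:
    """Quoted policy: wait forever on a tty, 120 seconds otherwise.

    Assumption: the tty check is done on stdin; apt's real check may differ.
    """
    return None if sys.stdin.isatty() else 120.0


def acquire_lock(path: str, timeout: Optional[float], poll: float = 1.0) -> int:
    """Take an exclusive fcntl lock on path, retrying until timeout (None = forever).

    Returns the open file descriptor; the lock is held until it is closed.
    """
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o640)
    deadline = None if timeout is None else time.monotonic() + timeout
    while True:
        try:
            # Non-blocking attempt, so we can enforce our own deadline.
            fcntl.lockf(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
            return fd
        except OSError as exc:
            # EACCES/EAGAIN mean "held by another process"; anything else is a real error.
            if exc.errno not in (errno.EACCES, errno.EAGAIN):
                os.close(fd)
                raise
            if deadline is not None and time.monotonic() >= deadline:
                os.close(fd)
                raise TimeoutError(f"could not get lock {path} within {timeout} s")
            time.sleep(poll)
```

For example, `acquire_lock("/tmp/demo.lock", lock_timeout())` returns immediately if nobody else holds the lock. Otherwise it blocks, either indefinitely in a terminal or until a `TimeoutError` after 120 seconds when run non-interactively.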

As far as I can see, this is not a message or instruction from apt, but rather the changelogs of all upgraded packages being displayed in a pager (`less(1)`).

Try pressing `q` to exit less; the upgrade should then proceed.
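Why the upgrade seems to stop at this point: a program that hands text to a pager typically waits for the pager process to exit before it continues. The following minimal Python sketch illustrates that. `show_in_pager` is a hypothetical helper, and the tool that actually shows changelogs during an upgrade (presumably apt-listchanges on Debian-based systems) does its own, different pager handling.

```python
import os
import shlex
import shutil
import subprocess


def show_in_pager(text: str) -> int:
    """Pipe text to $PAGER (default: less, falling back to cat) and wait for it to exit."""
    cmd = shlex.split(os.environ.get("PAGER", "less"))
    if not cmd or shutil.which(cmd[0]) is None:
        cmd = ["cat"]  # no usable pager: just print the text
    # subprocess.run() blocks until the pager exits. With less on a terminal,
    # that only happens once the user presses 'q', so the calling program
    # (in the upgrade scenario, the package manager) appears to hang until then.
    result = subprocess.run(cmd, input=text, text=True)
    return result.returncode
```

With `PAGER=cat` the helper prints the text and returns at once, so the caller continues without waiting for anyone to press `q`.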

However, since I'm not sure whether this is the GUI's virtual console: make sure to run the upgrade via SSH (or a direct console/IPMI), as the upgrade guide says:

> Perform the actions via console or ssh; preferably via console to avoid interrupted ssh connections. Do not carry out the upgrade when connected via the virtual console offered by the GUI; as this will get interrupted during the upgrade.

https://pve.proxmox.com/wiki/Upgrade_from_6.x_to_7.0#Actions_step-by-step

I hope this helps!