Hi,

I switched my storage server from serving NFSv2, v3 and v4 to NFSv4 only. Now no NFS service is registered with rpcbind (which, as far as I understand, is only used by NFSv2/v3).

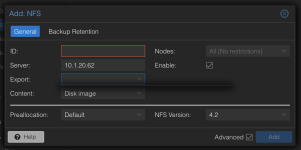

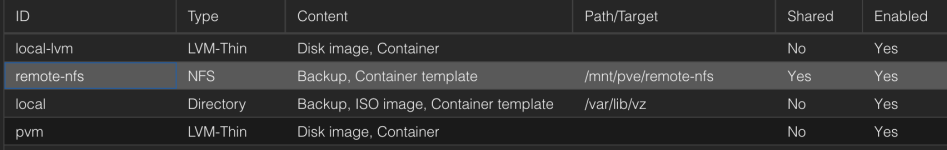

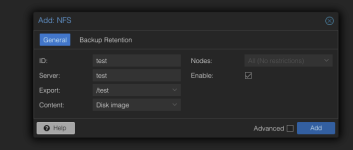

My Proxmox servers can no longer access the share. I tried removing the storage entry for my server under the storage section and re-adding it, but that fails.

I tried:

```
pvesm nfsscan 10.10.10.199
clnt_create: RPC: Program not registered
command '/sbin/showmount --no-headers --exports 10.10.10.199' failed: exit code 1
```

Here I believe the problem is that `showmount` only works with NFSv2/v3, not with NFSv4, because it asks rpcbind for the MOUNT service (mountd), which NFSv4 does not use.
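The `Program not registered` error suggests that rpcbind did answer but has no MOUNT service registered. To check the NFSv4 side separately, a quick TCP probe of the two well-known ports can help. This is a minimal Python sketch that assumes the server uses the default ports (111 for rpcbind, 2049 for NFS); the script name `nfs_probe.py` is hypothetical. An open port only means something is listening. It does not prove that the export is allowed for this client.

```python
from __future__ import annotations

import socket
import sys

# Well-known default ports (assumption: the NAS does not use custom ports).
RPCBIND_PORT = 111   # rpcbind/portmapper, consulted by showmount (NFSv2/v3 tooling)
NFS_PORT = 2049      # the NFS service itself; the only port an NFSv4 client needs


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False


def probe_nfs_server(host: str, timeout: float = 3.0) -> dict[str, bool]:
    """Report whether the rpcbind and NFS ports accept TCP connections."""
    return {
        "rpcbind (111)": tcp_port_open(host, RPCBIND_PORT, timeout),
        "nfs (2049)": tcp_port_open(host, NFS_PORT, timeout),
    }


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: nfs_probe.py <server-ip>")
    else:
        for name, is_open in probe_nfs_server(sys.argv[1]).items():
            print(f"{name}: {'open' if is_open else 'closed or unreachable'}")
```

Run it as `python3 nfs_probe.py 10.10.10.199`. On an NFSv4-only server, 2049 should be open even though `showmount` fails.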

I believe one solution would be to skip `showmount` when the user specifies NFSv4, like here:

and instead run the mount command right away.
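A rough sketch of that idea, written in Python purely for illustration. Proxmox's storage plugins are written in Perl, and the function names `wants_nfs4` and `export_check_command` are hypothetical. The check is skipped only when the mount options explicitly pin version 4 (`vers=4`, `vers=4.1`, `nfsvers=4.2`, ...). Otherwise the same `showmount` call as in the error above is used.

```python
from __future__ import annotations

# The pre-mount check seen in the error message above.
SHOWMOUNT_CMD = ["/sbin/showmount", "--no-headers", "--exports"]


def wants_nfs4(mount_options: str | None) -> bool:
    """True if the comma-separated NFS mount options pin protocol version 4.x."""
    if not mount_options:
        return False
    for opt in mount_options.split(","):
        key, _, value = opt.strip().partition("=")
        # Accept both spellings of the version option and any 4.x minor version.
        if key in ("vers", "nfsvers") and value.split(".")[0] == "4":
            return True
    return False


def export_check_command(server: str, mount_options: str | None) -> list[str] | None:
    """Return the export check to run before mounting, or None to mount directly."""
    if wants_nfs4(mount_options):
        # NFSv4 has no MOUNT protocol, so there is nothing for showmount to query.
        return None
    return SHOWMOUNT_CMD + [server]
```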

Is there also a dirty temporary fix to add an NFS share manually with some commands? (I could also try replacing `/sbin/showmount` with `/bin/true` or some crap like that.)
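One possible manual route, sketched under assumptions: define the storage directly in `/etc/pve/storage.cfg` with `options vers=4.2`, so the mount is pinned to NFSv4. The Python below only prints such an `nfs:` section. It uses the keys `path`, `server`, `export`, `options` and `content` from the Proxmox storage documentation. The storage ID `nas-nfs4` and the export `/mnt/tank/share` are hypothetical placeholders, and `4.2` should be whatever minor version the NAS supports. Whether a given Proxmox VE version still runs `showmount` when it activates a storage defined this way is not verified here.

```python
def nfs_storage_stanza(storage_id: str, server: str, export: str,
                       content: str = "images,iso", nfs_vers: str = "4.2") -> str:
    """Render an 'nfs:' section in the /etc/pve/storage.cfg layout (tab-indented keys)."""
    lines = [
        f"nfs: {storage_id}",
        f"\tpath /mnt/pve/{storage_id}",   # default mount point for NFS storages
        f"\tserver {server}",
        f"\texport {export}",
        f"\toptions vers={nfs_vers}",      # passed to mount; pins the NFS version
        f"\tcontent {content}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical values: replace with your own storage ID and export path.
    print(nfs_storage_stanza("nas-nfs4", "10.10.10.199", "/mnt/tank/share"), end="")
```

Review the printed section, then append it to `/etc/pve/storage.cfg` by hand.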