OK, I have done some more tests: using ZFS on Proxmox, I'm getting a high IO delay. Just to recap, here is my hardware config:

CPU: Intel Core i5-7400

RAM: 32GB

Network: 2x Intel Gigabit

The config I used for the test is not the final one. In the final setup, I'll have 2 or 3 WD Red 4TB disks in RAID1 or RAIDZ, plus a Samsung 840 EVO 256GB for SLOG/L2ARC.
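To compare the two candidate layouts, here is a rough usable-capacity estimate for 2 or 3 × 4TB disks. It is a sketch under stated assumptions, not what `zpool list` will report: "RAIDZ" is taken to mean RAIDZ1 (single parity), all disks are the same size, about 1/32 of the space is held back as slop (from `spa_slop_shift 5` in the tunables below), and RAIDZ padding, metadata and compression are ignored, so real figures will be somewhat lower.

```python
TB = 10**12  # drive vendors quote decimal terabytes


def usable_bytes(layout: str, disks: int, disk_bytes: int, slop_shift: int = 5) -> int:
    """Rough usable capacity of a pool with a single mirror or RAIDZ1 vdev.

    Assumptions: equal-size disks, RAIDZ means single parity, and RAIDZ
    padding, metadata and compression are ignored.
    """
    if disks < 2:
        raise ValueError("need at least 2 disks")
    if layout == "mirror":
        data_disks = 1  # every disk holds a full copy of the data
    elif layout == "raidz1":
        data_disks = disks - 1  # one disk's worth of parity
    else:
        raise ValueError(f"unknown layout: {layout}")
    raw = data_disks * disk_bytes
    # ZFS holds back roughly raw >> spa_slop_shift as slop space (1/32 for shift 5).
    return raw - (raw >> slop_shift)


if __name__ == "__main__":
    for layout, disks in [("mirror", 2), ("mirror", 3), ("raidz1", 3)]:
        size = usable_bytes(layout, disks, 4 * TB)
        print(f"{layout} of {disks} x 4TB: ~{size / 2**40:.2f} TiB usable")
```

A third mirror disk adds redundancy and read throughput, not space, while RAIDZ1 over the same three disks roughly doubles usable space at the cost of parity writes.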

For the test, I'm using these disks:

Hard disk: 1TB SATA 7200 RPM

SSD: Intel SSD 520 180GB

I created two zpools:

Name: proxmox

Disk: 1TB SATA 7200RPM

Options: compression=lz4, sync=disabled, atime=off, ashift=12

Dataset: 8k

Name: ssd

Disk: Intel SSD 520 180GB

Options: compression=lz4, sync=disabled, atime=off

Dataset: 8k

I created two ZVOLs (one for each zpool) and attached them to a VM. The VM runs XPEnology (Synology) and uses btrfs on top of each zvol.
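For anyone who wants to reproduce the test layout, this sketch prints the `zpool`/`zfs` commands that would create the two pools and one zvol in each. Assumptions: the device paths, zvol names and the 32G size are hypothetical placeholders; "Dataset: 8k" is read as `volblocksize=8k`; and the `ssd` pool gets no explicit `ashift`, because none was listed for it. It is a dry run and only prints the commands.

```python
import shlex
from typing import List, Optional

# Hypothetical device paths: replace with real /dev/disk/by-id/... entries.
HDD = "/dev/disk/by-id/ata-EXAMPLE-HDD-1TB"
SSD = "/dev/disk/by-id/ata-EXAMPLE-INTEL-520"

# Dataset properties used on both test pools.
COMMON_PROPS = {"compression": "lz4", "sync": "disabled", "atime": "off"}


def create_pool(name: str, device: str, ashift: Optional[int] = None) -> List[str]:
    cmd = ["zpool", "create"]
    if ashift is not None:
        cmd += ["-o", f"ashift={ashift}"]  # fixed when the vdev is created
    for key, value in COMMON_PROPS.items():
        cmd += ["-O", f"{key}={value}"]  # set on the root dataset, inherited by children
    return cmd + [name, device]


def create_zvol(pool: str, name: str, size: str, volblocksize: str = "8k") -> List[str]:
    # volblocksize can only be chosen when the zvol is created
    return ["zfs", "create", "-V", size, "-o", f"volblocksize={volblocksize}", f"{pool}/{name}"]


def test_layout() -> List[List[str]]:
    return [
        create_pool("proxmox", HDD, ashift=12),
        create_pool("ssd", SSD),  # no ashift was listed for this pool
        create_zvol("proxmox", "vm-test-disk-hdd", "32G"),  # hypothetical name and size
        create_zvol("ssd", "vm-test-disk-ssd", "32G"),  # hypothetical name and size
    ]


if __name__ == "__main__":
    for cmd in test_layout():
        print(shlex.join(cmd))  # dry run: print only, never execute
```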

Then I created two SMB shared folders, one for each zvol/zpool.

For the test, I used 14 files (movies) totalling 64GB, copying them over SMB from another XPEnology VM on the same server (not on ZFS).

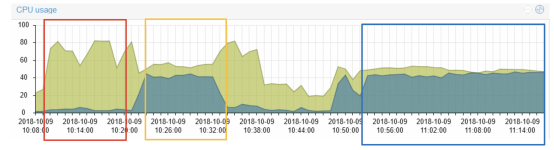

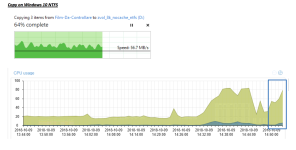

I copied the files to the SSD and to the HDD, as you can see in the following images:

(Attached screenshot: Screen Shot 2018-10-09 at 12.01.50.png)

Red: SSD copy, no IO delay

Yellow: HDD copy, high IO delay
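To put a number on "IO delay": as far as I know, the Proxmox graph shows the share of CPU time spent in iowait, i.e. CPUs sitting idle while disk I/O is outstanding, not CPU work. Assuming that, this sketch computes the same percentage from two samples of the aggregate `cpu` line in Linux `/proc/stat` (fields: user, nice, system, idle, iowait, irq, softirq, steal). The function names are my own.

```python
import time
from typing import List


def cpu_times(stat_line: str) -> List[int]:
    """Parse the aggregate 'cpu' line of /proc/stat.

    Keeps user, nice, system, idle, iowait, irq, softirq, steal. Guest time
    is already counted inside user/nice, so those trailing fields are skipped.
    """
    fields = stat_line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(v) for v in fields[1:9]]


def iowait_percent(before: str, after: str) -> float:
    deltas = [b - a for a, b in zip(cpu_times(before), cpu_times(after))]
    total = sum(deltas)
    return 100.0 * deltas[4] / total if total else 0.0  # index 4 = iowait


def sample_iowait(interval: float = 1.0) -> float:
    """Live sample on a Linux host, e.g. the Proxmox node itself."""
    def read_cpu_line() -> str:
        with open("/proc/stat") as f:
            return f.readline()  # the first line is the aggregate 'cpu' line

    first = read_cpu_line()
    time.sleep(interval)
    return iowait_percent(first, read_cpu_line())


if __name__ == "__main__":
    # Two made-up samples: 250 of 1000 elapsed ticks were spent in iowait.
    t0 = "cpu  100 0 50 800 50 0 0 0 0 0"
    t1 = "cpu  200 0 100 1400 300 0 0 0 0 0"
    print(f"iowait: {iowait_percent(t0, t1):.1f}%")
```

Running `sample_iowait()` on the host during the HDD copy versus the SSD copy would show whether the graph difference is iowait rather than CPU load.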

Then I added the SSD as a log device to the first pool and enabled sync: same behavior, as you can see from the blue test.
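The blue-run change boils down to two commands; this sketch only prints them. Assumptions: the log device path is a hypothetical placeholder (the SLOG needs a device or partition not already used by the `ssd` pool), and "enabled the sync" is taken to mean returning to the default `sync=standard`; `sync=always` would instead send every write through the ZIL/SLOG.

```python
import shlex
from typing import List

# Hypothetical: a free SSD partition to use as the separate intent log (SLOG).
LOG_DEVICE = "/dev/disk/by-id/ata-EXAMPLE-INTEL-520-part1"


def add_slog_and_enable_sync(pool: str, log_device: str, sync: str = "standard") -> List[List[str]]:
    if sync not in ("standard", "always", "disabled"):
        raise ValueError(f"invalid sync value: {sync}")
    return [
        ["zpool", "add", pool, "log", log_device],  # attach the SSD as a log vdev
        ["zfs", "set", f"sync={sync}", pool],  # inherited by the pool's zvols unless overridden
    ]


if __name__ == "__main__":
    for cmd in add_slog_and_enable_sync("proxmox", LOG_DEVICE):
        print(shlex.join(cmd))  # dry run only
```

Note that a SLOG only absorbs synchronous writes; asynchronous writes are still buffered in RAM and flushed to the pool's data disks.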

Basically, the server doesn't seem to be out of resources, so what could it be?

Here is the ZFS ARC info:

```
# arc_summary

------------------------------------------------------------------------
ZFS Subsystem Report Tue Oct 09 10:25:34 2018
ARC Summary: (HEALTHY)
    Memory Throttle Count: 0

ARC Misc:
    Deleted: 96.38M
    Mutex Misses: 28.56k
    Evict Skips: 957.97k

ARC Size: 10.09% 1.58 GiB
    Target Size: (Adaptive) 10.69% 1.67 GiB
    Min Size (Hard Limit): 6.25% 1001.74 MiB
    Max Size (High Water): 16:1 15.65 GiB

ARC Size Breakdown:
    Recently Used Cache Size: 99.81% 1.14 GiB
    Frequently Used Cache Size: 0.19% 2.27 MiB

ARC Hash Breakdown:
    Elements Max: 2.06M
    Elements Current: 9.60% 197.96k
    Collisions: 20.88M
    Chain Max: 7
    Chains: 8.56k

ARC Total accesses: 71.39M
    Cache Hit Ratio: 92.45% 66.00M
    Cache Miss Ratio: 7.55% 5.39M
    Actual Hit Ratio: 92.34% 65.92M

    Data Demand Efficiency: 63.38% 13.40M
    Data Prefetch Efficiency: 26.91% 343.35k

    CACHE HITS BY CACHE LIST:
      Most Recently Used: 24.29% 16.03M
      Most Frequently Used: 75.59% 49.90M
      Most Recently Used Ghost: 0.16% 106.13k
      Most Frequently Used Ghost: 0.10% 67.05k

    CACHE HITS BY DATA TYPE:
      Demand Data: 12.87% 8.49M
      Prefetch Data: 0.14% 92.39k
      Demand Metadata: 86.97% 57.41M
      Prefetch Metadata: 0.02% 13.30k

    CACHE MISSES BY DATA TYPE:
      Demand Data: 91.01% 4.91M
      Prefetch Data: 4.65% 250.96k
      Demand Metadata: 4.09% 220.55k
      Prefetch Metadata: 0.25% 13.38k

DMU Prefetch Efficiency: 85.74M
    Hit Ratio: 7.03% 6.03M
    Miss Ratio: 92.97% 79.71M

ZFS Tunables:
    dbuf_cache_hiwater_pct 10
    dbuf_cache_lowater_pct 10
    dbuf_cache_max_bytes 104857600
    dbuf_cache_max_shift 5
    dmu_object_alloc_chunk_shift 7
    ignore_hole_birth 1
    l2arc_feed_again 1
    l2arc_feed_min_ms 200
    l2arc_feed_secs 1
    l2arc_headroom 2
    l2arc_headroom_boost 200
    l2arc_noprefetch 1
    l2arc_norw 0
    l2arc_write_boost 8388608
    l2arc_write_max 8388608
    metaslab_aliquot 524288
    metaslab_bias_enabled 1
    metaslab_debug_load 0
    metaslab_debug_unload 0
    metaslab_fragmentation_factor_enabled 1
    metaslab_lba_weighting_enabled 1
    metaslab_preload_enabled 1
    metaslabs_per_vdev 200
    send_holes_without_birth_time 1
    spa_asize_inflation 24
    spa_config_path /etc/zfs/zpool.cache
    spa_load_verify_data 1
    spa_load_verify_maxinflight 10000
    spa_load_verify_metadata 1
    spa_slop_shift 5
    zfetch_array_rd_sz 1048576
    zfetch_max_distance 8388608
    zfetch_max_streams 8
    zfetch_min_sec_reap 2
    zfs_abd_scatter_enabled 1
    zfs_abd_scatter_max_order 10
    zfs_admin_snapshot 1
    zfs_arc_average_blocksize 8192
    zfs_arc_dnode_limit 0
    zfs_arc_dnode_limit_percent 10
    zfs_arc_dnode_reduce_percent 10
    zfs_arc_grow_retry 0
    zfs_arc_lotsfree_percent 10
    zfs_arc_max 0
    zfs_arc_meta_adjust_restarts 4096
    zfs_arc_meta_limit 0
    zfs_arc_meta_limit_percent 75
    zfs_arc_meta_min 0
    zfs_arc_meta_prune 10000
    zfs_arc_meta_strategy 1
    zfs_arc_min 0
    zfs_arc_min_prefetch_lifespan 0
    zfs_arc_p_dampener_disable 1
    zfs_arc_p_min_shift 0
    zfs_arc_pc_percent 0
    zfs_arc_shrink_shift 0
    zfs_arc_sys_free 0
    zfs_autoimport_disable 1
    zfs_checksums_per_second 20
    zfs_compressed_arc_enabled 1
    zfs_dbgmsg_enable 0
    zfs_dbgmsg_maxsize 4194304
    zfs_dbuf_state_index 0
    zfs_deadman_checktime_ms 5000
    zfs_deadman_enabled 1
    zfs_deadman_synctime_ms 1000000
    zfs_dedup_prefetch 0
    zfs_delay_min_dirty_percent 60
    zfs_delay_scale 500000
    zfs_delays_per_second 20
    zfs_delete_blocks 20480
    zfs_dirty_data_max 3361280819
    zfs_dirty_data_max_max 4294967296
    zfs_dirty_data_max_max_percent 25
    zfs_dirty_data_max_percent 10
    zfs_dirty_data_sync 67108864
    zfs_dmu_offset_next_sync 0
    zfs_expire_snapshot 300
    zfs_flags 0
    zfs_free_bpobj_enabled 1
    zfs_free_leak_on_eio 0
    zfs_free_max_blocks 100000
    zfs_free_min_time_ms 1000
    zfs_immediate_write_sz 32768
    zfs_max_recordsize 1048576
    zfs_mdcomp_disable 0
    zfs_metaslab_fragmentation_threshold 70
    zfs_metaslab_segment_weight_enabled 1
    zfs_metaslab_switch_threshold 2
    zfs_mg_fragmentation_threshold 85
    zfs_mg_noalloc_threshold 0
    zfs_multihost_fail_intervals 5
    zfs_multihost_history 0
    zfs_multihost_import_intervals 10
    zfs_multihost_interval 1000
    zfs_multilist_num_sublists 0
    zfs_no_scrub_io 0
    zfs_no_scrub_prefetch 0
    zfs_nocacheflush 0
    zfs_nopwrite_enabled 1
    zfs_object_mutex_size 64
    zfs_pd_bytes_max 52428800
    zfs_per_txg_dirty_frees_percent 30
    zfs_prefetch_disable 0
    zfs_read_chunk_size 1048576
    zfs_read_history 0
    zfs_read_history_hits 0
    zfs_recover 0
    zfs_recv_queue_length 16777216
    zfs_resilver_delay 2
    zfs_resilver_min_time_ms 3000
    zfs_scan_idle 50
    zfs_scan_ignore_errors 0
    zfs_scan_min_time_ms 1000
    zfs_scrub_delay 4
    zfs_send_corrupt_data 0
    zfs_send_queue_length 16777216
    zfs_sync_pass_deferred_free 2
    zfs_sync_pass_dont_compress 5
    zfs_sync_pass_rewrite 2
    zfs_sync_taskq_batch_pct 75
    zfs_top_maxinflight 32
    zfs_txg_history 0
    zfs_txg_timeout 5
    zfs_vdev_aggregation_limit 131072
    zfs_vdev_async_read_max_active 3
    zfs_vdev_async_read_min_active 1
    zfs_vdev_async_write_active_max_dirty_percent 60
    zfs_vdev_async_write_active_min_dirty_percent 30
    zfs_vdev_async_write_max_active 10
    zfs_vdev_async_write_min_active 2
    zfs_vdev_cache_bshift 16
    zfs_vdev_cache_max 16384
    zfs_vdev_cache_size 0
    zfs_vdev_max_active 1000
    zfs_vdev_mirror_non_rotating_inc 0
    zfs_vdev_mirror_non_rotating_seek_inc 1
    zfs_vdev_mirror_rotating_inc 0
    zfs_vdev_mirror_rotating_seek_inc 5
    zfs_vdev_mirror_rotating_seek_offset 1048576
    zfs_vdev_queue_depth_pct 1000
    zfs_vdev_raidz_impl [fastest] original scalar sse2 ssse3 avx2
    zfs_vdev_read_gap_limit 32768
    zfs_vdev_scheduler noop
    zfs_vdev_scrub_max_active 2
    zfs_vdev_scrub_min_active 1
    zfs_vdev_sync_read_max_active 10
    zfs_vdev_sync_read_min_active 10
    zfs_vdev_sync_write_max_active 10
    zfs_vdev_sync_write_min_active 10
    zfs_vdev_write_gap_limit 4096
    zfs_zevent_cols 80
    zfs_zevent_console 0
    zfs_zevent_len_max 64
    zil_replay_disable 0
    zil_slog_bulk 786432
    zio_delay_max 30000
    zio_dva_throttle_enabled 1
    zio_requeue_io_start_cut_in_line 1
    zio_taskq_batch_pct 75
    zvol_inhibit_dev 0
    zvol_major 230
    zvol_max_discard_blocks 16384
    zvol_prefetch_bytes 131072
    zvol_request_sync 0
    zvol_threads 32
    zvol_volmode 1
```
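Several of the numbers above follow from how much RAM ZFS sees. This sketch reproduces them under one assumption: that ZFS sees about 33,612,808,190 bytes (~31.3 GiB), back-calculated from `zfs_dirty_data_max` being 10% of memory. The formulas mirror, as I understand them, the ZFS on Linux 0.7 defaults (ARC max = 1/2 of RAM, ARC min = 1/32 of RAM, dirty data max = 10% of RAM capped by `zfs_dirty_data_max_max`) and the write-throttle curve: once dirty data passes `zfs_delay_min_dirty_percent`, each transaction is delayed by `zfs_delay_scale * (dirty - min) / (max - dirty)` ns, capped at 100 ms. It is an illustration, not the kernel code.

```python
GIB = 1 << 30
MIB = 1 << 20

# Assumption: memory size back-calculated from zfs_dirty_data_max = 10% of RAM.
ALLMEM = 33_612_808_190


def memory_defaults(allmem: int) -> dict:
    """Default sizes derived from physical memory (tunables left at 0/default)."""
    dirty_max_max = min(4 * GIB, allmem * 25 // 100)  # zfs_dirty_data_max_max_percent = 25
    return {
        "arc_max": allmem // 2,   # "Max Size (High Water)"
        "arc_min": allmem // 32,  # "Min Size (Hard Limit)", i.e. arc_max / 16
        "dirty_data_max_max": dirty_max_max,
        "dirty_data_max": min(allmem * 10 // 100, dirty_max_max),  # zfs_dirty_data_max_percent = 10
    }


def tx_delay_ns(dirty: int, dirty_max: int, min_dirty_pct: int = 60,
                delay_scale: int = 500_000, delay_max_ns: int = 100_000_000) -> int:
    """Per-transaction delay of the ZFS write throttle (sketch)."""
    delay_min_bytes = dirty_max * min_dirty_pct // 100
    if dirty <= delay_min_bytes:
        return 0  # below zfs_delay_min_dirty_percent: no throttling
    if dirty >= dirty_max:
        # ZFS blocks new writes here until a txg sync frees space; return the cap for simplicity.
        return delay_max_ns
    return min(delay_scale * (dirty - delay_min_bytes) // (dirty_max - dirty), delay_max_ns)


if __name__ == "__main__":
    d = memory_defaults(ALLMEM)
    print(f"ARC max: {d['arc_max'] / GIB:.2f} GiB, ARC min: {d['arc_min'] / MIB:.2f} MiB")
    print(f"zfs_dirty_data_max: {d['dirty_data_max']}")
    for pct in (50, 70, 80, 90, 99):
        dirty = d["dirty_data_max"] * pct // 100
        print(f"dirty {pct:2d}% -> delay {tx_delay_ns(dirty, d['dirty_data_max']) / 1000:.1f} us")
```

With these defaults, `zfs_delay_scale` is the delay at the midpoint between 60% and 100% dirty (80%), and the curve rises steeply as dirty data approaches the limit, which is how ZFS pushes back on writers when the disks cannot flush as fast as data arrives.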

```
# arcstat
    time  read  miss  miss%  dmis  dm%  pmis  pm%  mmis  mm%  arcsz     c
10:26:57   128    44     34    44   34     0    0     0    0   3.1G  3.1G
```
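To read the `arcstat` line it helps to pair each header with its value; the same hit/total arithmetic also cross-checks the `arc_summary` ratios. A small sketch (the helper names are my own; `arcsz` is the current ARC size and `c` its target size):

```python
from typing import Dict

HEADER = "time read miss miss% dmis dm% pmis pm% mmis mm% arcsz c"
SAMPLE = "10:26:57 128 44 34 44 34 0 0 0 0 3.1G 3.1G"


def parse_arcstat(header: str, line: str) -> Dict[str, str]:
    """Map each arcstat column name to its value (whitespace-separated)."""
    names, values = header.split(), line.split()
    if len(names) != len(values):
        raise ValueError("header and sample have different column counts")
    return dict(zip(names, values))


def percent(part: float, whole: float) -> float:
    return 100.0 * part / whole if whole else 0.0


if __name__ == "__main__":
    row = parse_arcstat(HEADER, SAMPLE)
    # 44 of the 128 reads in this interval missed the ARC, and all 44 were demand misses (dmis).
    print(f"miss% {percent(int(row['miss']), int(row['read'])):.1f} (arcstat shows {row['miss%']})")
    # arc_summary: overall cache hit ratio = hits / total accesses.
    print(f"cache hit ratio {percent(66.00e6, 71.39e6):.2f}%")
    # Demand data efficiency = demand data hits / (hits + misses); slightly off from
    # the 63.38% shown because arc_summary's inputs are rounded.
    print(f"data demand efficiency {percent(8.49e6, 8.49e6 + 4.91e6):.2f}%")
```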

Thanks,

Jack