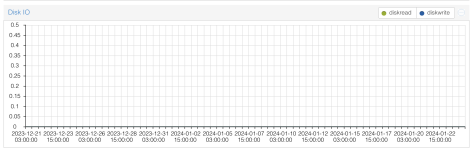

Greetings. I have an issue with all of my LXC containers: they report 0 disk reads/writes, as the screenshots in this post show.

I am using prometheus-pve-exporter to monitor my Proxmox containers. It takes its data from the same API the UI uses, so with these zero values I cannot build alerts on heavy disk reads/writes.

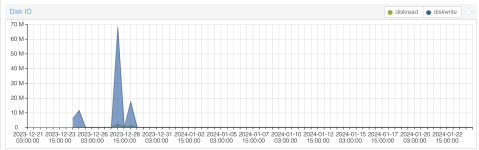

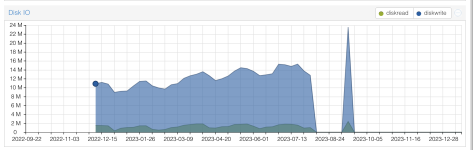

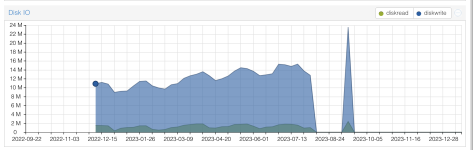

From what I understand, these metrics are taken from cgroups, and it appears to be cgroups itself that is not reporting any disk reads/writes. The rbytes/wbytes values in the container's io.stat file do not change over time, even though the container has certainly done some disk reads and writes.
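To double-check that the counters really are static, something like the sketch below could take two snapshots of an io.stat file and print how much rbytes and wbytes grew per device in between. The function names `io_delta` and `sample_io_stat` are made up for illustration. The sketch assumes the cgroup v2 io.stat layout of one `MAJ:MIN key=value ...` line per device, and that the first snapshot is not empty.

```shell
# Sketch: compare two snapshots of a cgroup v2 io.stat file.
# io_delta and sample_io_stat are hypothetical helper names.

# io_delta BEFORE_FILE AFTER_FILE
# Prints one line per device with the growth of rbytes and wbytes.
io_delta() {
    awk '
        # Return the numeric value of key "name" (e.g. rbytes) from one line.
        function counter(line, name,    i, n, parts, kv) {
            n = split(line, parts, " ")
            for (i = 2; i <= n; i++) {
                split(parts[i], kv, "=")
                if (kv[1] == name) return kv[2] + 0
            }
            return 0
        }
        # First file: remember the counters per device (column 1 is MAJ:MIN).
        NR == FNR {
            r[$1] = counter($0, "rbytes")
            w[$1] = counter($0, "wbytes")
            next
        }
        # Second file: print the growth since the first snapshot.
        {
            printf "%s read_delta=%.0f write_delta=%.0f\n", $1,
                counter($0, "rbytes") - r[$1], counter($0, "wbytes") - w[$1]
        }
    ' "$1" "$2"
}

# sample_io_stat FILE [SECONDS]
# Snapshots FILE, waits SECONDS (default 10), snapshots again, prints deltas.
sample_io_stat() {
    local before after
    before=$(mktemp) && after=$(mktemp) || return 1
    cat "$1" > "$before"
    sleep "${2:-10}"
    cat "$1" > "$after"
    io_delta "$before" "$after"
    rm -f "$before" "$after"
}
```

For example, running `sample_io_stat /sys/fs/cgroup/lxc/102/io.stat 30` on the host while the container is writing a file should show non-zero deltas if cgroups is accounting for the I/O. If every delta stays at 0, the problem is below the Proxmox API.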

```bash
proxmox@proxmox-1 ~ ❯ cat /sys/fs/cgroup/lxc/102/io.stat
8:48 rbytes=26578944 wbytes=0 rios=1426 wios=0 dbytes=0 dios=0
252:4 rbytes=26578944 wbytes=0 rios=1426 wios=0 dbytes=0 dios=0
```

I also found a somewhat related thread: https://forum.proxmox.com/threads/disk-i-o-graph-is-empty.134728/, but I am not using ZFS for my containers. All of my containers use a single mount point on a separate SSD, and I also have 2 SSDs set up as RAID 1 that Proxmox itself boots from.

I am not sure where to dig further. Has anyone faced the same issue, and can someone assist?