Latest activity

-

StudlyCaps posted the thread [SOLVED] Unprivileged LXC will not start with NFS share via bind mount in Proxmox VE: Installation and configuration. I am trying to get an NFS share accessible by an unprivileged LXC. I've been following the guide here, mounting the NFS share on my host, then bind mounting the host directory to my container. The problem I am having is that when the mount point...

-

glockmane replied to the thread USB Backup für mehrere Server - Best Practice?. @news Profix? Thanks for the link, I'll take a look! So you first make a local backup and then sync it to the external disk with rsync? Encrypting with LUKS sounds like a good idea.

-

madindehead replied to the thread Dell OpenManage installation on Proxmox 9 with Debian 13. Sorry, can you please clarify where you used the import tool? Is this in the web UI or via the CLI?

-

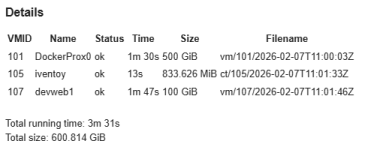

jlauro replied to the thread New Import Wizard Available for Migrating VMware ESXi Based Virtual Machines. I have seen others report problems with importing sometimes... and thought I would share one that can cause the issue that I don't think has been widely mentioned... (mainly because it happened today... down to about 6 VMs out of almost...

-

korenchkin replied to the thread e1000 driver hang. Oh cra*, I should not have looked into this thread :) I welcome myself to this issue with a Dell Pro Max T2 onboard piece of... I think I was downloading at 500 Mbit tops (500 Mbit from ISP) when this happened. Funny thing is, I went to MeshCentral (other...

-

bl1mp replied to the thread Odd Behavior in Debian 13 VM: Cannot Reach Internet After Adding Second NIC to VM Set Up with Single NIC?. Hi SInisterPisces, can you elaborate a little bit on how you set up the VM? And which services are used for network interface management? When using the Debian cloud images, they will ship with netplan and the corresponding network management...

-

esquimo replied to the thread [SOLVED] qemu-agent 100% CPU usage. Thanks for the reply. I agree that it seems to be storage related but I don't really think so. Let me know what you think with the following info - I have a total of 3 identical hosts, same hardware, same PVE, and more or less same usage. They are...

-

das1996 replied to the thread Try to install Proxmox 9.1 on a old linux server, and get lots of disk IO error.. ^^ Won't using this reduce performance, as it disables NCQ? If so, it seems like a band-aid for the real issue, some change in the kernel.

-

TErxleben replied to the thread Zugriff mit Windows auf ein Proxmox Storage möglich?. So can we conclude that fleecing could only help in the SSD vs. HDD case, but comes with additional pitfalls?

-

scarfaceuk replied to the thread Mountpoints for LXC containers broken after update. I ran into the same problem; it took me a while to figure out what the problem was until I found this thread. Downgrading pve-container to 6.0.18 resolved it for me, thanks. Will hold off updating my Proxmox nodes until an updated version...

-

SInisterPisces posted the thread Odd Behavior in Debian 13 VM: Cannot Reach Internet After Adding Second NIC to VM Set Up with Single NIC? in Proxmox VE: Networking and Firewall. Hello, I'm troubleshooting this now, but wanted to start a thread to document what happened. Hopefully, someone will find this in the future if they're troubleshooting something similar. I've got a Debian 13 VM. I set it up with NIC 1, on VLAN...


scarfaceuk reacted to miztahsparklez's post in the thread Mountpoints for LXC containers broken after update with Like.

Unfortunately, I also got caught by this. It's affecting all unprivileged containers with bind mounts. I caught mine by trying to migrate a container after updating. It doesn't matter if it's RO or not. My NFS share is RW. Current workaround...


jsterr reacted to RoCE-geek's post in the thread Redhat VirtIO developers would like to coordinate with Proxmox devs re: "[vioscsi] Reset to device ... system unresponsive" with Like.

The resolving commit for mentioned vioscsi (and viostor) bug was merged 21 Jan 2026 into virtio master (commit cade4cb, corresponding tag mm315). So if the to-be-released version will be tagged as >= mm315, the patch will be there. As of me...


RoCE-geek replied to the thread Redhat VirtIO developers would like to coordinate with Proxmox devs re: "[vioscsi] Reset to device ... system unresponsive". The resolving commit for mentioned vioscsi (and viostor) bug was merged 21 Jan 2026 into virtio master (commit cade4cb, corresponding tag mm315). So if the to-be-released version will be tagged as >= mm315, the patch will be there. As of me...

-

It looks like you ran into the bug reported here: https://bugzilla.proxmox.com/show_bug.cgi?id=7271 Feel free to chime in there! To temporarily downgrade pve-container you can run: apt install pve-container=6.0.18

-

Rense reacted to chamo207's post in the thread LXC containers not startting after Proxmox update with Like.

I ran into this same issue today. This thread fixed it for me: https://forum.proxmox.com/threads/proxmox-9-1-5-breaks-lxc-mount-points.180161/ tldr: downgrade pve-container to 6.0.18


ShaMAD posted the thread davfs2 malfunction in Proxmox 9.1.5 environment in Proxmox VE: Installation and configuration. davfs2 has stopped functioning in both privileged and unprivileged LXC containers with FUSE enabled, as well as on the Proxmox host itself. The issue occurs when mounting WebDAV resources (tested with a cloud storage service), where the mount...

-

kombuussy reacted to dondizzurp's post in the thread [GUIDE] Install Home Assistant OS in a VM with Like.

So just like my other guide, this is more for my own records so I can come back and refer to it. I know there are scripts for these things, but I prefer doing them manually so I can learn along the way. Maybe it can help someone else in the...


bl1mp reacted to Johannes S's post in the thread Cluster - Corosync - nur 1 nic - VLAN nutzen? with Like.

In principle that is not a wrong assumption, since ZFS strictly speaking isn't real shared storage (precisely because you always have some data loss there). But if it is enough for your own purposes, that doesn't matter ;)


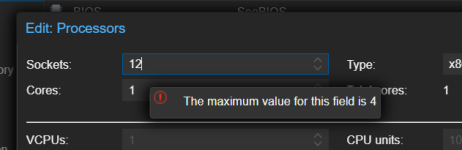

ITMader replied to the thread Proxmox Backup/Migration mit Veeam. The cherry on top is that, during a Full VM Restore, the Veeam plugin uses the number of sockets instead of the number of cores. This happened with Veeam v13.0.1 and Proxmox 9.1.1. Sloppiness cubed.