Latest activity

-

Cookiefamily reacted to mkoeppl's post in the thread Adding HA resources to an existing HA affinity rule and outdated manpages with Like.

In addition to what @dakralex said, the API for HA rules is indeed a bit different than the one for groups was. So the way to do it right now is to run ha-manager rules set <type> <rule name> --resources vm:100,vm:101,vm:102, listing the all of...
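Following mkoeppl's description, here is a minimal Python sketch that assembles the `ha-manager rules set` call with the complete resource list. It assumes, as the truncated post appears to say, that `--resources` takes the full list rather than appending to it. The rule type `node-affinity`, the rule name `my-rule`, and the VM IDs are hypothetical placeholders. The command is only printed, not executed.

```python
from __future__ import annotations


def merge_resources(existing: list[str], new: list[str]) -> list[str]:
    """Existing + new HA resource IDs, duplicates dropped, order kept."""
    merged: list[str] = []
    for sid in existing + new:
        if sid not in merged:
            merged.append(sid)
    return merged


def rules_set_argv(rule_type: str, rule_name: str, resources: list[str]) -> list[str]:
    """Build the ha-manager command line as an argv list (not executed).
    Assumption: --resources replaces the rule's whole resource list."""
    return [
        "ha-manager", "rules", "set", rule_type, rule_name,
        "--resources", ",".join(resources),
    ]


if __name__ == "__main__":
    current = ["vm:100", "vm:101"]  # hypothetical: resources already in the rule
    to_add = ["vm:102"]             # hypothetical: resource being added
    # "node-affinity" and "my-rule" are hypothetical; use your rule's type and name.
    argv = rules_set_argv("node-affinity", "my-rule", merge_resources(current, to_add))
    print(" ".join(argv))
    # prints: ha-manager rules set node-affinity my-rule --resources vm:100,vm:101,vm:102
```

Building the full list from the current one avoids accidentally dropping resources that were already part of the rule.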


ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. Also an interesting note from the lifecycle pages on B2. I realize B2 is currently a "Generic" use of S3 with PBS. I'm not sure about the long-term plans for PBS and S3 providers, but I do see many applications have specific selections for...

-

Pure guesswork: your VMs now have their own private IP address in a network that is not routed outside of PVE, correct? Then the usual solution is NAT/masquerading. For a "normal" setup, take a look here...
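A minimal Python sketch of the reasoning above, assuming a hypothetical VM subnet 10.10.10.0/24 behind a bridge and a hypothetical uplink interface eno1: private ranges are not routed on the public internet, so outbound traffic from them is typically masqueraded to the host's address with an iptables POSTROUTING rule. IPv4 forwarding (net.ipv4.ip_forward = 1) also has to be enabled on the host. The code only builds the command; it does not run it.

```python
from __future__ import annotations

import ipaddress


def needs_nat(subnet: str) -> bool:
    """True if the subnet lies in a private range (e.g. RFC 1918), i.e. it is
    not routable on the internet, so VMs in it usually need NAT to get out."""
    return ipaddress.ip_network(subnet).is_private


def masquerade_rule(subnet: str, uplink: str) -> list[str]:
    """iptables argv that masquerades traffic from `subnet` leaving via `uplink`.
    ip_network() is strict by default, so '10.10.10.1/24' (host bits set)
    raises ValueError; pass the network address instead."""
    net = ipaddress.ip_network(subnet)
    return ["iptables", "-t", "nat", "-A", "POSTROUTING",
            "-s", str(net), "-o", uplink, "-j", "MASQUERADE"]


if __name__ == "__main__":
    subnet, uplink = "10.10.10.0/24", "eno1"  # hypothetical values
    if needs_nat(subnet):
        print(" ".join(masquerade_rule(subnet, uplink)))
```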

-

ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. This is also interesting, so I am going to try uploadtohide = 1 and hidetodelete = 30. This is all for testing the S3 aspect, and these copies are the "3rd" copy of the backups. Still, this could produce some data on using B2, but I suspect...
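The two values above correspond to B2 lifecycle rules, which (as B2 documents them) hide a file a set number of days after upload and then delete hidden files a set number of days after hiding. A minimal Python sketch of that timeline, assuming B2's field names `daysFromUploadingToHiding` and `daysFromHidingToDeleting`; it models only the lifecycle rule and ignores hides or deletes issued by the client itself:

```python
from __future__ import annotations

import json
from typing import Optional


def lifecycle_rule(upload_to_hide: Optional[int], hide_to_delete: Optional[int],
                   prefix: str = "") -> dict:
    """One B2 lifecycle rule; None means 'never' for that step (assumed field names)."""
    return {
        "daysFromUploadingToHiding": upload_to_hide,
        "daysFromHidingToDeleting": hide_to_delete,
        "fileNamePrefix": prefix,  # empty prefix: the rule covers the whole bucket
    }


def timeline(upload_day: int, rule: dict) -> tuple[Optional[int], Optional[int]]:
    """Days on which the rule would hide and then delete a file uploaded on
    `upload_day`. Client-issued hides/deletes (e.g. from PBS) are ignored."""
    to_hide = rule["daysFromUploadingToHiding"]
    to_delete = rule["daysFromHidingToDeleting"]
    hide_day = None if to_hide is None else upload_day + to_hide
    delete_day = None if hide_day is None or to_delete is None else hide_day + to_delete
    return hide_day, delete_day


if __name__ == "__main__":
    rule = lifecycle_rule(1, 30)
    print(json.dumps(rule))
    print(timeline(0, rule))  # (1, 31)
```

By this model, uploadtohide = 1 and hidetodelete = 30 would hide every matching file one day after upload and remove it 30 days later, whether or not PBS still references it, which may matter for a datastore whose chunks are shared across snapshots.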

-

XN-Matt replied to the thread Watchdog Reboots. Logs don’t show any. Just to note, this issue occurs at any time of day, while our backups only run at very specific times. There is no correlation.

-

ccolotti reacted to fabian's post in the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups with Like.

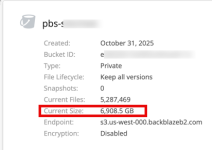

No, the 7TB refers to the logical data before deduplication. Your file count is also a lot higher than in your GC log, so something is definitely fishy. Could you check your lifecycle rules to ensure that objects are not moved to some...
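To make fabian's point concrete: a logical (pre-deduplication) size is not directly comparable to the bytes actually stored, and the ratio of the two is the deduplication factor. A minimal Python sketch, using illustrative numbers rather than measurements from this thread:

```python
from __future__ import annotations

TB = 10**12  # decimal terabyte


def dedup_factor(logical_bytes: int, stored_bytes: int) -> float:
    """Ratio of logical data referenced by all snapshots (before
    deduplication) to the bytes actually stored as unique chunks."""
    if stored_bytes <= 0:
        raise ValueError("stored_bytes must be positive")
    return logical_bytes / stored_bytes


if __name__ == "__main__":
    # Illustrative figures only: 7 TB logical vs. 1 TB stored.
    print(f"{dedup_factor(7 * TB, 1 * TB):.1f}x")  # prints 7.0x
```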


ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. Since I only keep about 14 backups in total (not days), I "could" set this to 30 days and see if it removes some. This may have to be considered differently for each S3 provider and its lifecycle options. Just adding the B2...

-

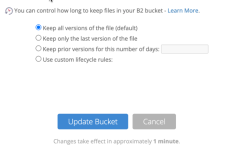

ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. On Backblaze, the setup was initially recommended to be configured as shown in the image. That recommendation came from an older post, back when S3 support first appeared, but this is how it was set and still is. I would have assumed this allows PBS to delete. I guess now the question may...

-

If your storage supports NFS (which is a big if; see alexskysilk's question), you can of course use it. The benefit is that you can then use snapshots and thin provisioning, and it will (mostly) feel like VMFS. These benefits come with some...

-

XN-Matt replied to the thread Watchdog Reboots. 20 minutes before the last reboot, and one prior to that.

-

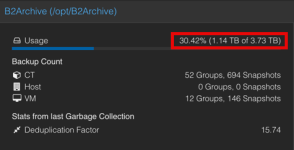

ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. The last couple of lines stand out, but they seem to correlate with the 7TB on S3. So there is possibly a bit of a disconnect on the S3 usage side. The local PBS server only has about 4TB of total disk space available locally...

-

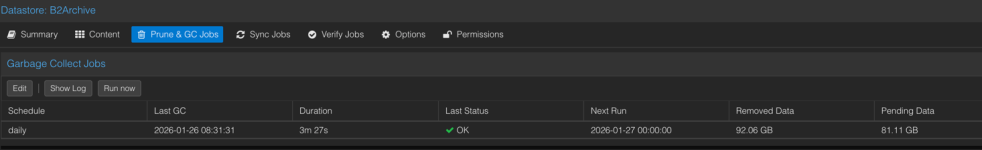

ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. Sure, here is the one that just ran.

-

ccolotti reacted to fabian's post in the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups with Like.

Could you post a GC task log?


ccolotti replied to the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups. Yes, I checked that it runs once a day, and I even ran it manually. It only shows 80GB or so each run, which is why I think things are fine locally and it's the S3 side that may not be getting cleaned out "correctly" in the process, and maybe this could...

-

Hello, today I updated my Proxmox VE nodes from Linux 6.8.12-16-pve to Linux 6.8.12-18-pve, and now Thunderbolt networking isn't working anymore. The driver "/lib/modules/$(uname -r)/kernel/drivers/thunderbolt/thunderbolt-net.ko" is missing. Did...
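One way to see which Thunderbolt-related modules a kernel actually ships is to search its whole module tree rather than a single fixed path. A minimal Python sketch, assuming the usual /lib/modules/<release>/kernel layout; it matches both hyphen and underscore spellings and compressed variants (e.g. .ko.zst), and makes no assumption about which subdirectory the network driver lives in:

```python
from __future__ import annotations

import os
from pathlib import Path


def find_thunderbolt_modules(release: str | None = None,
                             root: str = "/lib/modules") -> list[Path]:
    """Module files whose name starts with 'thunderbolt' under
    <root>/<release>/kernel; an empty list if that tree does not exist."""
    release = release or os.uname().release  # same value as `uname -r`
    base = Path(root) / release / "kernel"
    if not base.is_dir():
        return []
    # rglob also yields the 'thunderbolt' directory itself; keep module files only
    return sorted(p for p in base.rglob("thunderbolt*")
                  if p.is_file() and ".ko" in p.name)


if __name__ == "__main__":
    for path in find_thunderbolt_modules():
        print(path)
```

Running it with the old and the new release string and comparing the output would show whether the module was dropped or only moved or renamed.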

-

XN-Matt replied to the thread Watchdog Reboots. Full logs as in the whole journal, or just those specific to a process? It usually happens out of the blue, but it has also happened during an upgrade. pveversion -v
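Since the question is which logs to share, here is a minimal Python sketch for narrowing down the journal of the boot that ended in the reboot. It assumes a systemd-based node where `journalctl -b -1` prints the previous boot (if the journal is not persistent, earlier boots may not be available). The keyword list is a hypothetical starting point, not an official list of watchdog-related messages.

```python
from __future__ import annotations

import subprocess

# Hypothetical keywords worth scanning for around an unexpected reboot.
KEYWORDS = ("watchdog", "fence", "corosync", "pve-ha")


def previous_boot_journal() -> list[str]:
    """Lines of the previous boot's journal, via `journalctl -b -1`."""
    out = subprocess.run(["journalctl", "-b", "-1", "--no-pager"],
                         capture_output=True, text=True, check=True)
    return out.stdout.splitlines()


def matching_lines(lines: list[str], keywords=KEYWORDS) -> list[str]:
    """Keep lines that mention any keyword, case-insensitively."""
    lowered = tuple(k.lower() for k in keywords)
    return [line for line in lines if any(k in line.lower() for k in lowered)]
```

On an affected node, `matching_lines(previous_boot_journal())` would return the candidate lines; posting the full journal around the last timestamp before the reboot is still more useful than a filtered excerpt.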

-

XN-Matt replied to the thread Watchdog Reboots. Interesting. I wonder when those issues were introduced. The number of VMs and HA resources we have has not changed since these issues began. Our issues started in mid-November.

-

THANK you all for your answers!!!

-

Hi, we have a Proxmox 8.3 host running on a Fujitsu PRIMERGY RX2540 M4 server. It runs ZFS on top of hardware RAID, which I know is not recommended, but I don't think that should lead to this behavior. It has crashed three times during the last 4...

-

ccolotti posted the thread S3 Bucket Appears to not reflect Deleted/Pruned Backups in Proxmox Backup: Installation and configuration. Hey all. I have been testing an S3 (Backblaze B2) bucket on PBS 4.x for an archive. I've set up a sync to it with a basic retention of only about 14 days. The odd thing is that the local size shows about 1TB of use, but the bucket shows 7x...