Latest activity

-

Xoxxoxuatl replied to the thread Geringe Lese-Performance mit iSCSI und Dell ME5 (Low read performance with iSCSI and Dell ME5). Another thing I noticed: above a certain queue size (--iodepth), performance collapses. READ: bw=3876MiB/s (4064MB/s), 3876MiB/s-3876MiB/s (4064MB/s-4064MB/s), io=37.9GiB (40.6GB), run=10001-10001msec READ: bw=1396MiB/s...

-

robertlukan reacted to theflakes's post in the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20 with Like.

FWIW, I had a stall on backups after upgrading and unpinning my PBS server but not upgrading PVE to the newest kernel a couple of days ago. I then upgraded the PVE cluster to the new kernel, and so far it's working as it should.


theflakes replied to the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20. FWIW, I had a stall on backups after upgrading and unpinning my PBS server but not upgrading PVE to the newest kernel a couple of days ago. I then upgraded the PVE cluster to the new kernel, and so far it's working as it should.

-

Anthony_wolfSSL replied to the thread Proxmox in a US Federal environment?. Hi all, @dpearceFL (and everyone else), have you perhaps considered wolfSSL's FIPS 140-3 offerings? Recently we've done work to override the cryptography underlying OpenSSL, GnuTLS, NSS and libgcrypt while keeping all their interfaces...

-

muekno replied to the thread [SOLVED] difference between host screen and effective IP. That's the trick: I corrected /etc/hosts and everything now shows the correct static IP, thank you.

-

muekno reacted to leesteken's post in the thread [SOLVED] difference between host screen and effective IP with Like.

Check your /etc/hosts and /etc/network/interfaces. Please note that Proxmox does not support DHCP out of the box and it's best to use a static IP address outside the DHCP-range of the router.


muekno reacted to Impact's post in the thread [SOLVED] difference between host screen and effective IP with Like.

Please share the output of this command: grep -sR "172.16.1" /etc
The pvebanner command suggestion above was made under the assumption that /etc/hosts is configured correctly.


dagaspa replied to the thread MicroVM support. I think there may indeed be some overlap with bug 6905. In both cases, the issue seems to stem from devices being added unconditionally in PVE::QemuServer.pm, based on the assumption of a PCI-based "classic PC" machine. With machine: microvm...

-

therealkeith reacted to fabian's post in the thread [SOLVED] Proxmox VE 9.0 ISO Upload Corrupts Some Windows Server 2025 ISOs with Like.

Since this weekend's Debian point release, glibc is fixed, so this should no longer be an issue on a fully updated and rebooted system.


bbgeek17 replied to the thread [SOLVED] Not able to add any of my 3 proxmox nodes to PDM. You can also try curl -k https://[fqdn or IP]:8006; this will show not only that the port is open but also that you get a full response. Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox


Leopold31 reacted to fabian's post in the thread [SOLVED] Proxmox VE 9.0 ISO Upload Corrupts Some Windows Server 2025 ISOs with Like.

Since this weekend's Debian point release, glibc is fixed, so this should no longer be an issue on a fully updated and rebooted system.


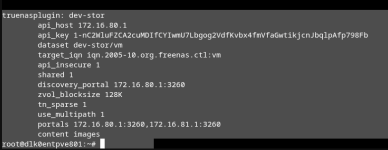

jt_telrite replied to the thread TrueNAS Storage Plugin. I am looking for a configuration to "pre-stage" VM disks as DR for a primary storage failure. I understand the Proxmox limitation; thank you for confirming. It looks like I'm going to have to look at using the TrueNAS replication tool for this.. or...

-

Deviss replied to the thread [SOLVED] PVE 9.1.4 RAM läuft voll und OOM-Killer schießt VMs ab (RAM fills up and the OOM killer kills VMs). Exactly, I had manually set the ARC to 8 GB, because back then it took about 25 GB at its default value. Until recently that did not matter, because the host was not acting up. In the meantime I have disabled ballooning on all VMs. But...

-

VictorSTS reacted to t.lamprecht's post in the thread New Archive CDN for End-of-Life (EOL) Releases with Like.

Today, we announce the availability of a new archive CDN dedicated to the long-term archival of our old and End-of-Life (EOL) releases. Effective immediately, this archive hosts all repositories for releases based on Debian 10 (Buster) and older...


bbgeek17 replied to the thread [SOLVED] Qemu agent install on PDM. Try: rm -rf /var/lib/apt/lists/*; apt clean; apt update


bbgeek17 replied to the thread Open with PVE UI adds wrong port at the end. That is a somewhat critical piece of information for your particular situation. You can create a trusted self-signed certificate for the IP if you want. Without looking at the code, I suspect that it is hard-coded. You may want to try to...


Carontes replied to the thread Problems in restoring LXCs backups from proxmox 8.4 to 9.1. I've reinstalled Proxmox 8.4 and restored the backups I had. Now everything works fine. I don't know if it is because there's some kind of incompatibility between 8.4 and 9.1 backups or because 9.1 is still "young". I've resolved reinstalling the...

-

Carontes replied to the thread SDN DHCP not working. Hello, I'm sorry to post here so long after the last message in this thread, but I have the same problem. I've set up the SDN, given it a DHCP range, and enabled DHCP, but when I create an LXC or VM giving it the SDN zone name bridge with...

-

Hello everyone, over the weekend I upgraded our PVE cluster from PVE 8.4 to 9.1. Ceph was already on Squid beforehand and was updated from 19.2.1 to 19.2.3. The update went smoothly, but since then Ceph has been reporting the following...

-

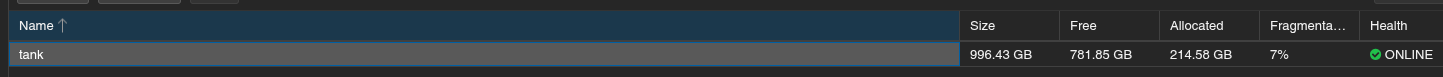

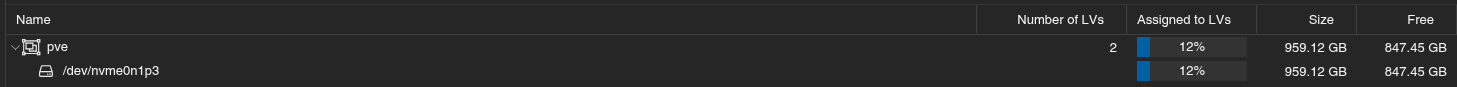

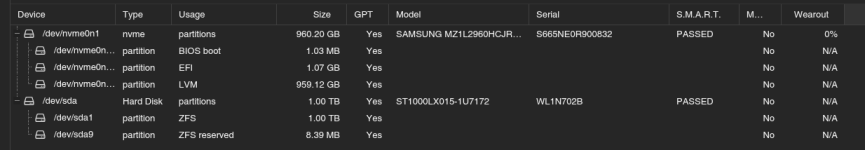

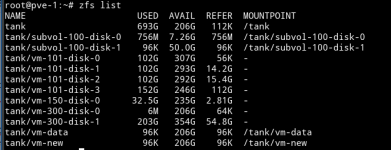

Hi, I think I have made a mess of my storage settings: I have 2x 1 TB disks, one SSD and one spinning disk (node disks screenshot attached). I have ZFS mounted as tank. The problem is on VM creation: local shows only 25 GB out of 100 GB. cannot...