Latest activity

-

Odai S. replied to the thread PBS Garbage Collection fails on S3 (Contabo Object Storage) – missing `Name` field in response. Thanks for the confirmation, Chris. Happy New Year, and I appreciate your prompt response.

-

LG-ITI reacted to bbgeek17's post in the thread [SOLVED] External Storage Server for 2 Node Cluster with Like.

Hi @LG-ITI, welcome to the forum. First, let’s make sure we are aligned on what “ZFS-over-iSCSI” means: This approach allows you to programmatically expose virtual disks as iSCSI LUNs. These virtual disks are backed by ZFS. The consumers of...

-

LG-ITI reacted to alexskysilk's post in the thread [SOLVED] External Storage Server for 2 Node Cluster with Like.

There are two ways to accomplish what you're after (three if you include ZFS replication, but that doesn't use the external storage device): 1. ZFS over iSCSI, as @bbgeek17 explained. 2. qcow2 over NFS: install nfsd, map the dataset into exports...

-

lordpit reacted to varlog's post in the thread pvesh get /nodes/{node}/qemu & qm list very slow since Proxmox 9 with Like.

Same here: a freshly installed server with Proxmox 9.1.4. After rebooting, commands like `qm list` are very fast, around 0.7 seconds. But the longer the uptime, the slower the commands become. Uptime 3 days: `time qm list` real 0m21.239s user...

-

lordpit reacted to Decco1337's post in the thread pvesh get /nodes/{node}/qemu & qm list very slow since Proxmox 9 with Like.

I have upgraded several hosts from Proxmox 8 to 9, and since then I have very long loading times when I use the command `pvesh get /nodes/{node}/qemu`, and the same for `qm list`. The problem is that it takes 40-60s to get a result on different hosts...

-

DerekG replied to the thread CT backup to PBS - Excess data causing very long backup window. Hi Chris, thanks for your input. I used ncdu to check for the large sparse file, but the only one I could find of anything like 2TB was the kcore file, which is 120TB and normal as far as I can tell. I did change this CT from unprivileged to...

-

Odai S. replied to the thread PBS Garbage Collection fails on S3 (Contabo Object Storage) – missing `Name` field in response. So I consulted "uncle" ChatGPT and it gave me this. Is this what is happening? This part: Means: Chris has already submitted a NEW patch. That patch tells PBS: This patch is not yet released in a stable package. It’s currently in...

-

niekniek89 posted the thread Detected Hardware Unit Hang in Proxmox VE: Installation and configuration. Hello, I can't find the right answer to my question right away, so I'm opening a new post. My Proxmox host has suddenly become unreachable several times now. The log shows the following message: Dec 31 19:55:58 Proxmox01 kernel: e1000e...

-

Glowsome reacted to maennlse's post in the thread Rocky 10 template and resolv.conf incomplete with Like.

@Glowsome, thanks for the 'ansible-fix'. We're using SaltStack (slightly different architecture: the client connects to the server, not vice versa), which is why we currently work around it using the hook script. By the way, I reported the bug and it seems to be...

-

Mario Rossi replied to the thread After upgrading from 9.0 and 9.1 VM Truenas won't start. That's the new VM. The old one was installed in UEFI mode. The new one clearly said it couldn't boot from the ISO because it wasn't UEFI compatible, so I had to use SeaBIOS.

-

maennlse replied to the thread Rocky 10 template and resolv.conf incomplete. @Glowsome, thanks for the 'ansible-fix'. We're using SaltStack (slightly different architecture: the client connects to the server, not vice versa), which is why we currently work around it using the hook script. By the way, I reported the bug and it seems to be...

-

SneedsFeedAndSeed replied to the thread About to pull my hair out. What is your NIC? I have two computers with Intel e1000e NICs and learned about the offloading bug, which results in the conditions you have. If so, search around on here for the fix.

-

asteffen replied to the thread S3 object retention. Ah, thank you for finding that. I now see in the original bug tracker for implementing S3 support that object locking was not included initially but may be considered in the future.

-

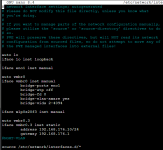

louie1961 replied to the thread About to pull my hair out. Try adding 192.168.1.1 to your resolver list and see if that helps.

-

uzumo replied to the thread After upgrading from 9.0 and 9.1 VM Truenas won't start. Press any key to enter the Boot Manager menu, then enter EFI Firmware Setup. Open Device Manager > Secure Boot Configuration and uncheck “Attempt Secure Boot”. Edit: Oh. If that screen doesn't even display, I can't tell. I wrote this because...

-

SteveITS replied to the thread setting to trigger VM power cycle when guest OS reboots? I could see that being handy… maybe add it to the Bugzilla site as a suggestion if it is new. It’s not what you asked, but I expect you should be able to grant permission in PVE to just their VM so they can do the shutdown.

-

Mario Rossi replied to the thread After upgrading from 9.0 and 9.1 VM Truenas won't start. I understand. I have another VM with FreeBSD OPNsense, but that one updates constantly and has no problems.

-

SteveITS replied to the thread Connect and sync two PVEs drives with PBS. Though it’s intended for a second PBS, check whether it is able to sync one datastore to another.

-

uzumo replied to the thread GPU Passthrough not respecting secondary GPU. Even if you remove vfio, it doesn't appear to indicate that the blacklist has been removed. If you are using a script, the blacklist is unnecessary.