Latest activity

-

_gabriel replied to the thread network speed problems. What is the host CPU?

-

SteveITS replied to the thread CEPH cache disk. Yes, the cache tiering. I meant set up pools by device class like https://pve.proxmox.com/wiki/Deploy_Hyper-Converged_Ceph_Cluster#pve_ceph_device_classes. The DB/WAL is generally on SSD or faster disk in front of an HDD OSD. If you share a...

-

bbgeek17 replied to the thread [SOLVED] API agent/get-fsinfo missing VM ID in response. Hi @soufiyan, welcome to the forum. I think the expectation is that you already know the $vmid. You can always do something like this: pvesh get /nodes/pve-2/qemu/$vmid/agent/get-fsinfo --output-format json | jq --argjson vmid "$vmid" '...

-

soufiyan posted the thread [SOLVED] API agent/get-fsinfo missing VM ID in response in Proxmox VE: Installation and configuration. Hello Proxmox community! I'm working on a monitoring script that collects filesystem information from multiple VMs using the agent/get-fsinfo API endpoint. However, I've hit a major roadblock: The Problem: When I call GET...

-

tcabernoch replied to the thread CEPH cache disk. Thanks @guruevi. (And @SteveITS) - So don't bother with doing cache with one of the current disks, as they are all the same. K. - And a SAS disk with high IOPS for DB/WAL on each host should be significantly faster than these SATAs. I've had...

-

SteveITS replied to the thread CEPH cache disk. @tcabernoch IIRC from when I looked into it, using a disk for read cache was deprecated or otherwise not viable with Ceph. I just don’t recall the details now. It does have memory caching. Our prior Virtuozzo Storage setup did have that ability...

-

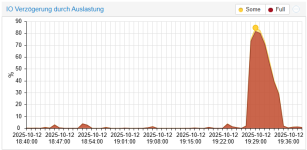

LSX3 replied to the thread Server-Disk I/O delay 100% during cloning and backup. I’m experiencing the same issue where the I/O utilization goes up to 100% when restarting VMs, apparently whenever some load is generated. Is anyone else having this problem? Can anyone reproduce this behavior? The problem occurs with both an...

-

twowordz replied to the thread zpool issue while importing pool. Thanks, that did it.

-

news replied to the thread zpool issue while importing pool. or zpool import -f -d /dev/disk/by-id/ 2697102583354024049

-

toto-ets replied to the thread network speed problems. Yes, in single mode the download goes a little better, I even get to 5G

-

JorgeCanusso reacted to AndreasS's post in the thread Proxmox VE 9 vm backup with Veeam Backup & Replication 12.3.2 with Like.

Good morning all, Proxmox VE 9 is now officially supported on the most recent Veeam with the Proxmox VE plugin, according to this KB article: Veeam KB4775 - Proxmox VE 9 plugin. I have tried a backup with the latest VM version, which is working now. Download...

-

Solution once and for all to all those "disk is busy" "disk has holder" "disk has....." root@pve01:~# sgdisk --zap-all /dev/sdx root@pve01:~# readlink /sys/block/sdx...

-

_gabriel replied to the thread network speed problems. Is there a difference between single-stream and multi-stream speedtest?

-

Hi all, just in case this is still relevant, I've built a 3.4.7 client for armhf (i.e. BeagleBone on armv7l) running Buster. Not fully tested, but it at least compiled after a lot of tweaks and is running the first backups right now, some problem with >4GB...