Latest activity

-

Aavidesh replied to the thread update freezes.in a next attempt, i stopped all VMs and LXCs running and re executed the same process. That seem to have did the trick. it went through and completed the process. wonder what was wrong.

-

DLooks good, keep in mind that the img needs to be in your current directory the way the command is written. If this worked and you are all set, dont forget to mark the thread solved by editing the first post and selecting appropriate drop down...

-

Sshodan posted the thread Proxmox does not allow deleting CT with missing LVM volumes but does for VMs in Proxmox VE: Installation and configuration.Hi, I am salvaging a broken system, I'm copying and deleting the thin volumes manually as I determine they are no longer needed. Some are on a disk no longer in the system. I could delete VMs with missing thin volumes, however I just...

-

Ppattonb replied to the thread weird info in logs.correct, easily fixe, I wonder what I was thinking when I created that mount point. thank you.

-

Hhonggnoh replied to the thread Container backup failed.Thx for reply let me check the space

-

KKingneutron replied to the thread Container backup failed.INFO: zstd: error 70 : Write error : cannot write block : No space left on device Either your fleecing drive, rootfs, or destination is out of free disk space

-

HI would like backup my Container, but get error. Can anyone help ? INFO: starting new backup job: vzdump 106 --notes-template '{{guestname}}' --mode snapshot --node pve --notification-mode notification-system --remove 0 --storage local...

-

Sshadow wizard replied to the thread Restoring a VM causes whole system to slow to a halt.Just like the guy in the last post, I am kinda new to all this. Interesting enough I have a server similar to his. What exactly do you suggest I try, and what to set it to? I am about to head to bed for the evening, but will try the settings...

-

Aavidesh replied to the thread update freezes.root@aadpve:~# ps aux | grep update-initramfs root 8172 0.0 0.0 6660 2252 pts/1 S+ 09:22 0:00 grep update-initramfs

-

Aavidesh replied to the thread update freezes.here is what is shown by top just after running dpkg --configure -a. zstd is consuming very high cpu just prior to system freezing. nothing happens. ssh terminal is frozen as well. I waited for 20 min. then had to physically restart the server.

-

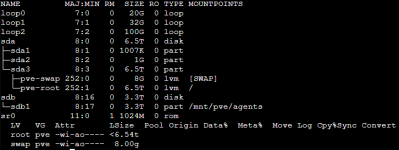

Aavidesh replied to the thread update freezes.disks are free Filesystem Size Used Avail Use% Mounted on udev 7.7G 0 7.7G 0% /dev tmpfs 1.6G 1.6M 1.6G 1% /run /dev/mapper/pve-root 94G 5.7G 84G 7% / tmpfs...

-

Rredactedhosting replied to the thread Restoring a VM causes whole system to slow to a halt.hmm try the steps in this post: https://forum.proxmox.com/threads/vm-performance-issue-while-clone-and-restore.143211/ Backup limit restore traffic.

-

Sshadow wizard replied to the thread Restoring a VM causes whole system to slow to a halt.AHA! Here is an part of it. hopefully this will help you find the issue.. (PS. There is a LOT of this) Oct 10 23:27:12 proliantproxmox kernel: file_write_and_wait_range+0x90/0xc0 Oct 10 23:27:12 proliantproxmox kernel: blkdev_fsync+0x36/0x60...

-

Rredactedhosting replied to the thread Restoring a VM causes whole system to slow to a halt.Can you look in node - > System log -> and focus on when you start the restore and when it finishes. What does it say? i have this issue when eth1 was crashing due to being overloaded on backups.

-

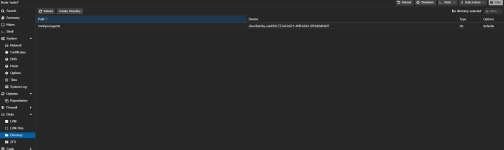

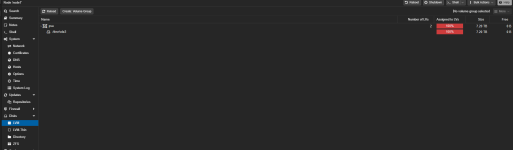

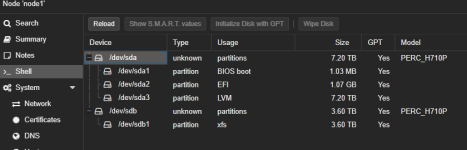

Rredactedhosting posted the thread Storage question, im stumped after hours of research :(! Shrink local and increase Directory storage in Proxmox VE: Installation and configuration.Hi all, im trying to reduce my local storage and re assign it to my "agents" storage that i use for all of my LXCs & VMs. Im short, i have 6ish TB in my node1 local, and i am looking at 3.6TB Agents storage in directory type. My question is how...

-

Sshadow wizard posted the thread Restoring a VM causes whole system to slow to a halt in Proxmox VE: Installation and configuration.When I restore a VM (32 GB Virtual drive, onto a Sata SSD, from network location) my IO delay will go up to over 80% (After the restore is 100% complete) and stay there for 30 min, making the rest of the system totally unusable. There is plenty...

-

SAnyone ever tried IP over USB? Everything I've read indicated that it's easy, just run "modprobe g_ether" and configure an IP address on usb0, use a USB cable to connect to your other device, and boom, done. I just tried it on 3 proxmox boxes...

-

KKingneutron replied to the thread Full Server backup + restore solution.I used to use Veeam, but it broke with the 6.x kernel upgrade. I have custom scripts with fsarchiver that fill my needs Try REaR (Relax And Recover) and do a restore test into a VM, IIRC it also backs up the LVM config

-

Ddevedse replied to the thread How can I find out how much data CEPH repaired on my disks?.Hmm, that explains a few things, however I'd still like to know if there's a way to show the historical bit repairs it did. Anyone else has an idea on that?

-

UIn case it helps anyone I was getting this error from the shell but only when accessing the webui through my reverse proxy, I only have one node configured. Enabling web sockets support in the proxy host configuration fixed the issue for me.