Hello, all.

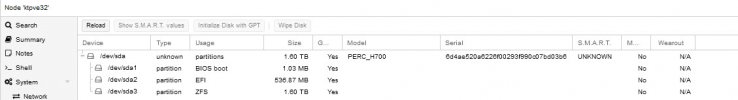

One of the drives in my zpool failed, so I removed it and ordered a replacement.

Now that the new drive has arrived, I'm having trouble replacing the old one.

OS: Debian 11

PVE: 7.3-4

I've installed the replacement drive, and it shows up both in lsblk and in the GUI.

Zpool status:

```
root@proxmox:/home/mikec# zpool status
  pool: rpool
 state: DEGRADED
status: One or more devices could not be used because the label is missing or
        invalid.  Sufficient replicas exist for the pool to continue
        functioning in a degraded state.
action: Replace the device using 'zpool replace'.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
  scan: scrub in progress since Wed Jan 18 21:45:57 2023
        143G scanned at 238M/s, 5.12G issued at 8.53M/s, 143G total
        0B repaired, 3.59% done, no estimated completion time
config:

        NAME                      STATE     READ WRITE CKSUM
        rpool                     DEGRADED     0     0     0
          mirror-0                DEGRADED     0     0     0
            10342417906894345042  FAULTED      0     0     0  was /dev/sda2
            sda2                  ONLINE       0     0     0

errors: No known data errors
```

```
root@proxmox:/home/mikec# lsblk
NAME        MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda           8:0    0   1.8T  0 disk
├─sda1        8:1    0  1007K  0 part
├─sda2        8:2    0   1.8T  0 part
└─sda9        8:9    0     8M  0 part
sdb           8:16   0   1.8T  0 disk
├─sdb1        8:17   0   1.8T  0 part
└─sdb9        8:25   0     8M  0 part
zd0         230:0    0    24G  0 disk
├─zd0p1     230:1    0    50M  0 part
├─zd0p2     230:2    0  23.5G  0 part
└─zd0p3     230:3    0   509M  0 part
zd16        230:16   0    24G  0 disk
├─zd16p1    230:17   0   500M  0 part
└─zd16p2    230:18   0  23.5G  0 part
zd32        230:32   0     7G  0 disk [SWAP]
```

The previous disk in the pool now has this odd numeric name, and the system notes that it was formerly /dev/sda2 (the remaining disk in the pool has since been renamed sda). Also, its status is FAULTED, not REMOVED.
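When ZFS can no longer open a vdev, `zpool status` typically shows the vdev's numeric GUID in place of its device name, with the last known path after "was". Copying that GUID out of the status output, instead of retyping a 20-digit number, avoids transcription errors. A minimal sketch, assuming the column layout shown above (name in the first field, state in the second); `faulted_vdevs` is a hypothetical helper name, not part of ZFS:

```shell
# faulted_vdevs: hypothetical helper, not a ZFS command.
# Reads `zpool status` output on stdin and prints the first field
# (device name or GUID) of every line whose second field is FAULTED.
faulted_vdevs() {
    awk '$2 == "FAULTED" { print $1 }'
}

# Usage on the live system:
#   zpool status rpool | faulted_vdevs
```

The pool and mirror lines are skipped because their state is DEGRADED, so only the missing disk's GUID is printed.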

I first tried a straight replace:

```
zpool replace rpool 1034241706894345042 /dev/sdb
cannot replace 1034241706894345042 with /dev/sdb: no such device in pool
```

The former drive IS shown in /dev/disk/by-id:

```
root@proxmox:/home/mikec# ls /dev/disk/by-id/
ata-ST2000DM008-2FR102_ZFL63932        ata-ST32000542AS_5XW2JWV2-part1  wwn-0x5000c5002f9110e4-part1  wwn-0x5000c500e437d5cb-part1
ata-ST2000DM008-2FR102_ZFL63932-part1  ata-ST32000542AS_5XW2JWV2-part2  wwn-0x5000c5002f9110e4-part2  wwn-0x5000c500e437d5cb-part9
ata-ST2000DM008-2FR102_ZFL63932-part9  ata-ST32000542AS_5XW2JWV2-part9  wwn-0x5000c5002f9110e4-part9
ata-ST32000542AS_5XW2JWV2              wwn-0x5000c5002f9110e4           wwn-0x5000c500e437d5cb
```

The new disk's serial number is ZFL63932.
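Every entry in /dev/disk/by-id is a symlink to a kernel device node, so resolving the links shows which physical disk each `ata-*` and `wwn-*` name refers to. Judging only by the partition suffixes in the listing, wwn-0x5000c5002f9110e4 appears to share the part1/part2/part9 layout of ata-ST32000542AS_5XW2JWV2, and wwn-0x5000c500e437d5cb the part1/part9 layout of the new ata-ST2000DM008-2FR102_ZFL63932; resolving the links would confirm or refute that. A minimal sketch using `readlink -f` (GNU coreutils); `show_by_id` is a hypothetical helper name:

```shell
# show_by_id: hypothetical helper, not a system command.
# Prints "name -> resolved target" for every symlink in a by-id directory
# (default: /dev/disk/by-id), skipping -partN entries so only whole-disk
# names are listed.
show_by_id() {
    local dir=${1:-/dev/disk/by-id}
    local link
    for link in "$dir"/*; do
        case $link in
            *-part[0-9]*) continue ;;   # skip partition links
        esac
        [ -L "$link" ] || continue      # skip non-symlinks / empty glob
        printf '%s -> %s\n' "${link##*/}" "$(readlink -f "$link")"
    done
}

# Usage on the live system:
#   show_by_id
```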

I tried zpool replace with the disk-id values (wwn-0x5000c5002f9110e4 and wwn-0x5000c500e437d5cb), but got the same 'no such device in pool' error.
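The first argument to `zpool replace` has to name a device that is already part of the pool, and the by-id names of the two physical disks do not appear as vdev names in the config above, which lists the surviving member as sda2 and the missing one by GUID. Note also that the GUID in the earlier command, 1034241706894345042, has 19 digits, while `zpool status` reports 20 digits, 10342417906894345042 (the 9 after 10342417 is missing). `zpool status -g` prints vdev GUIDs instead of device names, so a candidate argument can be checked against it first. A minimal sketch; `in_pool_config` is a hypothetical helper name:

```shell
# in_pool_config: hypothetical helper, not a ZFS command.
# Reads `zpool status` (or `zpool status -g`) output on stdin and succeeds
# only if $1 appears as an exact whitespace-separated field.
# Fields are compared as strings: a plain awk == on two numeric-looking
# 20-digit values would compare them as floating point and could match
# GUIDs that differ only in their last digits.
in_pool_config() {
    awk -v want="$1" '
        { for (i = 1; i <= NF; i++) if (($i "") == (want "")) found = 1 }
        END { exit !found }
    '
}

# Usage on the live system, before running zpool replace:
#   zpool status -g rpool | in_pool_config 10342417906894345042 && echo "found"
```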

What do I have to do to replace the old disk?

Do I have to format the new disk, or will zpool set it up properly? It currently has part1 and part9, but no part2, which the disk still in the pool does have.
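The Proxmox VE admin guide's procedure for replacing a failed bootable ZFS device copies the partition table from the healthy disk (`sgdisk <healthy> -R <new>`), randomizes the new disk's GUIDs (`sgdisk -G`), replaces only the ZFS partition with `zpool replace -f`, and then makes the new disk bootable. The sketch below only prints that sequence rather than running it, because `sgdisk -R` overwrites the target's partition table, including the existing part1/part9 on the new disk. Assumptions, none of which the output above confirms: the host boots via legacy BIOS/GRUB from the 1007K partition (so `grub-install` rather than `proxmox-boot-tool`), the new disk has at least as many sectors as the healthy one, the ZFS data sits in partition 2 as on sda, and both disks are passed as /dev/disk/by-id paths so the partition is `<disk>-partN`. `print_replace_plan` is a hypothetical helper:

```shell
# print_replace_plan: hypothetical helper. It only PRINTS commands and
# does not touch any disk. Arguments:
#   $1 healthy whole disk (by-id path)   $2 new whole disk (by-id path)
#   $3 pool name   $4 GUID of the missing vdev   $5 ZFS partition number
print_replace_plan() {
    local healthy=$1 new=$2 pool=$3 old_guid=$4 part=$5
    # 1. copy the healthy disk's GPT layout onto the new disk
    echo "sgdisk $healthy -R $new"
    # 2. give the copied table fresh disk and partition GUIDs
    echo "sgdisk -G $new"
    # 3. replace the missing vdev with the matching partition on the new disk
    echo "zpool replace -f $pool $old_guid $new-part$part"
    # 4. assumed legacy BIOS/GRUB boot: install GRUB on the new disk
    echo "grub-install $new"
}

# Usage (prints the plan only):
#   print_replace_plan /dev/disk/by-id/ata-ST32000542AS_5XW2JWV2 \
#       /dev/disk/by-id/ata-ST2000DM008-2FR102_ZFL63932 \
#       rpool 10342417906894345042 2
```

The `-part2` link for the new disk only appears once the kernel has re-read its partition table after step 1.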

Thanks.