I replaced one of the drives in my RAID 1 (mirror) rpool using the commands below (autoexpand is on for rpool):

sgdisk /dev/sdb -R /dev/nvme1n1

sgdisk -G /dev/nvme1n1

proxmox-boot-tool format /dev/nvme1n1p2 --force

proxmox-boot-tool init /dev/nvme1n1p2 --force

zpool replace -f rpool nvme-eui.0000000001000000e4d25c54db2a4c00-part3 nvme-eui.6479a7735000001f-part3
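For clarity, here is the same sequence as a small shell sketch with each device's role named. The variable names (HEALTHY_DISK, NEW_DISK, NEW_ESP, OLD_PART_ID, NEW_PART_ID), the `run` helper and the DRY_RUN switch are my own illustration, not part of any Proxmox tooling. With DRY_RUN=1 (the default) it only prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Sketch of the drive-replacement steps above, with each device's role named.
# Variable names, run() and DRY_RUN are illustrative assumptions, not Proxmox tooling.
set -euo pipefail

HEALTHY_DISK=/dev/sdb          # surviving mirror member whose partition table is copied
NEW_DISK=/dev/nvme1n1          # replacement drive
NEW_ESP="${NEW_DISK}p2"        # EFI system partition on the new drive
OLD_PART_ID=nvme-eui.0000000001000000e4d25c54db2a4c00-part3  # ZFS partition being replaced
NEW_PART_ID=nvme-eui.6479a7735000001f-part3                  # ZFS partition on the new drive
DRY_RUN="${DRY_RUN:-1}"        # 1 = print commands only, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

run sgdisk "$HEALTHY_DISK" -R "$NEW_DISK"        # copy the partition table to the new disk
run sgdisk -G "$NEW_DISK"                        # randomize disk/partition GUIDs on the copy
run proxmox-boot-tool format "$NEW_ESP" --force  # format the new ESP (as run above)
run proxmox-boot-tool init "$NEW_ESP" --force    # copy boot files to the ESP (as run above)
run zpool replace -f rpool "$OLD_PART_ID" "$NEW_PART_ID"  # resilver onto the new partition
```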

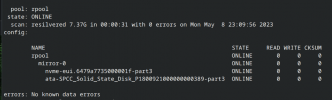

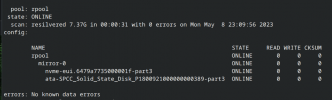

Everything seems to be in order; when I check `zpool status` I get:

Everything works fine until I remove the replaced drive. At that point I can no longer SSH in or reach the Proxmox web console. When I put the removed drive back in, everything works as it should.

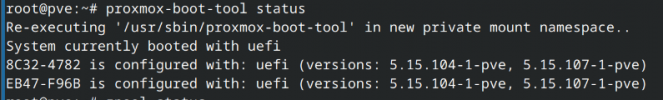

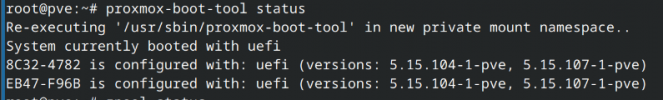

It looks like the problem shows up when I run `proxmox-boot-tool status`, which prints "Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace.."
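A related check is whether the new drive's ESP is actually registered with proxmox-boot-tool. Below is a minimal sketch of a hypothetical helper, `esp_registered`, assuming the registered ESP UUIDs are kept in `/etc/kernel/proxmox-boot-uuids` with one UUID at the start of each line (possibly followed by a mode word). It only reads that file; it does not change anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if an ESP UUID is listed in the proxmox-boot UUID file.
# Assumption: one UUID at the start of each line, optionally followed by whitespace and more text.
esp_registered() {
  local uuid="$1" uuid_file="${2:-/etc/kernel/proxmox-boot-uuids}"
  [ -n "$uuid" ] || return 1                 # empty UUID (e.g. blkid failed) never matches
  [ -r "$uuid_file" ] || return 1            # file missing or unreadable
  grep -qE "^${uuid}([[:space:]]|$)" "$uuid_file"
}

# Example usage on the new drive's ESP (needs root on the Proxmox host):
# uuid="$(blkid -s UUID -o value /dev/nvme1n1p2)"
# if esp_registered "$uuid"; then echo "registered"; else echo "NOT registered"; fi
```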

Any ideas where I messed up, or what can be done to get the rpool to work as it should?

Thanks for your help
