Just for fun, let's do the math for your setup and see what you should get with a 128k volblocksize.

Let's look at a 132k write.

That write lands in two volblocks. The first is an incompressible 128k block. The second is also a 128k block, but it holds only 4k of data and the rest is zeros, so LZ4 can compress it down to a 4k block.

Side note: This is one of the reasons why you should never disable compression, even if your data is not compressible.

OK, let's look at the first 128k block.

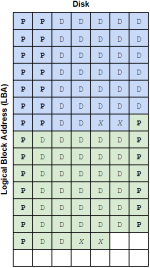

On an 8-wide RAIDZ2 with 4k sectors, a single stripe looks like this (P = parity, D = data):

P + P + D + D + D + D + D + D.

So a single stripe holds 6 * 4k = 24k of data.

128k / 24k = 5.333

So we use 5 full stripes to get 5 * 24k = 120k.

We still need another 8k.
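If you want to check the stripe arithmetic yourself, here is a small Python sketch. It assumes 4k sectors (ashift=12) and an 8-wide RAIDZ2; the variable names are just my own for illustration.

```python
# Stripe arithmetic for one 128k block on an 8-wide RAIDZ2.
# Assumes 4k sectors (ashift=12); all sizes are in k.
SECTOR_K = 4
WIDTH, NPARITY = 8, 2

data_per_stripe_k = (WIDTH - NPARITY) * SECTOR_K            # 6 * 4k = 24k of data per full stripe
full_stripes, remainder_k = divmod(128, data_per_stripe_k)  # whole stripes and what is left over

print(f"data per stripe: {data_per_stripe_k}k")
print(f"128k / {data_per_stripe_k}k = {128 / data_per_stripe_k:.3f}")
print(f"{full_stripes} full stripes = {full_stripes * data_per_stripe_k}k, remainder {remainder_k}k")
```

This prints 24k per stripe, 5.333, and 5 full stripes = 120k with 8k remaining.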

So we add one more, partial stripe with 2 data sectors:

P + P + D + D.

In total we now have 5 * 8 sectors + 4 sectors = 44 sectors. RAIDZ2 rounds every allocation up to a multiple of parity + 1 = 3 sectors, and 44 is not a multiple of 3, so we add one padding sector to get 45 sectors.
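The padding rule can be written as a small function. This is a simplified model of RAIDZ allocation (my own way of expressing it, not code taken from ZFS): every row of up to width - parity data sectors gets its parity sectors, and the total is rounded up to a multiple of parity + 1.

```python
import math

def raidz_alloc_sectors(data_sectors: int, width: int = 8, nparity: int = 2) -> int:
    """Sectors allocated for one block on RAIDZ (simplified model, not ZFS source)."""
    data_cols = width - nparity                  # data sectors per full stripe
    rows = math.ceil(data_sectors / data_cols)   # the last row may be partial
    total = data_sectors + rows * nparity        # each row carries nparity parity sectors
    # Round up to a multiple of (nparity + 1); the difference is padding.
    return math.ceil(total / (nparity + 1)) * (nparity + 1)

# An incompressible 128k block is 32 data sectors of 4k.
print(raidz_alloc_sectors(32))  # 45 (44 sectors + 1 padding)
```

The same function gives 3 sectors for a single 4k data sector (P + P + D), which needs no padding.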

The second block contains 4k of real data and the rest is zeros. It is still a 128k volblock, but LZ4 compresses it down to 4k of actual data to write.

That needs P + P + D, another 3 sectors (already a multiple of 3, so no padding).

We now have 48 sectors to store 132k of data (128k + 4k), or 48 * 4k = 192k of raw space.

132 / 192 * 100 = 68.75%
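Here is the whole 132k write as a Python sketch. It reuses the simplified allocation model (an assumption, not ZFS code), assumes 4k sectors, and assumes LZ4 gets the second block down to exactly one 4k sector as in the example. Note that the write holds 132k of data in total (128k + 4k).

```python
import math

SECTOR_K = 4  # assumed 4k sectors (ashift=12)

def raidz_alloc_sectors(data_sectors: int, width: int = 8, nparity: int = 2) -> int:
    """Simplified RAIDZ allocation model: data + parity, padded to a multiple of nparity + 1."""
    rows = math.ceil(data_sectors / (width - nparity))
    total = data_sectors + rows * nparity
    return math.ceil(total / (nparity + 1)) * (nparity + 1)

first = raidz_alloc_sectors(128 // SECTOR_K)  # incompressible 128k block -> 45 sectors
second = raidz_alloc_sectors(4 // SECTOR_K)   # block LZ4-compressed to 4k -> 3 sectors
raw_k = (first + second) * SECTOR_K           # 48 sectors * 4k = 192k of raw space
data_k = 128 + 4                              # 132k of actual data written

print(first + second, raw_k, f"{data_k / raw_k:.2%}")  # 48 192 68.75%
```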

Now, I don't know what happens at the OS level if you write another 132k. I think it should make use of the mostly empty second volblock from the previous write, but that is way above my pay grade.

So, for simplicity, if you really want to run a test, I would use a 16k volblocksize and just fill it with random data.

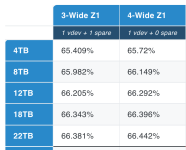

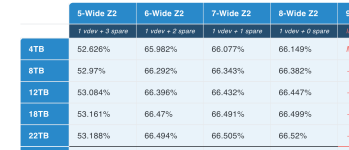

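For an 8-wide RAIDZ2 you will get 66.66% instead of 75%: a 16k block is 4 data sectors + 2 parity sectors = 6 sectors (already a multiple of 3, so no padding), and 4 / 6 = 66.66%, while a full stripe would give 6 / 8 = 75%.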
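To compare volblock sizes for the random-data test, here is a short Python sketch using the same simplified allocation model (my assumption, not ZFS code), with 4k sectors assumed and incompressible data so compression does not help.

```python
import math

SECTOR_K = 4           # assumed 4k sectors (ashift=12)
WIDTH, NPARITY = 8, 2  # 8-wide RAIDZ2

def raidz_alloc_sectors(data_sectors: int) -> int:
    """Simplified RAIDZ allocation model: data + parity, padded to a multiple of NPARITY + 1."""
    rows = math.ceil(data_sectors / (WIDTH - NPARITY))
    total = data_sectors + rows * NPARITY
    return math.ceil(total / (NPARITY + 1)) * (NPARITY + 1)

def efficiency(volblock_k: int) -> float:
    """Share of raw space that holds data for one incompressible (e.g. random) volblock."""
    return volblock_k / (raidz_alloc_sectors(volblock_k // SECTOR_K) * SECTOR_K)

print(f"ideal: {(WIDTH - NPARITY) / WIDTH:.2%}")  # 75.00%
for volblock_k in (16, 128):
    print(f"{volblock_k}k volblock: {efficiency(volblock_k):.2%}")  # 16k -> 66.67%, 128k -> 71.11%
```

The 16k result is exactly 2/3 (the 66.66% above, rounded here to 66.67%), and a lone incompressible 128k block lands at 128 / 180 = 71.11%.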
