Hi all, in the past we had problems with ZFS performance dropping after a few minutes of sustained transfer. After a long search, we decided to set sync=disabled on our pool, and that solved the problem for a long time.

After the latest upgrade of Proxmox to ZFS 2.2.0, exactly the same problem came back. We ran zpool upgrade -a after the update. If we start a large transfer to a hosted Ubuntu VM, it starts at the full 1 GBit/s, and after a few minutes it drops to at most 250 MBit/s and stays there. We checked whether ZFS sync is still disabled, and it is.

Our pool looks like this:

                                             capacity     operations      bandwidth
pool                                        alloc   free   read  write   read  write
rpool                                        124T  37.5T     13    430   170K  71.5M
  raidz1-0                                  73.8T  6.23T      4    184  30.4K  33.9M
    ata-WDC_WUH722222ALE6L4_2GG2RTXF-part3      -      -      1     47  9.60K  8.49M
    ata-WDC_WUH722222ALE6L4_2GG4EUUD-part3      -      -      1     42  6.67K  8.49M
    ata-WDC_WUH722222ALE6L4_2GG1WZVD-part3      -      -      1     46  7.73K  8.49M
    ata-WDC_WUH722222ALE6L4_2GG1DBJD-part3      -      -      0     47  6.40K  8.49M
  raidz1-1                                  49.9T  30.2T      7    176  54.7K  35.9M
    ata-WDC_WUH722222ALE6L4_2GG4GTJD-part3      -      -      1     46  16.0K  8.97M
    ata-WDC_WUH722222ALE6L4_2GG4BD8D-part3      -      -      1     50  12.0K  8.97M
    ata-WDC_WUH722222ALE6L4_2GG4B11D-part3      -      -      1     42  13.9K  8.97M
    ata-WDC_WUH722222ALE6L4_2GG42ZZD-part3      -      -      1     36  12.8K  8.97M
special                                         -      -      -      -      -      -
  mirror-3                                   249G  1.14T      1     69  84.8K  1.63M
    nvme-TS2TMTE220S_H632680216-part2           -      -      0     34  38.4K   837K
    nvme-TS2TMTE220S_H632680217-part2           -      -      0     34  46.4K   837K
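
As a rough sanity check on the numbers in the listing above, here is a small Python sketch that works out how full each raidz1 vdev is. The alloc/free values are copied from the listing; the function names are made up for illustration, and the result is only arithmetic on those figures, not a diagnosis of the slowdown.

```python
# Allocated and free space per top-level vdev, in the "T" units shown
# in the pool listing above (values copied from the post).
VDEVS = {
    "raidz1-0": (73.8, 6.23),
    "raidz1-1": (49.9, 30.2),
}


def fill_percent(alloc: float, free: float) -> float:
    """Return the allocated share of a vdev's space, in percent."""
    return 100.0 * alloc / (alloc + free)


def fill_report(vdevs: dict) -> dict:
    """Map each vdev name to its fill level, rounded to one decimal place."""
    return {name: round(fill_percent(a, f), 1) for name, (a, f) in vdevs.items()}


if __name__ == "__main__":
    for name, pct in fill_report(VDEVS).items():
        print(f"{name}: {pct}% allocated")
```

With the figures above, raidz1-0 comes out at roughly 92% allocated and raidz1-1 at roughly 62%, so the two vdevs are filled quite unevenly even though the pool as a whole reports 76% capacity.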

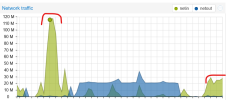

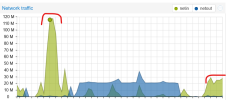

In this screenshot you can see the performance over a week. Last week everything was OK, with the full 1 GBit/s transfer speed. Now we get only 250 MBit/s after a few minutes. After rebooting Proxmox or the Ubuntu VM running on it, the transfer starts again at the full 1 GBit/s for approximately 5 minutes and then drops back to 250 MBit/s.
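
To make these network rates easier to compare with the per-device bandwidth figures in the pool listing, which are in bytes, here is a minimal Python sketch of the unit conversion. It assumes decimal megabits and ignores protocol overhead; the helper name is hypothetical.

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8.0


if __name__ == "__main__":
    full_rate = mbit_to_mbyte(1000)  # full 1 GBit/s link speed
    slow_rate = mbit_to_mbyte(250)   # rate observed after the slowdown
    print(f"full: {full_rate} MB/s, slowed: {slow_rate} MB/s")
```

So the full rate corresponds to about 125 MB/s of payload at most, and the slowed-down rate to about 31 MB/s.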

The Ubuntu 22 VM is running on the latest QEMU, 8.1.

Our package versions are:

proxmox-ve: 8.1.0 (running kernel: 6.5.11-4-pve)

pve-manager: 8.1.3 (running version: 8.1.3/b46aac3b42da5d15)

proxmox-kernel-helper: 8.0.9

pve-kernel-5.15: 7.4-6

proxmox-kernel-6.5.11-4-pve-signed: 6.5.11-4

proxmox-kernel-6.5: 6.5.11-4

proxmox-kernel-6.2.16-19-pve: 6.2.16-19

proxmox-kernel-6.2: 6.2.16-19

proxmox-kernel-6.2.16-18-pve: 6.2.16-18

proxmox-kernel-6.2.16-15-pve: 6.2.16-15

proxmox-kernel-6.2.16-12-pve: 6.2.16-12

pve-kernel-5.15.116-1-pve: 5.15.116-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 16.2.11+ds-2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx7

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.1

libpve-access-control: 8.0.7

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.1.0

libpve-guest-common-perl: 5.0.6

libpve-http-server-perl: 5.0.5

libpve-network-perl: 0.9.4

libpve-rs-perl: 0.8.7

libpve-storage-perl: 8.0.5

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.0.4-1

proxmox-backup-file-restore: 3.0.4-1

proxmox-kernel-helper: 8.0.9

proxmox-mail-forward: 0.2.2

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.2

proxmox-widget-toolkit: 4.1.3

pve-cluster: 8.0.5

pve-container: 5.0.8

pve-docs: 8.1.3

pve-edk2-firmware: 4.2023.08-2

pve-firewall: 5.0.3

pve-firmware: 3.9-1

pve-ha-manager: 4.0.3

pve-i18n: 3.1.2

pve-qemu-kvm: 8.1.2-4

pve-xtermjs: 5.3.0-2

qemu-server: 8.0.10

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.0-pve3

root@pvezfsdus:~# zpool get all

NAME PROPERTY VALUE SOURCE

rpool size 161T -

rpool capacity 76% -

rpool altroot - default

rpool health ONLINE -

rpool guid 1845857329845318629 -

rpool version - default

rpool bootfs rpool/ROOT/pve-1 local

rpool delegation on default

rpool autoreplace off default

rpool cachefile - default

rpool failmode wait default

rpool listsnapshots on local

rpool autoexpand off default

rpool dedupratio 1.00x -

rpool free 37.5T -

rpool allocated 124T -

rpool readonly off -

rpool ashift 12 local

rpool comment - default

rpool expandsize - -

rpool freeing 0 -

rpool fragmentation 13% -

rpool leaked 0 -

rpool multihost off default

rpool checkpoint - -

rpool load_guid 6890186714854732407 -

rpool autotrim off default

rpool compatibility off default

rpool bcloneused 0 -

rpool bclonesaved 0 -

rpool bcloneratio 1.00x -

rpool feature@async_destroy enabled local

rpool feature@empty_bpobj active local

rpool feature@lz4_compress active local

rpool feature@multi_vdev_crash_dump enabled local

rpool feature@spacemap_histogram active local

rpool feature@enabled_txg active local

rpool feature@hole_birth active local

rpool feature@extensible_dataset active local

rpool feature@embedded_data active local

rpool feature@bookmarks enabled local

rpool feature@filesystem_limits enabled local

rpool feature@large_blocks enabled local

rpool feature@large_dnode enabled local

rpool feature@sha512 enabled local

rpool feature@skein enabled local

rpool feature@edonr enabled local

rpool feature@userobj_accounting active local

rpool feature@encryption active local

rpool feature@project_quota active local

rpool feature@device_removal enabled local

rpool feature@obsolete_counts enabled local

rpool feature@zpool_checkpoint enabled local

rpool feature@spacemap_v2 active local

rpool feature@allocation_classes active local

rpool feature@resilver_defer enabled local

rpool feature@bookmark_v2 enabled local

rpool feature@redaction_bookmarks enabled local

rpool feature@redacted_datasets enabled local

rpool feature@bookmark_written enabled local

rpool feature@log_spacemap active local

rpool feature@livelist enabled local

rpool feature@device_rebuild enabled local

rpool feature@zstd_compress enabled local

rpool feature@draid enabled local

rpool feature@zilsaxattr enabled local

rpool feature@head_errlog active local

rpool feature@blake3 enabled local

rpool feature@block_cloning enabled local

rpool feature@vdev_zaps_v2 active local

root@pvezfsdus:~# zfs get all rpool

NAME PROPERTY VALUE SOURCE

rpool type filesystem -

rpool creation Tue Nov 1 17:27 2022 -

rpool used 90.1T -

rpool available 26.0T -

rpool referenced 151K -

rpool compressratio 1.13x -

rpool mounted yes -

rpool quota none default

rpool reservation none default

rpool recordsize 128K default

rpool mountpoint /rpool default

rpool sharenfs off default

rpool checksum on default

rpool compression lz4 local

rpool atime on local

rpool devices on default

rpool exec on default

rpool setuid on default

rpool readonly off default

rpool zoned off default

rpool snapdir hidden default

rpool aclmode discard default

rpool aclinherit restricted default

rpool createtxg 1 -

rpool canmount on default

rpool xattr on default

rpool copies 1 default

rpool version 5 -

rpool utf8only off -

rpool normalization none -

rpool casesensitivity sensitive -

rpool vscan off default

rpool nbmand off default

rpool sharesmb off default

rpool refquota none default

rpool refreservation none default

rpool guid 12661903171730691671 -

rpool primarycache all default

rpool secondarycache all default

rpool usedbysnapshots 0B -

rpool usedbydataset 151K -

rpool usedbychildren 90.1T -

rpool usedbyrefreservation 0B -

rpool logbias latency default

rpool objsetid 54 -

rpool dedup off default

rpool mlslabel none default

rpool sync disabled local

rpool dnodesize legacy default

rpool refcompressratio 1.00x -

rpool written 151K -

rpool logicalused 93.7T -

rpool logicalreferenced 46K -

rpool volmode default default

rpool filesystem_limit none default

rpool snapshot_limit none default

rpool filesystem_count none default

rpool snapshot_count none default

rpool snapdev hidden default

rpool acltype off default

rpool context none default

rpool fscontext none default

rpool defcontext none default

rpool rootcontext none default

rpool relatime on local

rpool redundant_metadata all default

rpool overlay on default

rpool encryption off default

rpool keylocation none default

rpool keyformat none default

rpool pbkdf2iters 0 default

rpool special_small_blocks 0 default

Does anyone have an idea what's happening there?
