> Please try to wait a bit until all the old replication jobs have terminated (again check with ps).

I updated the nodes to 7.1-6, but the problem is the same:

2021-11-25 11:43:00 692013-1: start replication job

2021-11-25 11:43:00 692013-1: guest => VM 692013, running => 11312

2021-11-25 11:43:00 692013-1: volumes => local-zfs:vm-692013-disk-0

2021-11-25 11:43:01 692013-1: delete stale replication snapshot '__replicate_692013-1_1637836747__' on local-zfs:vm-692013-disk-0

2021-11-25 11:43:01 692013-1: delete stale replication snapshot error: zfs error: cannot destroy snapshot rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__: dataset is busy

2021-11-25 11:43:02 692013-1: freeze guest filesystem

2021-11-25 11:43:02 692013-1: create snapshot '__replicate_692013-1_1637836980__' on local-zfs:vm-692013-disk-0

2021-11-25 11:43:02 692013-1: thaw guest filesystem

2021-11-25 11:43:02 692013-1: using insecure transmission, rate limit: 10 MByte/s

2021-11-25 11:43:02 692013-1: incremental sync 'local-zfs:vm-692013-disk-0' (__replicate_692013-1_1637709304__ => __replicate_692013-1_1637836980__)

2021-11-25 11:43:02 692013-1: using a bandwidth limit of 10000000 bps for transferring 'local-zfs:vm-692013-disk-0'

2021-11-25 11:43:05 692013-1: send from @__replicate_692013-1_1637709304__ to rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__ estimated size is 27.3G

2021-11-25 11:43:05 692013-1: send from @__replicate_692013-1_1637836747__ to rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836980__ estimated size is 859K

2021-11-25 11:43:05 692013-1: total estimated size is 27.3G

2021-11-25 11:43:06 692013-1: TIME SENT SNAPSHOT rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:06 692013-1: 11:43:06 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:07 692013-1: 11:43:07 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:08 692013-1: 11:43:08 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:09 692013-1: 11:43:09 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:10 692013-1: 11:43:10 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:11 692013-1: 11:43:11 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:12 692013-1: 11:43:12 619K rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__

2021-11-25 11:43:12 692013-1: 586936 B 573.2 KB 7.97 s 73612 B/s 71.89 KB/s

2021-11-25 11:43:12 692013-1: write: Broken pipe

2021-11-25 11:43:12 692013-1: warning: cannot send 'rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836747__': signal received

2021-11-25 11:43:12 692013-1: warning: cannot send 'rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836980__': Broken pipe

2021-11-25 11:43:12 692013-1: cannot send 'rpool/data/vm-692013-disk-0': I/O error

2021-11-25 11:43:12 692013-1: command 'zfs send -Rpv -I __replicate_692013-1_1637709304__ -- rpool/data/vm-692013-disk-0@__replicate_692013-1_1637836980__' failed: exit code 1

2021-11-25 11:43:12 692013-1: [ve-ara-22] cannot receive incremental stream: dataset is busy

2021-11-25 11:43:12 692013-1: [ve-ara-22] command 'zfs recv -F -- rpool/data/vm-692013-disk-0' failed: exit code 1

2021-11-25 11:43:12 692013-1: delete previous replication snapshot '__replicate_692013-1_1637836980__' on local-zfs:vm-692013-disk-0

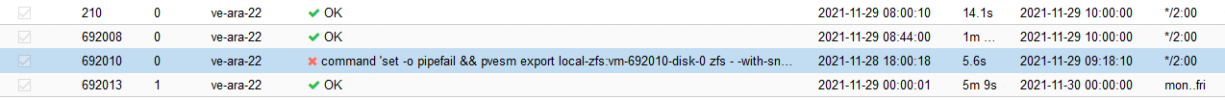

2021-11-25 11:43:13 692013-1: end replication job with error: command 'set -o pipefail && pvesm export local-zfs:vm-692013-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_692013-1_1637836980__ -base __replicate_692013-1_1637709304__ | /usr/bin/cstream -t 10000000' failed: exit code 2
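One way to follow the advice of checking with ps for leftover replication processes is to filter the process list for the commands that appear in the log above. This is only a minimal sketch: the pattern list (`zfs send`, `zfs recv`, `pvesm export`, `cstream`) is taken from the log lines and is an assumption, and the function name `find_replication_procs` is hypothetical. It would need to be run on both the source and the target node.

```python
import re
import subprocess

# Command fragments copied from the replication log; this list is an
# assumption and may need extending for other setups.
PATTERNS = re.compile(r"\b(zfs (send|recv)|pvesm export|cstream)\b")


def find_replication_procs(ps_output: str) -> list[tuple[int, str]]:
    """Return (pid, command line) pairs that look like replication transfers."""
    matches = []
    for line in ps_output.splitlines():
        parts = line.strip().split(None, 1)
        # Skip the header line and anything that does not start with a PID.
        if len(parts) != 2 or not parts[0].isdigit():
            continue
        pid, args = int(parts[0]), parts[1]
        if PATTERNS.search(args):
            matches.append((pid, args))
    return matches


if __name__ == "__main__":
    # "ps -eo pid,args" prints one process per line: PID, then the command line.
    out = subprocess.run(
        ["ps", "-eo", "pid,args"], capture_output=True, text=True, check=True
    ).stdout
    for pid, args in find_replication_procs(out):
        print(pid, args)
```

An empty result on both nodes would suggest that no old transfer is still running; it does not by itself explain why the dataset is reported as busy.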

> Hmm, seems like that one had an invalid replication state and tried to do a full sync. I'm afraid you'll have to remove and re-create that job (or remove the volume on the target).

And for the second VM:

2021-11-25 11:47:07 692008-0: start replication job

2021-11-25 11:47:07 692008-0: guest => VM 692008, running => 7037

2021-11-25 11:47:07 692008-0: volumes => local-zfs:vm-692008-disk-0

2021-11-25 11:47:09 692008-0: freeze guest filesystem

2021-11-25 11:47:09 692008-0: create snapshot '__replicate_692008-0_1637837227__' on local-zfs:vm-692008-disk-0

2021-11-25 11:47:09 692008-0: thaw guest filesystem

2021-11-25 11:47:09 692008-0: using insecure transmission, rate limit: 10 MByte/s

2021-11-25 11:47:09 692008-0: full sync 'local-zfs:vm-692008-disk-0' (__replicate_692008-0_1637837227__)

2021-11-25 11:47:09 692008-0: using a bandwidth limit of 10000000 bps for transferring 'local-zfs:vm-692008-disk-0'

2021-11-25 11:47:12 692008-0: full send of rpool/data/vm-692008-disk-0@__replicate_692008-0_1637837227__ estimated size is 86.7G

2021-11-25 11:47:12 692008-0: total estimated size is 86.7G

2021-11-25 11:47:12 692008-0: 1180 B 1.2 KB 0.71 s 1651 B/s 1.61 KB/s

2021-11-25 11:47:12 692008-0: write: Broken pipe

2021-11-25 11:47:12 692008-0: warning: cannot send 'rpool/data/vm-692008-disk-0@__replicate_692008-0_1637837227__': signal received

2021-11-25 11:47:12 692008-0: cannot send 'rpool/data/vm-692008-disk-0': I/O error

2021-11-25 11:47:12 692008-0: command 'zfs send -Rpv -- rpool/data/vm-692008-disk-0@__replicate_692008-0_1637837227__' failed: exit code 1

2021-11-25 11:47:12 692008-0: [ve-ara-22] volume 'rpool/data/vm-692008-disk-0' already exists

2021-11-25 11:47:12 692008-0: delete previous replication snapshot '__replicate_692008-0_1637837227__' on local-zfs:vm-692008-disk-0

2021-11-25 11:47:12 692008-0: end replication job with error: command 'set -o pipefail && pvesm export local-zfs:vm-692008-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_692008-0_1637837227__ | /usr/bin/cstream -t 10000000' failed: exit code 2
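To compare the two runs at a glance, the logs can be reduced to the few lines that matter: the sync type, the messages relayed from the target node, and whether the job ended with an error. The following is a rough sketch that assumes the line layout seen above (`date time job-id: message`, with target-side lines prefixed by `[node]`); the function name `summarize` and the returned keys are hypothetical.

```python
import re

# "2021-11-25 11:47:09 692008-0: message" -> timestamp, job id, message
LINE = re.compile(r"^(\d{4}-\d\d-\d\d \d\d:\d\d:\d\d) (\S+): (.*)$")


def summarize(log_text: str) -> dict:
    """Extract job id, sync type, target-side messages, and failure flag."""
    summary = {"job": None, "sync": None, "target_errors": [], "failed": False}
    for raw in log_text.splitlines():
        m = LINE.match(raw.strip())
        if not m:
            continue  # blank lines and anything that is not a log entry
        _, job, msg = m.groups()
        summary["job"] = summary["job"] or job
        sync = re.match(r"(full|incremental) sync ", msg)
        if sync:
            summary["sync"] = sync.group(1)
        elif msg.startswith("["):
            # Lines relayed from the target node, e.g. "[ve-ara-22] ...".
            node, _, text = msg.partition("] ")
            # Keep the actual error text, skip the generic "command failed" echo.
            if "failed: exit code" not in text:
                summary["target_errors"].append((node[1:], text))
        elif msg.startswith("end replication job with error"):
            summary["failed"] = True
    return summary
```

Applied to the two logs above, this would report an incremental sync with "cannot receive incremental stream: dataset is busy" for job 692013-1, and a full sync with "volume 'rpool/data/vm-692008-disk-0' already exists" for job 692008-0.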