Hello

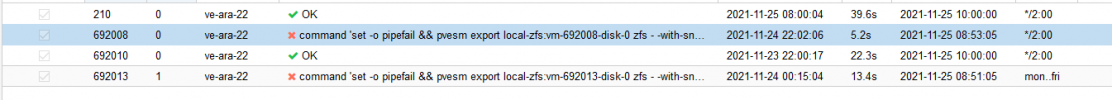

We are having difficulties with ZFS replication between two hosts.

Some replication jobs randomly fail because Proxmox tries to send a full sync even though it should be incremental.

We have already tried deleting the replication job and the disk on the destination and then re-creating the replication job, without success (it does the first full sync and, after some time, decides to do another full sync).

Code:

2021-11-22 11:17:02 1039-0: start replication job

2021-11-22 11:17:02 1039-0: guest => VM 1039, running => 2693527

2021-11-22 11:17:02 1039-0: volumes => local-zfs:vm-1039-disk-0

2021-11-22 11:17:03 1039-0: delete stale replication snapshot '__replicate_1039-0_1637575917__' on local-zfs:vm-1039-disk-0

2021-11-22 11:17:04 1039-0: (remote_prepare_local_job) delete stale replication snapshot '__replicate_1039-0_1637575917__' on local-zfs:vm-1039-disk-0

2021-11-22 11:17:04 1039-0: freeze guest filesystem

2021-11-22 11:17:04 1039-0: create snapshot '__replicate_1039-0_1637576222__' on local-zfs:vm-1039-disk-0

2021-11-22 11:17:04 1039-0: thaw guest filesystem

2021-11-22 11:17:04 1039-0: using insecure transmission, rate limit: none

2021-11-22 11:17:04 1039-0: full sync 'local-zfs:vm-1039-disk-0' (__replicate_1039-0_1637576222__)

2021-11-22 11:17:07 1039-0: full send of local-zfs/vm-1039-disk-0@__replicate_1039-0_1637576222__ estimated size is 2.31G

2021-11-22 11:17:07 1039-0: total estimated size is 2.31G

2021-11-22 11:17:07 1039-0: warning: cannot send 'local-zfs/vm-1039-disk-0@__replicate_1039-0_1637576222__': Broken pipe

2021-11-22 11:17:07 1039-0: cannot send 'local-zfs/vm-1039-disk-0': I/O error

2021-11-22 11:17:07 1039-0: command 'zfs send -Rpv -- local-zfs/vm-1039-disk-0@__replicate_1039-0_1637576222__' failed: exit code 1

2021-11-22 11:17:07 1039-0: [pve-int-02] volume 'local-zfs/vm-1039-disk-0' already exists

2021-11-22 11:17:07 1039-0: delete previous replication snapshot '__replicate_1039-0_1637576222__' on local-zfs:vm-1039-disk-0

2021-11-22 11:17:07 1039-0: end replication job with error: command 'set -o pipefail && pvesm export local-zfs:vm-1039-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_1039-0_1637576222__' failed: exit code 1

The two hosts are on the same version:

proxmox-ve: 7.1-1 (running kernel: 5.13.19-1-pve)

pve-manager: 7.1-4 (running version: 7.1-4/ca457116)

pve-kernel-5.13: 7.1-4

pve-kernel-helper: 7.1-4

pve-kernel-5.11: 7.0-10

pve-kernel-5.13.19-1-pve: 5.13.19-2

pve-kernel-5.11.22-7-pve: 5.11.22-12

pve-kernel-5.11.22-4-pve: 5.11.22-9

pve-kernel-5.11.22-1-pve: 5.11.22-2

ceph-fuse: 15.2.13-pve1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.0

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.1-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.0-14

libpve-guest-common-perl: 4.0-3

libpve-http-server-perl: 4.0-3

libpve-storage-perl: 7.0-15

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.9-4

lxcfs: 4.0.8-pve2

novnc-pve: 1.2.0-3

openvswitch-switch: 2.15.0+ds1-2

proxmox-backup-client: 2.0.14-1

proxmox-backup-file-restore: 2.0.14-1

proxmox-mini-journalreader: 1.2-1

proxmox-widget-toolkit: 3.4-2

pve-cluster: 7.1-2

pve-container: 4.1-2

pve-docs: 7.1-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.3-3

pve-ha-manager: 3.3-1

pve-i18n: 2.6-1

pve-qemu-kvm: 6.1.0-2

pve-xtermjs: 4.12.0-1

pve-zsync: 2.2

qemu-server: 7.1-3

smartmontools: 7.2-1

spiceterm: 3.2-2

swtpm: 0.7.0~rc1+2

vncterm: 1.7-1

zfsutils-linux: 2.1.1-pve3
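
To make it easier to see when a job falls back to a full sync, the replication task log above could be scanned with a short script. This is only a rough sketch: the function name `classify_sync` is hypothetical, and the "incremental sync" wording in the pattern is an assumption, since only the "full sync" line appears in the log posted here.

```python
import re

# Matches lines such as:
#   2021-11-22 11:17:04 1039-0: full sync 'local-zfs:vm-1039-disk-0' (...)
# Assumption: incremental runs log "incremental sync" in the same position.
SYNC_RE = re.compile(r"^(\S+ \S+) (\S+): (full|incremental) sync '([^']+)'")


def classify_sync(log_text):
    """Return (timestamp, job_id, mode, volume) for every sync line in a job log."""
    results = []
    for line in log_text.splitlines():
        match = SYNC_RE.match(line.strip())
        if match:
            results.append(match.groups())
    return results


# Two lines copied from the log above.
sample = """\
2021-11-22 11:17:04 1039-0: using insecure transmission, rate limit: none
2021-11-22 11:17:04 1039-0: full sync 'local-zfs:vm-1039-disk-0' (__replicate_1039-0_1637576222__)
"""

for timestamp, job_id, mode, volume in classify_sync(sample):
    print(timestamp, job_id, mode, volume)
```

Running this over the saved logs of several runs would show at which timestamps the mode switches to full, which might help correlate the failures with other events on the hosts.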

Don't hesitate to ask for any further information that could help.
