Hello, I have two identical disks in a ZFS RAID 1 (mirror) pool:

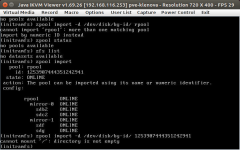

    root@pve:~# zpool status
      pool: rpool
     state: ONLINE
      scan: resilvered 2,21M in 0h0m with 0 errors on Sat Jul 16 20:32:11 2016
    config:

        NAME                                                STATE     READ WRITE CKSUM
        rpool                                               ONLINE       0     0     0
          mirror-0                                          ONLINE       0     0     0
            ata-WDC_WD10EFRX-68JCSN0_WD-WMC1U6546808-part2  ONLINE       0     0     0
            ata-WDC_WD10EFRX-68PJCN0_WD-WCC4J2021886-part2  ONLINE       0     0     0

I would like to add two more identical disks and convert the pool to RAID 10 (striped mirrors). Is it possible to do this without reinstalling the whole PVE? Thank you.
