Now we are getting somewhere.

I probably missed the definition of ZFS raid10. If it is a stripe of mirrors, then that automatically answers my question as well.

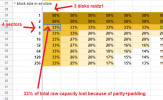

So with 8 disks created using "raid10" in the WebUI your pool would look like this:

So it is the Method 2 I described then, OK.

A striped mirror (the ZFS term for raid10) is multiple mirrors striped together. The mirrors don't need to be 2 disks; you can also have 3 or 4 disks in a mirror, so that 2 or 3 disks of the mirror might fail without losing data.

I am trying to solve the problem of 2 disks of the same mirror failing by combining disks of different brands (mostly HGST and WD or Seagate) in each mirror. What I mean is placing them in the server so that odd positions are covered by one brand and even positions by another.

Having, for instance, 3 mirrored drives is even better (assuming you can afford the storage loss), but I don't think you can accomplish that from the GUI. Am I right? It seems that I have to create the mirrors myself. Then, can the last step, striping the mirrors, be done from the GUI? Or is there no need, and since I started the procedure from the CLI it would be wise to finish it there?
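To make the layout I have in mind concrete, here is a minimal sketch that only builds (and prints) a `zpool create` command line for a stripe of mirrors, pairing one disk of each brand per mirror. The pool name `tank`, the device paths and the helper `build_striped_mirror_cmd` are made up for illustration; it does not run anything against the system.

```python
from typing import List


def build_striped_mirror_cmd(pool: str, mirrors: List[List[str]], ashift: int = 9) -> str:
    """Return a 'zpool create' command string for a stripe of mirrors.

    Each inner list is one mirror vdev (2 disks, or 3+ for an n-way mirror).
    The command is only assembled as text, never executed.
    """
    if not mirrors:
        raise ValueError("need at least one mirror")
    parts = ["zpool", "create", "-o", f"ashift={ashift}", pool]
    for disks in mirrors:
        if len(disks) < 2:
            raise ValueError("a mirror needs at least 2 disks")
        parts.append("mirror")
        parts.extend(disks)
    return " ".join(parts)


# Hypothetical device paths: odd bays hold brand A, even bays hold brand B.
brand_a = [f"/dev/disk/by-id/brandA-{i}" for i in range(1, 5)]
brand_b = [f"/dev/disk/by-id/brandB-{i}" for i in range(1, 5)]

# One disk of each brand per mirror, 4 mirrors striped together.
pairs = [[a, b] for a, b in zip(brand_a, brand_b)]
cmd = build_striped_mirror_cmd("tank", pairs)
print(cmd)
```

A 3-way mirror would just be an inner list with three devices. As far as I understand, listing several `mirror` groups in one `zpool create` is what stripes them, so there would be no separate striping step.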

If you use it in the installer, keep in mind that not the whole disk will be used for ZFS. Only one of the many partitions of each disk will be used for the pool.

Oh, new info here. I didn't know that. Why? So you are suggesting creating the raid from the CLI, right? Are any extra options necessary during creation to avoid having only one partition of each disk used?

You wrote (sorry for presenting it this way, but after editing the post the forum wouldn't let me use the quote reply):

<<<<<<<<<<<<Without that every read operation will cause an additional write operation to edit the metadata. So you get better IOPS, better throughput and your SSDs will live longer. Only negative effect would be that your access time isn't that accurate but it should be accurate enough for nearly any application.>>>>>>>>>>>>>>

A little research on this option brought up `relatime`. It only updates the `atime` when the previous `atime` is older than `mtime` (modification time) or `ctime` (change time), or when the `atime` is older than 24h (based on the setting). Does ZFS use this kernel feature to calculate times when using snapshots, meaning it shouldn't be disabled?
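As I understand the rule, it can be sketched like this. This is only my reading of the description above, modeled on how Linux relatime is usually explained, not code taken from ZFS; the function name `relatime_needs_update` is my own, and treating equal timestamps as "needs update" is an assumption.

```python
from datetime import datetime, timedelta


def relatime_needs_update(atime: datetime, mtime: datetime, ctime: datetime,
                          now: datetime,
                          max_age: timedelta = timedelta(hours=24)) -> bool:
    """Decide whether a read should rewrite atime under relatime-style rules."""
    # File was modified at or after the last recorded access.
    if mtime >= atime:
        return True
    # File metadata changed at or after the last recorded access.
    if ctime >= atime:
        return True
    # Recorded access time is older than the configured window (24h here).
    if now - atime >= max_age:
        return True
    return False


now = datetime(2024, 1, 2, 12, 0, 0)
recent_access = now - timedelta(hours=1)
old_change = now - timedelta(days=3)

# Accessed an hour ago, unchanged for days: no atime write needed.
print(relatime_needs_update(recent_access, old_change, old_change, now))
```

So with relatime most reads of an unchanged file would skip the metadata write, while with atime=off no read would trigger one at all.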

Since that option is checked with the zfs command and not zpool, does that mean relatime is an option you set for each individual dataset and not for the pool in which the datasets reside? Or can you set it for both? Of course, there is also the possibility that options set on the pool are automatically inherited by the datasets below it.

The most important question, though, is: can you set that option afterwards, when the pool/dataset already has data inside? Won't that affect anything?

Currently, none of the Proxmox nodes I have created has that option enabled. Since you brought it up, though, I searched some of my older personal guides and found the options below, which I was already aware of:

- You should stripe mirrors for the best IO.
- RAIDZ2 is not exactly fast; don't use it for pools larger than 10 disks.
- Make sure that when you created your ZFS pool you used an ashift value matching the physical block size of your disks.
- No gains with a read cache or an SLOG for specific configurations. ZFS loves RAM, so you can also tweak the ARC to a larger size for some performance gains.
- zfs set xattr=sa (pool): store the Linux extended attributes as system attributes. This stops the file system from writing tiny files and writes the attributes directly to the inodes.
- zfs set sync=disabled (pool): disable sync. This may seem dangerous, but do it anyway! You will get a huge performance gain.
- zfs set compression=lz4 (pool/dataset): set the default compression here; this is currently the best compression algorithm.
- zfs set atime=off (pool): this disables updating the access time on every file that is accessed; this can double IOPS.
- zfs set recordsize=(value) (pool/dataset): the recordsize value is determined by the type of data on the file system: 16K (or an exact match) for VM images and databases, or 1M for collections of 5-9 MB JPG files, GB+ movies, etc. If you are unsure, the default of 128K is good enough for all-around mixes of file sizes.
- acltype=posixacl (default acltype=off): Hm, I don't have any info on this one, but I have seen it set like this on many occasions. Mine is set to off.
- primarycache=metadata (for VM storage): interesting article with production-level examples: https://www.ikus-soft.com/en/blog/2018-05-23-proxmox-primarycache-all-metadata/
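For my guides, the options above could be collected in one place and turned into `zfs set` command lines. A minimal sketch, assuming a hypothetical dataset `tank/vmdata` and a helper `zfs_set_commands` of my own; it only prints the commands and the values are just the ones from my notes, not a recommendation.

```python
from typing import Dict, List


def zfs_set_commands(dataset: str, props: Dict[str, str]) -> List[str]:
    """Render one 'zfs set property=value dataset' line per property."""
    return [f"zfs set {name}={value} {dataset}" for name, value in props.items()]


# Values taken from the notes above; the dataset name is hypothetical.
vm_props = {
    "atime": "off",
    "compression": "lz4",
    "xattr": "sa",
    "recordsize": "16K",
}

cmds = zfs_set_commands("tank/vmdata", vm_props)
for line in cmds:
    print(line)
```

Printing instead of executing lets me review the lines before pasting them into a shell.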

Thank you for all that great info! I really appreciate it, since I am adding nice tips to my guides and making them more advanced.

I am really looking forward to your answer to my last post.

By the way, I am using 2.5-inch 10k SAS drives of 1.2 TB each, with 512-byte logical and physical sectors, so the ashift value I am going to use is 9.

Proxmox itself is installed on a mirrored SSD pair. Those disks also report 512-byte logical/physical sectors (even though they are SSDs and, for some reason, lie about that), and ashift is 9 there too.
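The way I arrive at the ashift value is simply the base-2 logarithm of the sector size in bytes. A small sketch of that arithmetic (the function name `ashift_for_sector_size` is my own):

```python
def ashift_for_sector_size(size_bytes: int) -> int:
    """Return log2 of the sector size; the size must be a power of two."""
    if size_bytes <= 0 or size_bytes & (size_bytes - 1):
        raise ValueError("sector size must be a positive power of two")
    return size_bytes.bit_length() - 1


print(ashift_for_sector_size(512))   # 2**9  = 512  -> 9
print(ashift_for_sector_size(4096))  # 2**12 = 4096 -> 12
```

So 512-byte sectors give ashift=9, and disks with 4K physical sectors would give ashift=12.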