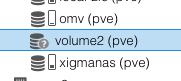

Hi everyone, I have a cluster with 3 nodes, and on the node I added most recently I can't see that node's local ZFS storage.

I was told that the problem is that the pool does not have the same ZFS pool name as the cluster. I'm not very experienced with Proxmox clusters, so is what I was told correct? How can I make this local ZFS pool available to the whole cluster without destroying it? Thank you all.

I was told that the problem arises from the fact that the pool does not have the same ZFS pool name as the cluster. Not very experienced in the proxmox cluster, what I was told is correct. How can I make this local zfs pool available to the whole cluster without destroying it? Thank you all .