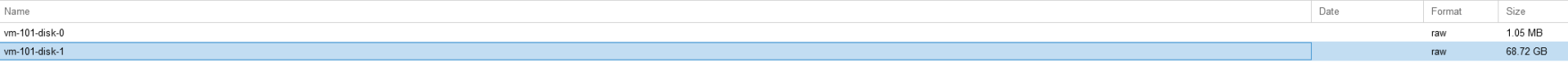

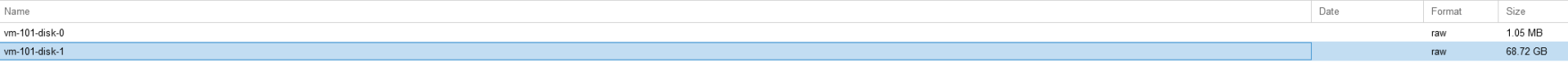

I have a single-disk ZFS pool (RAID0) on my PVE host. There is only one VM on it, with these disks:

The vDisk is configured to be only 64GB and I have a couple of snapshots that I expect are lightweight.

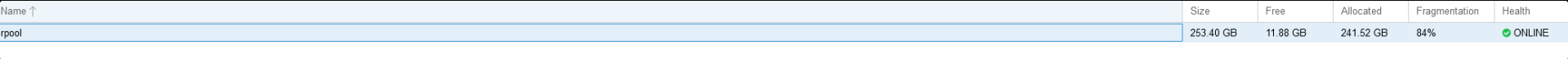

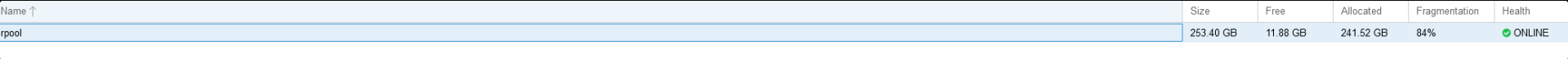

When I check the PVE host's ZFS pool usage, I get this:

Why am I seeing 241.52GB of allocated space? How do I trace what's causing this?
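One way to trace where the space goes is ZFS's own per-dataset accounting. It splits each dataset's `used` value into live data (`usedbydataset`), snapshots (`usedbysnapshots`), refreservation (`usedbyrefreservation`) and child datasets (`usedbychildren`). Below is a minimal Python sketch that assumes it runs as root on the PVE host with the `zfs` CLI on the PATH. The helper names (`parse_space`, `top_consumers`, `report`) are hypothetical. The default pool name `rpool` is also only an assumption and may need changing.

```python
import subprocess
from dataclasses import dataclass

# Properties requested from `zfs list`, in output order. The usedby* properties
# split each dataset's `used` value into where the space actually goes.
PROPS = ["name", "avail", "used", "usedbysnapshots", "usedbydataset",
         "usedbyrefreservation", "usedbychildren"]


@dataclass
class SpaceRow:
    name: str
    avail: int
    used: int
    usedsnap: int       # held only by snapshots
    usedds: int         # referenced by the dataset/zvol itself
    usedrefreserv: int  # set aside by refreservation (e.g. thick-provisioned zvols)
    usedchild: int      # consumed by descendant datasets


def _to_int(value: str) -> int:
    # Treat "-" (property not applicable) as 0 bytes.
    return 0 if value == "-" else int(value)


def parse_space(text: str) -> list[SpaceRow]:
    """Parse tab-separated `zfs list -H -p` output with the PROPS columns."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue
        cols = line.split("\t")
        if len(cols) != len(PROPS):
            raise ValueError(f"unexpected column count in line: {line!r}")
        name, *nums = cols
        rows.append(SpaceRow(name, *(_to_int(n) for n in nums)))
    return rows


def run_zfs_space(pool: str) -> str:
    # -H: no header, tab-separated; -p: exact byte counts; -r: include descendants.
    cmd = ["zfs", "list", "-H", "-p", "-r", "-t", "filesystem,volume",
           "-o", ",".join(PROPS), pool]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def top_consumers(rows: list[SpaceRow], n: int = 5) -> list[SpaceRow]:
    """Rank datasets by the space they hold themselves (children excluded to avoid double counting)."""
    def own(r: SpaceRow) -> int:
        return r.usedsnap + r.usedds + r.usedrefreserv
    return sorted(rows, key=own, reverse=True)[:n]


def report(pool: str = "rpool", n: int = 5) -> None:
    # "rpool" is an assumed pool name; pass your own pool if it differs.
    def gib(b: int) -> float:
        return b / 2**30
    print(f"{'NAME':40} {'USED':>9} {'SNAP':>9} {'DATA':>9} {'REFRES':>9}  (GiB)")
    for r in top_consumers(parse_space(run_zfs_space(pool)), n):
        print(f"{r.name:40} {gib(r.used):9.2f} {gib(r.usedsnap):9.2f} "
              f"{gib(r.usedds):9.2f} {gib(r.usedrefreserv):9.2f}")
```

Calling `report("rpool")` lists the largest consumers first. A zvol with a large `usedbyrefreservation` would point at a reservation (thick provisioning) rather than at snapshots. A large `usedbysnapshots` would mean the snapshots are not as lightweight as expected.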