ZFS Pool Capacity Discrepancy

- Thread starter cjones

Can you please send the output of zfs list, zpool list and zpool status?

I just noticed from zfs list that vm-102-disk-2 is reporting 3.42T in size when it's only configured for 2T in the VM's config, so that's interesting.

Code:

root@SkyNetHost1:~# zfs list
NAME                              USED  AVAIL  REFER  MOUNTPOINT
Datastore                        3.48T  40.4G   208K  /Datastore
Datastore/base-999999999-disk-0   112K  40.4G   112K  -
Datastore/vm-101-disk-0           384K  40.4G   384K  -
Datastore/vm-101-disk-1          5.89G  40.4G  5.89G  -
Datastore/vm-102-disk-0           384K  40.4G   384K  -
Datastore/vm-102-disk-1          9.79G  40.4G  9.79G  -
Datastore/vm-102-disk-2          3.42T  40.4G  3.42T  -
Datastore/vm-103-disk-0          3.50G  40.4G  3.50G  -
Datastore/vm-106-disk-0           384K  40.4G   384K  -
Datastore/vm-106-disk-1          9.09G  40.4G  9.09G  -
Datastore/vm-107-disk-0          6.38G  40.4G  6.38G  -
rpool                            4.13G   221G   104K  /rpool
rpool/ROOT                       4.12G   221G    96K  /rpool/ROOT
rpool/ROOT/pve-1                 4.12G   221G  4.12G  /
rpool/data                         96K   221G    96K  /rpool/data

Code:

root@SkyNetHost1:~# zpool list
NAME        SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
Datastore  5.45T  5.22T   235G        -         -    10%    95%  1.00x    ONLINE  -
rpool       232G  4.13G   228G        -         -     8%     1%  1.00x    ONLINE  -

Code:

root@SkyNetHost1:~# zpool status
  pool: Datastore
 state: ONLINE
  scan: scrub repaired 0B in 0 days 03:55:55 with 0 errors on Sun May 10 04:19:57 2020
config:

        NAME                                              STATE     READ WRITE CKSUM
        Datastore                                         ONLINE       0     0     0
          raidz2-0                                        ONLINE       0     0     0
            ata-ST91000640NS_9XG4X0RE                     ONLINE       0     0     0
            scsi-35000c5005837e09b                        ONLINE       0     0     0
            scsi-35000c50058379447                        ONLINE       0     0     0
            scsi-35000c5005837959b                        ONLINE       0     0     0
            scsi-35000c5005837b127                        ONLINE       0     0     0
            scsi-35000c500583850e7                        ONLINE       0     0     0
        logs
          ata-ADATA_SU800_2I1820015025-part1              ONLINE       0     0     0
          ata-MKNSSDRE250GB-LT_ML2001241005F0657-part1    ONLINE       0     0     0
        cache
          ata-ADATA_SU800_2I1820015025-part2              ONLINE       0     0     0
          ata-MKNSSDRE250GB-LT_ML2001241005F0657-part2    ONLINE       0     0     0

errors: No known data errors

  pool: rpool
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:00:17 with 0 errors on Sun May 10 00:24:21 2020
config:

        NAME                                              STATE     READ WRITE CKSUM
        rpool                                             ONLINE       0     0     0
          mirror-0                                        ONLINE       0     0     0
            ata-MKNSSDRE250GB-LT_ML1901021004926E6-part3  ONLINE       0     0     0
            ata-MKNSSDRE250GB-LT_ML181024100460918-part3  ONLINE       0     0     0

errors: No known data errors

I see that you use a RAIDZ vdev. If the block size is not well aligned with the RAIDZ layout, the parity overhead can explode. See https://forum.proxmox.com/threads/zfs-shows-42-22tib-available-but-only-gives-the-vm-25.69874/ where someone else had a similar problem.
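A rough Python sketch of why the parity overhead can blow up on RAIDZ when blocks are small. It assumes (none of this is confirmed in the thread) that Datastore is a single 6-disk RAIDZ2 vdev with ashift=12 (4 KiB sectors). It follows the allocation arithmetic of OpenZFS's vdev_raidz_asize() and the fixed 128 KiB "deflate" ratio zfs uses when reporting space; the helper names ceil_div, raidz_asize and zfs_reported are hypothetical.

```python
# Sketch: RAIDZ allocation for small zvol blocks.
# ASSUMPTIONS (not confirmed in the thread): Datastore is one 6-disk RAIDZ2
# vdev with ashift=12 (4 KiB sectors). Function names are hypothetical.

TiB = 1 << 40


def ceil_div(a: int, b: int) -> int:
    """Integer division rounding up."""
    return -(-a // b)


def raidz_asize(psize: int, ndisks: int = 6, nparity: int = 2, ashift: int = 12) -> int:
    """Raw bytes allocated for one block of psize bytes on a RAIDZ vdev.

    Same arithmetic as OpenZFS vdev_raidz_asize(): data sectors, plus
    nparity sectors per stripe row, rounded up to a multiple of
    (nparity + 1) sectors (the padding).
    """
    sector = 1 << ashift
    data = ceil_div(psize, sector)                        # data sectors
    parity = nparity * ceil_div(data, ndisks - nparity)   # nparity per stripe row
    total = data + parity
    total = ceil_div(total, nparity + 1) * (nparity + 1)  # padding
    return total * sector


def zfs_reported(logical: int, volblocksize: int) -> int:
    """Approximate zfs list USED for `logical` bytes written to a zvol.

    zfs converts raw allocation to net space with a fixed ratio computed
    for a 128 KiB block (integer math, in 512-byte units), so blocks that
    need extra padding appear larger than their logical size.
    """
    ratio = (1 << 17) // (raidz_asize(1 << 17) >> 9)
    raw = (logical // volblocksize) * raidz_asize(volblocksize)
    return (raw >> 9) * ratio


for kib in (8, 16, 64):
    used = zfs_reported(2 * TiB, kib * 1024)
    print(f"volblocksize {kib:>2}K: 2 TiB written -> {used / TiB:.2f} TiB in zfs list")
```

Under these assumptions an 8K volblocksize roughly doubles what zfs list reports (about 4.00 TiB for 2 TiB written), because each 8 KiB block needs 2 data plus 2 parity sectors, padded up to 6. With 16K and 64K blocks the result is about 2.00 TiB. The actual volblocksize and ashift of vm-102-disk-2 are not shown in the thread, so treat this as an illustration, not a diagnosis.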

Also, a ZFS pool should not be filled to more than ~80% as performance will drop otherwise.
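The ~80% guideline can be checked with simple arithmetic on the zpool list figures posted above. This Python sketch uses the rounded SIZE (5.45T) and ALLOC (5.22T) values for Datastore. These are raw numbers that include parity, so the results are approximate; the variable names are only illustrative.

```python
# Sketch: Datastore fill level vs. the ~80% guideline, from the rounded
# zpool list values (raw space, parity included), so results are approximate.

pool_size_t = 5.45   # zpool list SIZE for Datastore, in T
pool_alloc_t = 5.22  # zpool list ALLOC for Datastore, in T
target = 0.80        # rule-of-thumb maximum fill level

fill = pool_alloc_t / pool_size_t
to_free_t = max(0.0, pool_alloc_t - target * pool_size_t)

print(f"fill level: {fill:.1%}")
print(f"raw space to free to get back to {target:.0%}: {to_free_t:.2f}T")
```

This gives about 95.8%, in line with the 95% CAP column (the inputs are rounded), and roughly 0.86T of raw allocation that would have to be freed to get back under 80%.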

Are you referring to the block size of the VM's disks relative to the block size of the ZFS pool?

Thanks for the note on the 80% threshold. I'm aware of it, so I probably should have reached out much earlier about this...

Wow. Personally, I've never seen a pool get above 93% without throwing "out of space" errors all over the place, so you may run into trouble soon.

Why such a large discrepancy? What is causing this?

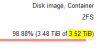

Oh, and in a bit more detail than @aaron's answer: zpool and zfs report different things. zpool shows the raw space in use on the vdevs (the redundancy layer), including parity, while zfs shows the space after redundancy, i.e. the (estimated) net space. The two therefore do not match, and the GUI shows the output of both commands.
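The relation between the two views can be sketched for this pool. Assuming (not confirmed in the thread) a single 6-disk RAIDZ2 vdev with 4 KiB sectors, a 128 KiB reference block takes 32 data plus 16 parity sectors, so zfs counts roughly 2 bytes of net space for every 3 raw bytes that zpool reports. ZFS itself uses a slightly truncated integer ratio internally; this Python sketch uses the plain fraction.

```python
# Sketch: mapping zpool list (raw, parity included) to zfs list (net) figures.
# ASSUMPTIONS (not confirmed in the thread): one 6-disk RAIDZ2 vdev, 4 KiB
# sectors, and a 128 KiB reference block as used for zfs space accounting.

disks, parity = 6, 2
data_sectors = (128 * 1024) // 4096                           # 32 data sectors
rows = -(-data_sectors // (disks - parity))                   # 8 stripe rows
raw_sectors = data_sectors + parity * rows                    # 32 + 16 = 48
raw_sectors = -(-raw_sectors // (parity + 1)) * (parity + 1)  # padding (none here)
net_per_raw = data_sectors / raw_sectors                      # 2/3

zpool_alloc_t, zpool_size_t = 5.22, 5.45                      # from zpool list
print(f"net/raw ratio: {net_per_raw:.3f}")
print(f"ALLOC {zpool_alloc_t}T raw -> about {zpool_alloc_t * net_per_raw:.2f}T net")
print(f"SIZE  {zpool_size_t}T raw -> about {zpool_size_t * net_per_raw:.2f}T net")
```

With these assumptions, ALLOC 5.22T maps to about 3.48T, which matches the USED value of Datastore in zfs list, and SIZE 5.45T maps to about 3.63T. The remaining gap to USED + AVAIL (about 3.52T) is most likely the slop space ZFS holds back, 1/32 of the pool by default.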

Yeah... I'm in the process of mitigating it. I've already hit issues and so far have had to disable things such as backups and delete unimportant VMs...

Thank you for the clarification.