There seem to be a lot of posts about ZFS over iSCSI, but none that really detail all the steps in one full tutorial.

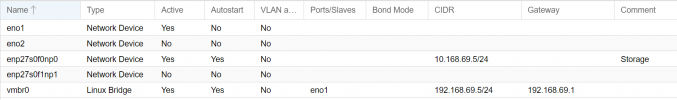

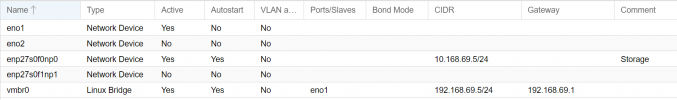

Currently, I have two networks set up: my main 1Gb LAN and a dedicated 40Gb storage network. I can create a VM with ZFS over iSCSI, and the disk appears on the TrueNAS machine, but when I start the VM it fails to start. SSH authentication works fine from Proxmox to TrueNAS. This is the first machine to be installed; I plan to expand to more, but there are not enough at the beginning for Ceph.

I can ping in both directions between the Proxmox server and TrueNAS SCALE without an issue.
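A successful ping only shows that the two hosts can reach each other at the IP level; it does not show that the iSCSI portal is accepting TCP connections. A minimal sketch of such a check, assuming the portal listens on the standard iSCSI port 3260 (the port is not stated in this post, and `portal_reachable` is a hypothetical helper name):

```python
import socket


def portal_reachable(host, port=3260, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened.

    Port 3260 is the registered iSCSI port; that the portal uses it
    here is an assumption, not something confirmed in the post.
    """
    try:
        # create_connection raises OSError on refusal, timeout, or no route.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run from the Proxmox host, `portal_reachable("10.168.69.8")` would check the portal address used in the storage configuration.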

storage.cfg ZFS over ISCSI

zfs: TruenasScaleISCSI

blocksize 8k

iscsiprovider freenas

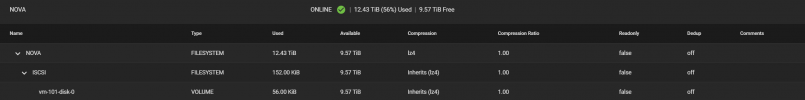

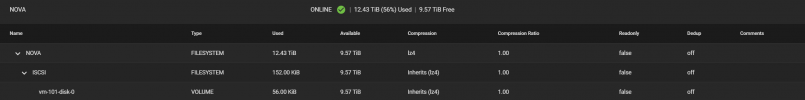

pool NOVA/ISCSI

portal 10.168.69.8

target iqn.2005-10.org.freenas.ctl:proxmox-tgt

content images

freenas_apiv4_host 10.168.69.8

freenas_password <root_password>

freenas_use_ssl 0

freenas_user root

nowritecache 0

sparse 1
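To see what these settings add up to, here is a small sketch that parses a `storage.cfg` stanza and assembles an `iscsi://portal/target/lun` URL of the kind QEMU's iSCSI driver accepts. The helper names (`parse_stanza`, `iscsi_url`) are hypothetical, the exact path Proxmox builds internally is an assumption, the target IQN in the sample is an assumption about the intended value, and the LUN number 0 is taken from the `lun-id` value reported in the syslog further down.

```python
def parse_stanza(text):
    """Parse one storage.cfg stanza into a dict.

    The first non-empty line is '<type>: <storage-id>'; every other
    non-empty line is '<key> <value>'.
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    storage_type, storage_id = (part.strip() for part in lines[0].split(":", 1))
    cfg = {"type": storage_type, "id": storage_id}
    for line in lines[1:]:
        parts = line.split(None, 1)  # split on the first run of whitespace
        cfg[parts[0]] = parts[1] if len(parts) > 1 else ""
    return cfg


def iscsi_url(cfg, lun):
    """Build an iscsi:// URL from the portal, target, and LUN number."""
    return "iscsi://{}/{}/{}".format(cfg["portal"], cfg["target"], lun)


# Abbreviated copy of the stanza from this post (target value assumed).
SAMPLE = """\
zfs: TruenasScaleISCSI
    blocksize 8k
    iscsiprovider freenas
    pool NOVA/ISCSI
    portal 10.168.69.8
    target iqn.2005-10.org.freenas.ctl:proxmox-tgt
    content images
    sparse 1
"""

cfg = parse_stanza(SAMPLE)
print(iscsi_url(cfg, lun=0))
```

If `qemu-img` on the Proxmox host was built with iSCSI support, running `qemu-img info` against such a URL may surface an error that the task log does not show.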

VM Conf

boot: order=virtio0;ide2;net0

cores: 6

ide2: TrunasScaleISO:iso/ubuntu-20.04.5-live-server-amd64.iso,media=cdrom,size=1373568K

memory: 9472

meta: creation-qemu=7.0.0,ctime=1665090170

name: TestICSI

net0: virtio=2E:E4:5A:AE:06:C2,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsihw: virtio-scsi-pci

smbios1: uuid=9a24b130-ba2d-4a91-9bfb-6322d136c16e

sockets: 1

virtio0: TruenasScaleISCSI:vm-101-disk-0,size=100G

vmgenid: b346d4ff-1b08-41db-bb57-0963122e18ab

TrueNAS showing the automatically created disk

syslog when starting machine

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: start VM 101: UPID:proxmox1:002752DD:017A3D77:633F452A:qmstart:101:root@pam:

Oct 07 04:14:18 proxmox1 pvedaemon[2573200]: <root@pam> starting task UPID:proxmox1:002752DD:017A3D77:633F452A:qmstart:101:root@pam:

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_lun_command : list_lu(/dev/zvol/NOVA/ISCSI/vm-101-disk-0)

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_check : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_connect : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_connect : REST connection header Content-Type:'text/html'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_connect : Changing to v2.0 API's

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_connect : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_connect : REST connection header Content-Type:'application/json; charset=utf-8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_connect : REST connection successful to '10.168.69.8' using the 'http' protocol

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_check : successful : Server version: TrueNAS-SCALE-22.02.4

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_check : TrueNAS-SCALE Unformatted Version: 22020400

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_check : Using TrueNAS-SCALE API version v2.0

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_globalconfiguration : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_globalconfiguration : target_basename=iqn.2005-10.org.freenas.ctl

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_get_targetid : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_get_targetid : successful : 1

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target_to_extent : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target_to_extent : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_extent : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_extent : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_lu : called with (method: 'list_lu'; result_value_type: 'name'; param[0]: '/dev/zvol/NOVA/ISCSI/vm-101-disk-0')

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_lu : TrueNAS object to find: 'zvol/NOVA/ISCSI/vm-101-disk-0'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_lu 'zvol/NOVA/ISCSI/vm-101-disk-0' with key 'name' found with value: '/dev/zvol/NOVA/ISCSI/vm-101-disk-0'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_lun_command : list_view(/dev/zvol/NOVA/ISCSI/vm-101-disk-0)

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_view : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_get_targetid : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_get_targetid : successful : 1

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target_to_extent : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target_to_extent : successful

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_extent : called

Oct 07 04:14:18 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_extent : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_lu : called with (method: 'list_view'; result_value_type: 'lun-id'; param[0]: '/dev/zvol/NOVA/ISCSI/vm-101-disk-0')

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_lu : TrueNAS object to find: 'zvol/NOVA/ISCSI/vm-101-disk-0'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_lu 'zvol/NOVA/ISCSI/vm-101-disk-0' with key 'lun-id' found with value: '0'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_lun_command : list_extent(/dev/zvol/NOVA/ISCSI/vm-101-disk-0)

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_extent : called with (method: 'list_extent'; params[0]: '/dev/zvol/NOVA/ISCSI/vm-101-disk-0')

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : called

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : called

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_get_targetid : called

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : called

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_get_targetid : successful : 1

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target_to_extent : called

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_target_to_extent : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_extent : called

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : called for host '10.168.69.8'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_api_call : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_iscsi_get_extent : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : successful

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_extent TrueNAS object to find: 'zvol/NOVA/ISCSI/vm-101-disk-0'

Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::run_list_extent 'zvol/NOVA/ISCSI/vm-101-disk-0' wtih key 'naa' found with value: '0x6589cfc00000083bbdffe8d0cdf4acc6'

Oct 07 04:14:22 proxmox1 systemd[1]: Started 101.scope.

Oct 07 04:14:22 proxmox1 systemd[1]: 101.scope: Succeeded.

Oct 07 04:14:22 proxmox1 pvedaemon[2577117]: start failed: QEMU exited with code 1

Oct 07 04:14:22 proxmox1 pvedaemon[2573200]: <root@pam> end task UPID:proxmox1:002752DD:017A3D77:633F452A:qmstart:101:root@pam: start failed: QEMU exited with code 1

The log looks good: from what I can see, Proxmox and TrueNAS are talking back and forth, and the disk is created. If I remove the iSCSI disk, the VM starts without an issue.
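Most of the syslog above is trace output from the FreeNAS LUN plugin, which makes the actual failure easy to miss. A rough sketch of a filter that drops those trace lines and keeps the rest, assuming the log has been saved to a string (the function name `non_trace_lines` and the marker constant are illustrative choices, not part of Proxmox):

```python
# Prefix that every plugin trace line in the syslog above carries.
TRACE_MARKER = "PVE::Storage::LunCmd::FreeNAS::"


def non_trace_lines(log_text):
    """Return the non-empty log lines that are not plugin trace output."""
    return [
        line
        for line in log_text.splitlines()
        if line.strip() and TRACE_MARKER not in line
    ]


# A few lines copied from the syslog in this post.
SAMPLE_LOG = """\
Oct 07 04:14:19 proxmox1 pvedaemon[2577117]: PVE::Storage::LunCmd::FreeNAS::freenas_list_lu : successful
Oct 07 04:14:22 proxmox1 systemd[1]: Started 101.scope.
Oct 07 04:14:22 proxmox1 systemd[1]: 101.scope: Succeeded.
Oct 07 04:14:22 proxmox1 pvedaemon[2577117]: start failed: QEMU exited with code 1
"""

for line in non_trace_lines(SAMPLE_LOG):
    print(line)
```

With the trace lines removed, what remains is only the generic `QEMU exited with code 1`, which suggests the underlying QEMU error message is not captured in this excerpt.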

Please be gentle; this is my first time with iSCSI.

Thanks

Thaimichael
