Hello Proxmox community,

TL;DR

Huge performance hit after upgrading to 6.3-3, probably related to IO delay.

Replication from system to system will stall completely for up to a minute at a time.

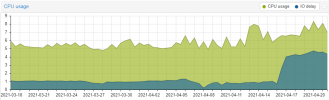

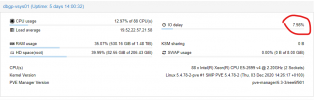

This graph shows the sharp rise in IO delay. The rise coincides exactly with the update, and the load on this server did not change.

Before the update, the IO delay was generally between 0 and 1%.
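To compare a host against the 0 to 1% IO delay seen before the update, the figure can be approximated from a shell. This is only a sketch: it assumes the PVE graph plots the share of CPU time spent in iowait, as reported on the aggregate `cpu` line of /proc/stat, and the helper names (`cpu_sample`, `iowait_between`, `iowait_pct`) are made up for this example.

```sh
#!/bin/sh
# Sketch: approximate the PVE "IO delay" value from the command line.
# ASSUMPTION: IO delay is treated as the share of CPU time spent in iowait,
# taken from two samples of the aggregate "cpu" line in /proc/stat.

# cpu_sample: read /proc/stat-style text on stdin and print "iowait total".
# Fields: cpu user nice system idle iowait irq softirq steal guest guest_nice.
# guest/guest_nice are already included in user/nice, so only fields 2-9 are summed.
# %.0f avoids exponent notation for large jiffy counts in older awks.
cpu_sample() {
    awk '/^cpu / { t = 0; for (i = 2; i <= 9; i++) t += $i; printf "%.0f %.0f\n", $6, t }'
}

# iowait_between "W1 T1" "W2 T2": print iowait as a percentage of elapsed CPU time.
iowait_between() {
    echo "$1 $2" | awk '{ dw = $3 - $1; dt = $4 - $2
        if (dt > 0) printf "%.2f\n", 100 * dw / dt; else print "n/a" }'
}

# iowait_pct [SECONDS]: sample /proc/stat twice, SECONDS apart (default 2).
iowait_pct() {
    s1=$(cpu_sample < /proc/stat)
    sleep "${1:-2}"
    s2=$(cpu_sample < /proc/stat)
    iowait_between "$s1" "$s2"
}
```

For example, `iowait_pct 5` prints the iowait percentage over a 5-second window, which can be run on both the upgraded and the idle host.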

```
root@dbgp-vsys01:~# zpool status
  pool: rpool
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:07:07 with 0 errors on Sun Apr 11 00:31:08 2021
config:

        NAME        STATE     READ WRITE CKSUM
        rpool       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            sda2    ONLINE       0     0     0
            sdb2    ONLINE       0     0     0

errors: No known data errors

  pool: tank
 state: ONLINE
  scan: resilvered 196G in 0 days 00:10:27 with 0 errors on Sat Apr 17 00:32:39 2021
config:

        NAME                                             STATE     READ WRITE CKSUM
        tank                                             ONLINE       0     0     0
          mirror-0                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502XJ4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502W44P0IGN  ONLINE       0     0     0
          mirror-1                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502XE4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502V64P0IGN  ONLINE       0     0     0
          mirror-2                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF813200244P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF813200AY4P0IGN  ONLINE       0     0     0
          mirror-3                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502XN4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810503114P0IGN  ONLINE       0     0     0
          mirror-4                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502VL4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF813102KU4P0IGN  ONLINE       0     0     0
          mirror-5                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502W94P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810503F84P0IGN  ONLINE       0     0     0
          mirror-6                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF8132002L4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502VD4P0IGN  ONLINE       0     0     0
          mirror-7                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF813102JC4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF8105047S4P0IGN  ONLINE       0     0     0
          mirror-8                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810503F14P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810502V54P0IGN  ONLINE       0     0     0
          mirror-9                                       ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF8105031A4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810504W44P0IGN  ONLINE       0     0     0
          mirror-10                                      ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF810503FC4P0IGN  ONLINE       0     0     0
            nvme-INTEL_SSDPE2KX040T7_PHLF727500J34P0IGN  ONLINE       0     0     0
        logs
          nvme-INTEL_SSDPE21K375GA_PHKE751000AW375AGN    ONLINE       0     0     0
          nvme-INTEL_SSDPE21K375GA_PHKE75100081375AGN    ONLINE       0     0     0

errors: No known data errors
```

```
root@dbgp-vsys01:~# zfs list
NAME                          USED  AVAIL  REFER  MOUNTPOINT
rpool                        91.2G   124G   104K  /rpool
rpool/ROOT                   82.5G   124G    96K  /rpool/ROOT
rpool/ROOT/pve-1             82.5G   124G  82.5G  /
rpool/data                     96K   124G    96K  /rpool/data
rpool/swap                   8.50G   131G  1.24G  -
tank                         2.01T  36.6T   104K  /tank
tank/config                  14.0M  36.6T  7.34M  /tank/config
tank/data                    1.99T  36.6T   176K  /tank/data
tank/data/migrate              96K  36.6T    96K  /tank/data/migrate
tank/data/subvol-100-disk-1  1.32G  6.74G  1.26G  /tank/data/subvol-100-disk-1
tank/data/subvol-100-disk-2   515G  36.6T   461G  /tank/data/subvol-100-disk-2
tank/data/subvol-100-disk-3  5.24G  7.13G   894M  /tank/data/subvol-100-disk-3
tank/data/subvol-104-disk-1  28.9G  36.6T  22.3G  /tank/data/subvol-104-disk-1
tank/data/subvol-132-disk-0   930M  7.11G   914M  /tank/data/subvol-132-disk-0
tank/data/subvol-172-disk-0  35.3G  70.7G  29.3G  /tank/data/subvol-172-disk-0
tank/data/subvol-174-disk-0  53.9G  47.2G  52.8G  /tank/data/subvol-174-disk-0
tank/data/subvol-176-disk-0  8.10G  42.2G  7.78G  /tank/data/subvol-176-disk-0
tank/data/subvol-196-disk-0  1.66G  6.51G  1.49G  /tank/data/subvol-196-disk-0
tank/data/subvol-197-disk-0  2.18G  6.02G  1.98G  /tank/data/subvol-197-disk-0
tank/data/vm-105-disk-0      4.68G  36.6T  4.68G  -
tank/data/vm-107-disk-0      54.6G  36.6T  53.0G  -
tank/data/vm-113-disk-0      10.8G  36.6T  10.5G  -
tank/data/vm-118-disk-0      17.0G  36.6T  17.0G  -
tank/data/vm-127-disk-0      52.4G  36.6T  41.9G  -
tank/data/vm-136-disk-0      12.2G  36.6T  12.2G  -
tank/data/vm-139-disk-0      26.6G  36.6T  25.2G  -
tank/data/vm-146-disk-0      2.57G  36.6T  2.42G  -
tank/data/vm-150-disk-0      18.2G  36.6T  17.1G  -
tank/data/vm-150-disk-1      2.30G  36.6T  2.30G  -
tank/data/vm-159-disk-0      29.6G  36.6T  28.6G  -
tank/data/vm-164-disk-0      5.01G  36.6T  4.20G  -
tank/data/vm-168-disk-0      29.2G  36.6T  28.3G  -
tank/data/vm-169-disk-0      16.8G  36.6T  16.8G  -
tank/data/vm-170-disk-0      30.7G  36.6T  30.7G  -
tank/data/vm-171-disk-0      35.2G  36.6T  35.2G  -
tank/data/vm-175-disk-0      24.8G  36.6T  17.8G  -
tank/data/vm-181-disk-0      16.3G  36.6T  16.3G  -
tank/data/vm-215-disk-0      26.0G  36.6T  25.8G  -
tank/data/vm-215-disk-1      6.91M  36.6T  6.91M  -
tank/data/vm-230-disk-0      24.1G  36.6T  19.3G  -
tank/data/vm-280-disk-0       111G  36.6T  92.7G  -
tank/data/vm-280-disk-1       209G  36.6T   172G  -
tank/data/vm-282-disk-0       185G  36.6T   150G  -
tank/data/vm-714-disk-0      42.8G  36.6T  41.7G  -
tank/data/vm-715-disk-0      44.2G  36.6T  40.4G  -
tank/data/vm-715-disk-1      32.0G  36.6T  32.0G  -
tank/data/vm-905-disk-0       241G  36.6T   202G  -
tank/data/vm-905-disk-1      83.2G  36.6T  31.6G  -
tank/data/vm-999-disk-1        56K  36.6T    56K  -
tank/encrypted_data           192K  36.6T   192K  /tank/encrypted_data
```

```
root@dbgp-vsys01:~# pveversion -v
proxmox-ve: 6.3-1 (running kernel: 5.4.78-2-pve)
pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)
pve-kernel-5.4: 6.3-3
pve-kernel-helper: 6.3-3
pve-kernel-5.3: 6.1-6
pve-kernel-5.4.78-2-pve: 5.4.78-2
pve-kernel-5.4.34-1-pve: 5.4.34-2
pve-kernel-4.15: 5.4-8
pve-kernel-5.3.18-3-pve: 5.3.18-3
pve-kernel-5.3.13-3-pve: 5.3.13-3
pve-kernel-4.15.18-20-pve: 4.15.18-46
pve-kernel-4.15.17-1-pve: 4.15.17-9
ceph-fuse: 12.2.11+dfsg1-2.1+b1
corosync: 3.1.0-pve1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: residual config
ifupdown2: 3.0.0-1+pve3
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.20-pve1
libproxmox-acme-perl: 1.0.7
libproxmox-backup-qemu0: 1.0.2-1
libpve-access-control: 6.1-3
libpve-apiclient-perl: 3.1-3
libpve-common-perl: 6.3-5
libpve-guest-common-perl: 3.1-5
libpve-http-server-perl: 3.1-1
libpve-storage-perl: 6.3-6
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.6-2
lxcfs: 4.0.6-pve1
novnc-pve: 1.1.0-1
proxmox-backup-client: 1.0.8-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.4-5
pve-cluster: 6.2-1
pve-container: 3.3-3
pve-docs: 6.3-1
pve-edk2-firmware: 2.20200531-1
pve-firewall: 4.1-3
pve-firmware: 3.1-3
pve-ha-manager: 3.1-1
pve-i18n: 2.2-2
pve-qemu-kvm: 5.1.0-8
pve-xtermjs: 4.7.0-3
qemu-server: 6.3-5
smartmontools: 7.1-pve2
spiceterm: 3.1-1
vncterm: 1.6-2
zfsutils-linux: 0.8.5-pve1
```

```
root@dbgp-vsys01:~# zpool iostat tank 2
              capacity     operations     bandwidth
pool        alloc   free   read  write   read  write
----------  -----  -----  -----  -----  -----  -----
tank        2.01T  37.9T     88  6.87K   944K  66.9M
tank        2.01T  37.9T      0  52.0K      0   473M
tank        2.01T  37.9T      0  2.82K  37.9K  42.1M
tank        2.01T  37.9T      0  12.4K  7.97K   108M
tank        2.01T  37.9T      0  15.3K      0   143M
tank        2.01T  37.9T      0  10.2K  7.97K  99.2M
tank        2.01T  37.9T      0  12.6K  7.97K   108M
tank        2.01T  37.9T      0  9.60K      0   107M
tank        2.01T  37.9T      0  11.7K  5.98K   111M
tank        2.01T  37.9T      2  43.2K  13.9K   379M
tank        2.01T  37.9T      0  6.58K  5.98K  73.5M
tank        2.01T  37.9T      2    822  14.0K  16.9M
tank        2.01T  37.9T      2  57.2K  13.9K   519M
tank        2.01T  37.9T      0  7.28K  5.98K  72.0M
tank        2.01T  37.9T      5   110K  45.8K   976M
tank        2.01T  37.9T      6  72.2K  33.9K   646M
tank        2.01T  37.9T      3  21.0K  17.9K   200M
tank        2.01T  37.9T      0  13.9K      0   124M
tank        2.01T  37.9T      0  9.24K      0  83.4M
tank        2.01T  37.9T      2  8.92K  12.0K  82.0M
tank        2.01T  37.9T      0  7.79K      0  74.2M
tank        2.01T  37.9T      1  6.53K  12.0K  67.2M
tank        2.01T  37.9T      0    389      0  7.71M
tank        2.01T  37.9T      0  14.0K      0   138M
tank        2.01T  37.9T      0  1.16K  3.98K  16.4M
tank        2.01T  37.9T      0  14.0K  5.98K   121M
tank        2.01T  37.9T      5  23.5K  21.9K   209M
tank        2.01T  37.9T      0  4.53K      0  36.0M
tank        2.01T  37.9T      0  2.55K  3.98K  24.9M
tank        2.01T  37.9T      1  2.20K  7.97K  36.6M
tank        2.01T  37.9T      2  3.42K  13.9K  35.0M
tank        2.01T  37.9T      0  7.16K      0  79.7M
tank        2.01T  37.9T      0      0  5.98K      0
tank        2.01T  37.9T      2      0  9.96K      0
tank        2.01T  37.9T      0  9.20K      0  94.5M
tank        2.01T  37.9T      0  35.0K      0   309M
tank        2.01T  37.9T      0  12.8K  1.99K   115M
tank        2.01T  37.9T      0  20.1K      0   182M
```

I have a duplicate/identical system that is sitting idle, so I can run whatever tests are needed to troubleshoot this.
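The `zpool iostat` samples include intervals where write bandwidth drops sharply (7.71M, and two samples at 0), which may correspond to the replication stalls. A minimal sketch for flagging such intervals automatically, assuming a "stall" is any sample whose pool write bandwidth falls below a threshold; `flag_stalls` and its 20 MiB/s default are hypothetical choices for illustration, and the K/M/G/T suffixes are assumed to be 1024-based:

```sh
#!/bin/sh
# Sketch: flag low-write intervals in `zpool iostat <pool> <interval>` output.
# ASSUMPTION: an interval counts as a stall when pool write bandwidth is below
# THRESHOLD_MIB (default 20 MiB/s, an arbitrary value picked for illustration).

# flag_stalls [THRESHOLD_MIB]: read zpool iostat rows on stdin, print the sample
# number and write bandwidth of each flagged interval, then a summary line.
flag_stalls() {
    awk -v thr_mib="${1:-20}" '
    # to_bytes: convert a human-readable value such as 7.71M to bytes
    # (suffixes assumed to be powers of 1024).
    function to_bytes(v,    n, u) {
        n = v + 0
        u = substr(v, length(v), 1)
        if (u == "K") n *= 1024
        else if (u == "M") n *= 1024 ^ 2
        else if (u == "G") n *= 1024 ^ 3
        else if (u == "T") n *= 1024 ^ 4
        return n
    }
    # Data rows have 7 fields (pool alloc free read-ops write-ops read-bw write-bw)
    # and a numeric alloc column; header and separator rows are skipped.
    NF == 7 && $2 ~ /^[0-9]/ {
        samples++
        if (to_bytes($7) < thr_mib * 1024 ^ 2) {
            stalls++
            printf "sample %d: write bandwidth %s\n", samples, $7
        }
    }
    END { printf "%d of %d samples below %s MiB/s\n", stalls, samples, thr_mib }'
}
```

Fed the 38 samples above with the default threshold, it should flag five intervals (samples 12, 23, 25, 33 and 34). On a live system, something like `zpool iostat -y tank 2 30 | flag_stalls 20` would summarize 30 fresh samples; `-y` omits the first row, which reports averages since boot rather than a 2-second interval.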

Any help would be appreciated.

Thanks,

Elliot