Hi,

I'm new to the Proxmox world. I've searched for an answer on this topic but couldn't work it out.

We would like to buy 4 Dell R760/R770 servers to run PVE. Right now we have 4 old Dell servers connected to an old SAN, with LVM on top and plain KVM/QEMU. We have ~50 VMs, and that number is fairly static.

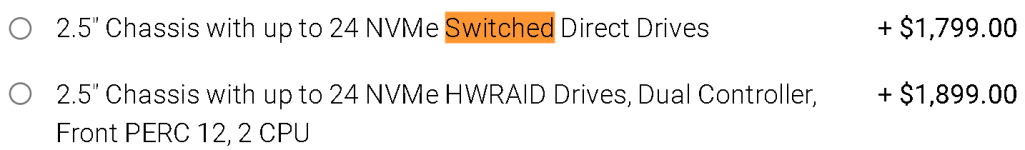

We have never used ZFS, so we are newbies here. We have read many times that ZFS should not be run on top of HW RAID, for data integrity reasons. Still, since our trial with Proxmox might not work out, we would like to leave the door open to going back to LVM+ext4, and we are undecided between these two options:

16 800GB NVMe direct drives, Smart Flow

or

16 800GB NVMe HWRAID Drives, Smart Flow, Dual Controller, Front PERC 12

With the first option we would be able to present all 16 drives directly to the OS, as an HBA would, correct? Then we could pool them together with ZFS and manage everything directly with it. A colleague says there will still be some type of controller involved (like the S160!?), but I read that each NVMe drive has dedicated PCIe lanes to the CPU, so it wouldn't make sense to have something between them.

With the second option we would leave the door open, BUT we don't understand whether configuring all the disks as "non-RAID" would still use some kind of cache on the HW RAID controllers. Following this, it seems that "non-RAID disks" will use the "Write-Through" option (good, right?) but will be presented as SAS (not NVMe), so I don't understand whether we would lose some ZFS functionality.

Aside from the fact that the PERC option will cost us over 1000 euro more than the "direct drives" option, is the second option a viable road, or could it lead to data loss or corruption, as everyone says about ZFS on top of HW RAID?

Also, will a Proxmox Basic (or Standard) subscription cover this type of issue, or is this something for the ZFS guys?
