Hello.

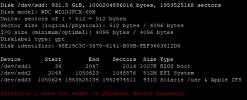

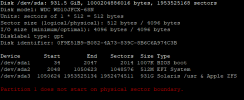

I just updated one of our servers, which runs ZFS in RAID 1 (a mirror). One of the disks was REALLY slow: the update was stuck for over an hour, and ZFS reported 3 checksum errors on that disk.

Running smartctl on that disk took over 10 seconds to return output, much longer than on the other disk.

Once I took the disk offline in ZFS, the update sped up and finished in 3 minutes.

I rebooted the server and it booted up just fine. It is now quicker than it used to be, since the failing disk had been slowing it down.

Now the question: is there anything I need to fix when replacing the disk, other than following these guides I usually use?

https://forum.proxmox.com/threads/disk-replacement-procedure-for-a-zfs-raid-1-install.21356/

https://edmondscommerce.github.io/replacing-failed-drive-in-zfs-zpool-on-proxmox/

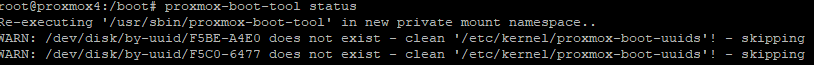

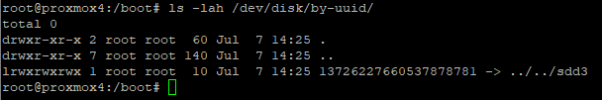

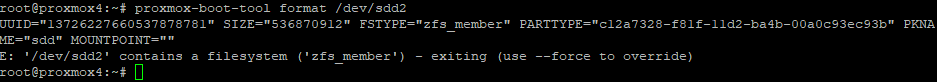

I ask because it says it can't find the GRUB directory on any of the disks.
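For reference, the replacement procedure in guides like the ones linked above usually comes down to a handful of commands. Below is a rough dry-run sketch that only prints them, so nothing gets touched by accident. The pool name `rpool`, the devices `/dev/sda` (healthy) and `/dev/sdb` (replacement), the old vdev name `sdb2`, and the ZFS data being on partition 2 are all assumptions for illustration; check `zpool status` and `lsblk` for the real names. It also assumes a legacy GRUB boot setup, so the last step may differ on other installs.

```shell
#!/bin/sh
# Dry-run sketch: prints the usual replacement commands instead of running them.
# Every name below is a hypothetical placeholder, not taken from the server above.
POOL="rpool"        # assumed pool name
HEALTHY="/dev/sda"  # assumed healthy mirror member
NEW="/dev/sdb"      # assumed replacement disk
OLD_VDEV="sdb2"     # assumed name of the offlined vdev, as shown by zpool status
ZFS_PART="2"        # assumed partition number holding the ZFS data

plan() {
  # 1. Copy the partition table from the healthy disk to the new disk.
  echo "sgdisk $HEALTHY -R $NEW"
  # 2. Give the new disk its own random GUIDs.
  echo "sgdisk -G $NEW"
  # 3. Replace the offlined vdev with the new disk's ZFS partition.
  echo "zpool replace -f $POOL $OLD_VDEV ${NEW}${ZFS_PART}"
  # 4. Watch the resilver until it completes.
  echo "zpool status $POOL"
  # 5. Make the new disk bootable (legacy GRUB assumed).
  echo "grub-install $NEW"
}

plan
```

Review the printed commands against your own device names before running any of them by hand, and wait for the resilver to finish before relying on the new disk.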
