Hello

One morning I noticed that the ZFS pool on my server was degraded: two SSDs appeared to have failed, and the LEDs on those disks had turned red.

The disks were 1 TB Samsung 850 Pro drives. Since I could no longer find any on the market, I decided to upgrade to larger 1.92 TB Kingston DC600M disks.

I replaced the failed disks and ran the zpool replace commands, and the resilvering completed successfully. All the devices in the pool were ONLINE again.

After a final reboot of the server, the two new disks showed red LEDs again, and the ZFS pool was once again degraded.

I moved one of the two disks to a different bay in the cage to rule out a backplane problem, but the red LED followed the disk.

I find it hard to believe that two new disks are both faulty.

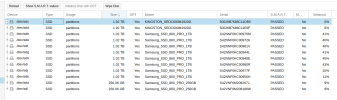

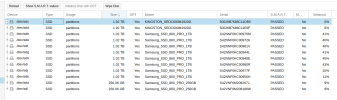

The SMART status of the disks is OK, so I don't understand why ZFS considers them faulty.

Thanks a lot.
