Hi,

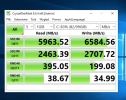

I tested ZFS RAID 10 on 12 Samsung 2 TB NVMe drives, with ashift set to 13 and lz4 compression. The VM runs Windows Server 2019 Standard with a VirtIO SCSI controller, and the results are poor.

A similar configuration with SAS RAID 10 on 8 hard disks and the embedded RAID controller gives much better results, especially for writes. Are there any best practices for fixing this?
