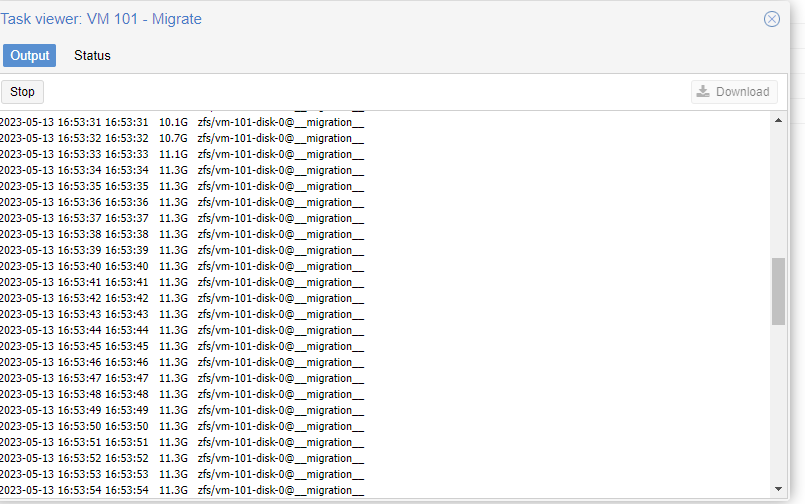

One of my servers is taking a very long time to migrate data. As you can see from this screenshot, it does eventually complete.

Syslog shows the following. Is it a sign of a bad disk? nvme2n1p1 and nvme3n1p1 are in the ZFS pool.

Code:

May 13 16:45:16 HOME1 zed: eid=90 class=delay pool='zfs' vdev=nvme3n1p1 size=131072 offset=142885580800 priority=2 err=0 flags=0x40080c80 delay=30450ms

May 13 16:45:48 HOME1 zed: eid=91 class=delay pool='zfs' vdev=nvme2n1p1 size=8192 offset=143091658752 priority=2 err=0 flags=0x180880 delay=30521ms bookmark=2965:1:0:322341

May 13 16:45:48 HOME1 kernel: [ 6270.862011] nvme nvme2: I/O 863 QID 28 timeout, completion polled

May 13 16:46:04 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:46:29 HOME1 kernel: [ 6311.821119] nvme nvme2: I/O 845 QID 28 timeout, completion polled

May 13 16:46:29 HOME1 kernel: [ 6311.821218] nvme nvme3: I/O 234 QID 28 timeout, completion polled

May 13 16:46:29 HOME1 zed: eid=92 class=delay pool='zfs' vdev=nvme2n1p1 size=36864 offset=145545158656 priority=2 err=0 flags=0x40080c80 delay=31650ms

May 13 16:46:29 HOME1 zed: eid=93 class=delay pool='zfs' vdev=nvme3n1p1 size=8192 offset=145607200768 priority=2 err=0 flags=0x180880 delay=31643ms bookmark=2965:1:0:634406

May 13 16:46:32 HOME1 pvedaemon[11992]: <root@pam> end task UPID:HOME1:00081BE5:00096673:645FB03E:qmigrate:100:root@pam: OK

May 13 16:47:17 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:47:33 HOME1 pveproxy[12003]: worker exit

May 13 16:47:33 HOME1 pveproxy[2400]: worker 12003 finished

May 13 16:47:33 HOME1 pveproxy[2400]: starting 1 worker(s)

May 13 16:47:33 HOME1 pveproxy[2400]: worker 664033 started

May 13 16:47:38 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:47:58 HOME1 pveproxy[664033]: Clearing outdated entries from certificate cache

May 13 16:47:59 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:48:13 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:49:02 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:49:09 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:49:55 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:49:55 HOME1 systemd[1]: Started Session 13 of user root.

May 13 16:49:55 HOME1 systemd[1]: session-13.scope: Succeeded.

May 13 16:49:55 HOME1 systemd[1]: Started Session 14 of user root.

May 13 16:49:55 HOME1 systemd[1]: session-14.scope: Succeeded.

May 13 16:49:56 HOME1 systemd[1]: Started Session 15 of user root.

May 13 16:49:56 HOME1 kernel: [ 6518.409249] debugfs: Directory 'zd48' with parent 'block' already present!

May 13 16:49:56 HOME1 systemd-udevd[664945]: zd48: Failed to create symlink '/dev/zfs/vm-100-cloudinit.tmp-b230:48' to '../zd48': Not a directory

May 13 16:49:56 HOME1 systemd[1]: session-15.scope: Succeeded.

May 13 16:49:56 HOME1 systemd[1]: Started Session 16 of user root.

May 13 16:49:57 HOME1 systemd[1]: session-16.scope: Succeeded.

May 13 16:49:57 HOME1 systemd[1]: Started Session 17 of user root.

May 13 16:50:03 HOME1 pmxcfs[1796]: [status] notice: received log

May 13 16:50:29 HOME1 kernel: [ 6551.431866] nvme nvme3: I/O 801 QID 8 timeout, completion polled

May 13 16:50:29 HOME1 kernel: [ 6551.431950] nvme nvme3: I/O 592 QID 83 timeout, completion polled

May 13 16:50:29 HOME1 kernel: [ 6551.431977] nvme nvme2: I/O 638 QID 2 timeout, completion polled

May 13 16:50:29 HOME1 kernel: [ 6551.432033] nvme nvme2: I/O 434 QID 56 timeout, completion polled

May 13 16:50:29 HOME1 zed: eid=106 class=delay pool='zfs' vdev=nvme3n1p1 size=131072 offset=257751900160 priority=3 err=0 flags=0x40080c80 delay=30252ms

May 13 16:50:29 HOME1 zed: eid=105 class=delay pool='zfs' vdev=nvme3n1p1 size=131072 offset=257740513280 priority=3 err=0 flags=0x40080c80 delay=30276ms

May 13 16:50:29 HOME1 zed: eid=108 class=delay pool='zfs' vdev=nvme2n1p1 size=131072 offset=240563412992 priority=3 err=0 flags=0x40080c80 delay=30305ms

May 13 16:50:29 HOME1 zed: eid=107 class=delay pool='zfs' vdev=nvme2n1p1 size=131072 offset=240563675136 priority=3 err=0 flags=0x40080c80 delay=30304ms

May 13 16:51:02 HOME1 kernel: [ 6584.199212] nvme nvme3: I/O 278 QID 7 timeout, completion polled

May 13 16:51:02 HOME1 kernel: [ 6584.199241] nvme nvme3: I/O 15 QID 78 timeout, completion polled

May 13 16:51:02 HOME1 kernel: [ 6584.199289] nvme nvme2: I/O 476 QID 70 timeout, completion polled

May 13 16:51:02 HOME1 zed: eid=109 class=delay pool='zfs' vdev=nvme2n1p1 size=131072 offset=242410397696 priority=3 err=0 flags=0x40080c80 delay=30942ms

May 13 16:51:02 HOME1 zed: eid=110 class=delay pool='zfs' vdev=nvme3n1p1 size=131072 offset=259565223936 priority=3 err=0 flags=0x40080c80 delay=30981ms

May 13 16:51:02 HOME1 zed: eid=111 class=delay pool='zfs' vdev=nvme3n1p1 size=122880 offset=259561218048 priority=3 err=0 flags=0x40080c80 delay=30986ms

May 13 16:51:35 HOME1 zed: eid=112 class=delay pool='zfs' vdev=nvme3n1p1 size=131072 offset=262066233344 priority=3 err=0 flags=0x40080c80 delay=30093ms

May 13 16:51:35 HOME1 kernel: [ 6616.966482] nvme nvme3: I/O 426 QID 20 timeout, completion polled

May 13 16:51:35 HOME1 kernel: [ 6617.076187] debugfs: Directory 'zd64' with parent 'block' already present!

May 13 16:51:35 HOME1 kernel: [ 6617.080991] zd64: p1 p14 p15

May 13 16:51:35 HOME1 systemd-udevd[704553]: zd64: Failed to create symlink '/dev/zfs/vm-100-disk-0.tmp-b230:64' to '../zd64': Not a directory

May 13 16:51:35 HOME1 systemd-udevd[704563]: zd64p14: Failed to create symlink '/dev/zfs/vm-100-disk-0-part14.tmp-b230:78' to '../zd64p14': Not a directory

May 13 16:51:35 HOME1 systemd-udevd[704564]: zd64p15: Failed to create symlink '/dev/zfs/vm-100-disk-0-part15.tmp-b230:79' to '../zd64p15': Not a directory

May 13 16:51:35 HOME1 systemd-udevd[704553]: zd64p1: Failed to create symlink '/dev/zfs/vm-100-disk-0-part1.tmp-b230:65' to '../zd64p1': Not a directory
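
For anyone who wants to tally the zed delay events instead of reading them by eye, here is a minimal Python sketch. It assumes the log lines look exactly like the excerpt above; the function name `summarize_delays` and the regular expression are my own and not part of any ZFS tooling. It only counts events and records the longest delay per vdev, which may help show whether one device or both are affected. It does not diagnose the cause.

```python
import re
from collections import defaultdict

# Matches zed "delay" events in the format seen in the syslog excerpt above.
# Assumption: vdev= comes before delay= on the same line, as in that excerpt.
DELAY_RE = re.compile(r"zed: eid=\d+ class=delay .*?vdev=(\S+) .*?delay=(\d+)ms")


def summarize_delays(lines):
    """Return {vdev: (event_count, max_delay_ms)} for zed delay events."""
    stats = defaultdict(lambda: [0, 0])
    for line in lines:
        match = DELAY_RE.search(line)
        if match is None:
            continue  # kernel, pveproxy, systemd lines etc. are ignored
        vdev, delay_ms = match.group(1), int(match.group(2))
        stats[vdev][0] += 1
        stats[vdev][1] = max(stats[vdev][1], delay_ms)
    return {vdev: (count, worst) for vdev, (count, worst) in stats.items()}


# Three lines copied from the excerpt above; the kernel line is skipped.
sample = [
    "May 13 16:45:16 HOME1 zed: eid=90 class=delay pool='zfs' vdev=nvme3n1p1 "
    "size=131072 offset=142885580800 priority=2 err=0 flags=0x40080c80 delay=30450ms",
    "May 13 16:45:48 HOME1 zed: eid=91 class=delay pool='zfs' vdev=nvme2n1p1 "
    "size=8192 offset=143091658752 priority=2 err=0 flags=0x180880 delay=30521ms "
    "bookmark=2965:1:0:322341",
    "May 13 16:45:48 HOME1 kernel: [ 6270.862011] nvme nvme2: I/O 863 QID 28 "
    "timeout, completion polled",
]

for vdev, (count, worst) in sorted(summarize_delays(sample).items()):
    print(f"{vdev}: {count} delay event(s), longest {worst} ms")
```

To run it against a real log, pass an open file instead of `sample`, for example `summarize_delays(open("/var/log/syslog"))`; the path is an assumption and may differ on your system.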