Hello,

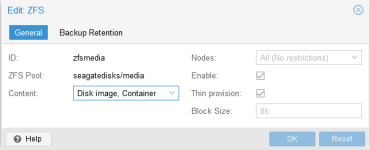

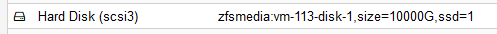

I'm going crazy trying to set up ZFS on my server. I'm new to the ZFS world, so I'm experimenting a bit to understand how it works before migrating all my data.

I have a 3x4TB ZFS RAIDZ1 pool, created with this command in PVE:

```
zpool create zfspool -f -o ashift=12 raidz /dev/disk/by-id/ata-ZZZ /dev/disk/by-id/ata-XXX /dev/disk/by-id/ata-YYY
```

I have some questions that I hope you can help me shed some light on:

- I don't understand why Proxmox lets me add a virtual hard disk larger than the ~7.5TB maximum available, for instance a 10TB one.

I can add several of them, and each guest machine sees its disk as 10TB.

How can I manage my free space then?

What will happen once the pool reaches ~7.5TB? Will some guest machines still show free space but be unable to write to it?
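The ~7.5TB ceiling can be estimated with some quick arithmetic. RAIDZ1 uses one disk's worth of space for parity, so three 4 TB drives leave about 8 TB (roughly 7.27 TiB) for data. ZFS then reports somewhat less than that as AVAIL (6.98T in the output below) because of metadata, RAIDZ allocation overhead and space it keeps in reserve. The rough sketch below is illustrative, not a measurement. It assumes the drives are 4 TB in the vendor (decimal) sense. It also uses three hypothetical 10TB guest disks to show how thin-provisioned virtual disks can add up to more than the pool can actually hold.

```shell
#!/bin/sh
# Rough thin-provisioning check using integer shell arithmetic (sizes in bytes).
# Assumptions: drives are 4 TB in the vendor sense (4 * 10^12 bytes), and
# three guests each have a 10 TB virtual disk (hypothetical numbers).
disks=3
disk_bytes=4000000000000
parity=1                                   # RAIDZ1 = one disk's worth of parity
pool_bytes=$(( (disks - parity) * disk_bytes ))

provisioned=0
for vdisk_tb in 10 10 10; do               # hypothetical guest disk sizes in TB
  provisioned=$(( provisioned + vdisk_tb * 1000000000000 ))
done

tib=$(( 1 << 40 ))                         # zfs/zpool print "T" meaning TiB
pool_hundredths=$(( pool_bytes * 100 / tib ))
prov_hundredths=$(( provisioned * 100 / tib ))
printf 'pool data capacity (before overhead): %d.%02d TiB\n' \
  $(( pool_hundredths / 100 )) $(( pool_hundredths % 100 ))
printf 'provisioned to guests:                %d.%02d TiB\n' \
  $(( prov_hundredths / 100 )) $(( prov_hundredths % 100 ))
if [ "$provisioned" -gt "$pool_bytes" ]; then
  echo "overcommitted: guests were promised more than the pool can hold"
fi
```

With these assumed sizes, the script prints about 7.27 TiB of data capacity against about 27.28 TiB promised to the guests. That is the kind of gap thin provisioning allows.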

I ran this command to see the free space:

```
zfs list -o space
NAME                              AVAIL   USED  USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
seagatedisks/media                6.98T  26.1G        0B    128K             0B      26.1G
seagatedisks/media/vm-105-disk-0  6.98T  4.78G        0B   4.78G             0B         0B
seagatedisks/media/vm-113-disk-0  6.98T  21.3G        0B   21.3G             0B         0B
seagatedisks/media/vm-113-disk-1  6.98T  74.6K        0B   74.6K             0B         0B
```

I was writing some files to this disk:

```
seagatedisks/media/vm-113-disk-0  6.98T  21.3G        0B   21.3G             0B         0B
```

But even after I removed the files and formatted the disk in the guest machine, it still shows 21.3G used.
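One way to keep an eye on the pool is to total what the zvols actually use and compare that with AVAIL. The minimal sketch below parses the `zfs list -o space` rows copied from the output above. The `seagatedisks/media/vm-` prefix comes from that output and would need adjusting for another pool. On a live host you could instead pipe in the output of `zfs list -H -o name,avail,used` (tab-separated, no header), and add `-p` to get exact byte values. Keep in mind that USED probably only goes down when the guest's deletes reach ZFS as discard/TRIM requests. That is a likely reason vm-113-disk-0 still shows 21.3G.

```shell
#!/bin/sh
# Total the USED column of the guest zvols and compare it with AVAIL.
# Sample rows copied from the `zfs list -o space` output in this post.
sample='NAME AVAIL USED USEDSNAP USEDDS USEDREFRESERV USEDCHILD
seagatedisks/media 6.98T 26.1G 0B 128K 0B 26.1G
seagatedisks/media/vm-105-disk-0 6.98T 4.78G 0B 4.78G 0B 0B
seagatedisks/media/vm-113-disk-0 6.98T 21.3G 0B 21.3G 0B 0B
seagatedisks/media/vm-113-disk-1 6.98T 74.6K 0B 74.6K 0B 0B'

summary=$(printf '%s\n' "$sample" | awk '
# Convert a zfs size such as "26.1G" to bytes (K/M/G/T are powers of 1024).
function bytes(v,   n, u) {
  n = v + 0                       # numeric prefix, e.g. 26.1
  u = substr(v, length(v), 1)     # unit suffix, e.g. G
  if (u == "K") return n * 1024
  if (u == "M") return n * 1024^2
  if (u == "G") return n * 1024^3
  if (u == "T") return n * 1024^4
  return n                        # "B" suffix or an exact -p value
}
# Only the zvol rows match (column 2 = AVAIL, column 3 = USED);
# the header line and the parent dataset are skipped.
$1 ~ /^seagatedisks\/media\/vm-/ {
  used += bytes($3)
  avail = bytes($2)
}
END { printf "zvols use %.1f GiB; %.2f TiB still available\n", used / 1024^3, avail / 1024^4 }
')
echo "$summary"
```

For the rows above, this prints `zvols use 26.1 GiB; 6.98 TiB still available`.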

So, again, how can I manage my total free space? I'm really confused.

Sorry if this is not the right place to raise these questions; I wasn't sure where else to ask.

Thank you,