I had a bit of time to look at this again.

TL;DR: the speedup came both from Direct IO and from optimizations in other areas.

I took one of our PVE8 hosts with the same hardware and updated its kernel with `apt install proxmox-kernel-6.14`, which is the same build as the latest PVE9 kernel. Performance increased by 20% for:

```sh
rm /dev/zvol/rpool/data/test.file
fio --filename=/dev/zvol/rpool/data/test.file --name=sync_randrw --rw=randrw --bs=4M --direct=0 --sync=1 --numjobs=1 --ioengine=psync --iodepth=1 --refill_buffers --size=8G --loops=64 --group_reporting
```

But I don't really see a difference with this command when toggling `--direct`:

```sh
fio --filename=/dev/zvol/rpool/data/test.file --name=sync_randrw --rw=randrw --bs=4M --direct=0 --sync=1 --numjobs=1 --ioengine=psync --iodepth=1 --refill_buffers --size=8G --loops=64 --group_reporting
```

(Run once as shown with `--direct=0` and once with `--direct=1`.)

So it could very well be that other optimizations were at play.
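To repeat that `--direct` toggle without hand-editing the command, the two runs can be wrapped in a small function. This is a minimal POSIX `sh` sketch, not what I actually ran: the function names `run_sync_randrw` and `compare_direct` and the `FIO`/`TEST_FILE` overrides are hypothetical additions, and it omits the `rm` step. The fio options are the ones from the command above.

```sh
#!/bin/sh
# Minimal sketch with hypothetical helper names: run the same sync randrw fio
# job with a chosen --direct value. FIO and TEST_FILE can be overridden, e.g.
# FIO=echo for a dry run that only prints the fio arguments.
TEST_FILE=${TEST_FILE:-/dev/zvol/rpool/data/test.file}

run_sync_randrw() {
  # $1: 0 = buffered I/O through the ARC, 1 = O_DIRECT
  ${FIO:-fio} --filename="$TEST_FILE" --name=sync_randrw --rw=randrw --bs=4M \
    --direct="$1" --sync=1 --numjobs=1 --ioengine=psync --iodepth=1 \
    --refill_buffers --size=8G --loops=64 --group_reporting
}

compare_direct() {
  # Run the identical job twice: buffered first, then with Direct IO
  for d in 0 1; do
    echo "=== --direct=$d ==="
    run_sync_randrw "$d"
  done
}
```

Usage: `compare_direct` runs both variants back to back; `FIO=echo compare_direct` only prints the two fio command lines so you can check them first.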

Then, focusing on a command that exposes the difference between `direct=0` and `direct=1`, I do see a difference of roughly 1.25x (about 25% more throughput) on PVE9 with identical hardware:

- 7422 MiB/s with ARC (`--direct=0`)
- 9274 MiB/s with Direct IO (`--direct=1`)

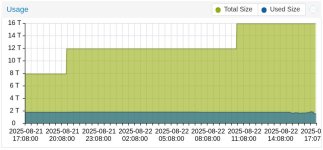
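For reference, the ratio between the two throughput figures above can be computed directly. This is just arithmetic on those two numbers, wrapped in a hypothetical `speedup` helper that uses `awk`.

```sh
#!/bin/sh
# Hypothetical helper: ratio and percentage gain of one throughput figure
# over a baseline. $1 = new value (e.g. Direct IO), $2 = baseline (e.g. ARC),
# both in the same unit.
speedup() {
  awk -v new="$1" -v base="$2" \
    'BEGIN { printf "%.2fx (+%.1f%%)\n", new / base, (new - base) / base * 100 }'
}

speedup 9274 7422   # prints: 1.25x (+25.0%)
```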

Direct (`--direct=1`):

```sh
fio --name=directio-benefit \
  --filename=/dev/zvol/rpool/data/test.file \
  --rw=write \
  --bs=1M \
  --size=4G \
  --ioengine=libaio \
  --direct=1 \
  --numjobs=4 \
  --iodepth=64 \
  --runtime=60 \
  --loops=64 \
  --group_reporting
```

Cached/ARC (`--direct=0`):

```sh
fio --name=cached-comparison \
  --filename=/dev/zvol/rpool/data/test.file \
  --rw=write \
  --bs=1M \
  --size=4G \
  --ioengine=libaio \
  --direct=0 \
  --numjobs=4 \
  --iodepth=64 \
  --runtime=60 \
  --loops=64 \
  --group_reporting
```

So now I'm fairly sure Direct IO is present in PVE9 and that it responds to being enabled. Actual gains depend heavily on the workload and hardware. The results above come from a ZFS RAID10 (striped mirrors) pool of eight Samsung PM1743 7.68 TB drives in a dual-socket Dell R6725 server.
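If you want to confirm on your own host that the installed ZFS supports Direct IO before benchmarking, one option is to check the ZFS version and the dataset's `direct` property, which OpenZFS added together with Direct IO in 2.3 (values `standard`, `always`, `disabled`). This is a sketch under assumptions: the `check_direct` name is hypothetical, the default dataset `rpool/data` is a guess at your layout, and the property semantics should be double-checked in `man zfsprops` for your version.

```sh
#!/bin/sh
# Hypothetical check: print the ZFS version and interpret the "direct"
# property of a dataset. Assumes OpenZFS >= 2.3, where "direct" exists.
check_direct() {
  ds=${1:-rpool/data}   # assumed dataset name; adjust to your pool
  zfs version
  # -H: scripted output without header; -o value: print only the value
  if val=$(zfs get -H -o value direct "$ds" 2>/dev/null); then
    case $val in
      standard) echo "$ds: direct=standard (honors O_DIRECT)" ;;
      always)   echo "$ds: direct=always (eligible I/O is treated as direct)" ;;
      disabled) echo "$ds: direct=disabled (O_DIRECT ignored, I/O goes through the ARC)" ;;
      *)        echo "$ds: unexpected value '$val'" ;;
    esac
  else
    echo "$ds: 'direct' property not available (ZFS older than 2.3?)" >&2
    return 1
  fi
}
```

Usage: `check_direct rpool/data`, adjusted to the dataset you benchmark.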