I bought a tiny SBC as a qdevice, so I thought, heck, now that I'm going for it I might as well set this up right. I haven't tried the qdevice yet, but I changed the hostnames (yes, stupid, I learned) on the two nodes. I'm now left with a working but weird-looking setup.

What I did thus far (Proxmox 8.04 on both boxes):

1. Changed the hostnames on both nodes, in /etc/hosts and /etc/hostname.

2. Rebooted node2 only first, which broke the cluster (obvious in hindsight) with all sorts of web GUI problems, and I panicked. SSH still worked.

3. Changed /etc/hosts and /etc/hostname back on both nodes and rebooted node2 again.

4. The cluster came back online and everything worked, including the green ticks showing all is OK. Node1 has not been rebooted yet.

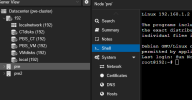

5. BUT while the SSH prompt of node2 was correct, like I'm used to, the prompt of node1 looked weird:

6. To keep calm, I decided to postpone the reboot of node1.

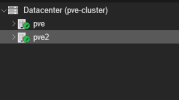

7. Now, 2 days later, I suddenly see a ghost node pop up in my datacenter when I log in via node1, and the green OK ticks on the 2 nodes are gone. Or was it always there and I didn't see it? Node1 is my default login, so I think it's new.

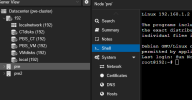

Logging in through node2 gives me the correct stats:

Remember, I have not rebooted node1 yet, but now I'm even more scared to do so.

The question: should I reboot node1, or should I double-check something first?

For reference, the prompt on node1 currently reads:

root@192:~#

I checked both hosts files and, as far as I know, I never made a typo, though it almost feels like a file is being parsed incorrectly.

Code:

root@192:~# cat /etc/hosts
127.0.0.1 localhost.localdomain localhost
192.168.1.2 pve.local pve

# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
ff02::3 ip6-allhosts

root@192:~# cat /etc/hostname
pve

root@192:~# pvecm status
Cluster information
-------------------
Name: pve-cluster
Config Version: 6
Transport: knet
Secure auth: on

Quorum information
------------------
Date: Sun Nov 19 11:08:14 2023
Quorum provider: corosync_votequorum
Nodes: 2
Node ID: 0x00000001
Ring ID: 1.328
Quorate: Yes

Votequorum information
----------------------
Expected votes: 2
Highest expected: 2
Total votes: 2
Quorum: 2
Flags: Quorate

Membership information
----------------------
Nodeid Votes Name
0x00000001 1 192.168.1.2 (local)
0x00000002 1 192.168.1.3
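
One thing that can be double-checked before a reboot is whether the name in /etc/hostname maps, via /etc/hosts, to the node's real address and not to a loopback address. The following is only a rough sketch of that check in Python, run against a copy of the hosts lines pasted above. The helper names (parse_hosts, address_for, prompt_host) are made up for illustration, and the script only reads text; it does not touch any cluster config.

```python
import ipaddress

# Copy of the relevant /etc/hosts lines from the output above.
HOSTS_TEXT = """\
127.0.0.1 localhost.localdomain localhost
192.168.1.2 pve.local pve
# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
"""


def parse_hosts(text):
    """Map each name in hosts-file text to the first address listed for it."""
    table = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            table.setdefault(name, address)
    return table


def address_for(hostname, hosts_text):
    """Return (address, is_loopback) for hostname, or None if it is not listed."""
    address = parse_hosts(hosts_text).get(hostname)
    if address is None:
        return None
    return address, ipaddress.ip_address(address).is_loopback


def prompt_host(hostname):
    # bash's \h prompt escape shows the hostname up to the first dot.
    return hostname.split(".", 1)[0]


print(address_for("pve", HOSTS_TEXT))
print(prompt_host("192.168.1.2"))
```

With the files as pasted, pve maps to 192.168.1.2, which is not a loopback address, so the files themselves look consistent. The second call shows that a prompt of 192 is what bash would print if the running hostname were an IP-like string such as 192.168.1.2. That is only a guess at the cause, not something the output above confirms.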
