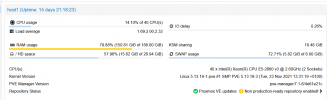

We are running two SQL Server 2017 servers on Windows Server 2016. Every so often the disk active time suddenly jumps to 100% and query execution becomes slow. After some time it returns to normal and everything works correctly again.

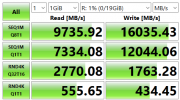

We also have a third VM running Windows Server 2016 on the same storage. While the other two VMs are at 100% disk utilization, an IOPS test on the third VM gives 130,000 IOPS, and I can do other tasks on it, such as file copies, without any issue.

All the servers have the VirtIO drivers installed.

VM1: 80 GB RAM, 14 vCPUs (NUMA), 2 TB total storage

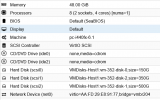

VM2: 48 GB RAM, 8 vCPUs (NUMA), 1 TB total storage

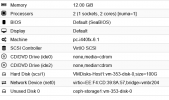

Test VM: 12 GB RAM, 2 vCPUs (NUMA), 100 GB storage

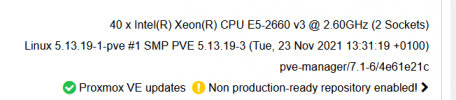

The host uses hardware RAID 5 on a PERC H730 Mini controller with 4x Intel D3-S4610 SSDs.

All three VMs run on Proxmox LVM on this RAID pool. There is no LVM-thin pool.

Can anyone help me figure out what the possible cause is?
