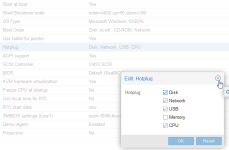

I too had issues with CPU hotplugging on Proxmox inside a Windows Server 2016 guest, and getting it working required fixing some things inside the guest. While digging, I found a bug reported to Red Hat against QEMU that is really an ACPI bug in Server 2016 (and Windows 10). Here is the link: https://bugzilla.redhat.com/show_bug.cgi?id=1377155

In short, Microsoft ships a non-standards-compliant ACPI configuration out of the box, but it can be fixed fairly easily to work with QEMU/Proxmox. Because of this ACPI issue, Windows cannot see any CPUs that were not present at boot, and in fact no CPUs show up in Device Manager at all. You also get an errored "HID Button over Interrupt Driver" device in Device Manager. This process fixes CPU hotplugging and clears that errored device.

I also wrote a simple batch script to automate the process. To run it, you need two Microsoft executables that are not included in Windows. You need psexec.exe from Sysinternals to run the reg commands in the SYSTEM context, which is what allows them to delete the bad registry keys. You also need devcon.exe from the Windows Driver Kit (WDK). To avoid installing the whole kit, which you likely don't need, follow this link and do the administrative install, which just downloads all the kit components. When you get to the point of extracting the MSI, the correct one is Windows Driver Kit-x86_en-us.msi, not the one the post mentions; that file no longer exists because the post is quite old.
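Before changing anything, a read-only check along these lines may help confirm the guest actually has this problem. It is a sketch that assumes devcon.exe and PsExec.exe are in the current directory or on PATH, and it reuses the device ID and registry paths from the script; the hidinterrupt.inf_amd64_... folder name comes from my system and may differ on yours.

```bat
@echo off
rem Read-only pre-check; run from an elevated (administrator) command prompt.
rem Assumption: devcon.exe and PsExec.exe are in the current directory or on PATH.

rem Status of the ACPI0010 device (the errored "HID Button over Interrupt Driver").
devcon.exe status "ACPI\VEN_ACPI&DEV_0010"

rem Devices in the Processor setup class; per the symptoms above, an affected guest shows none.
devcon.exe status =Processor

rem Query (not delete) the two DriverDatabase keys, running reg as SYSTEM via PsExec -s.
PsExec.exe -accepteula -s reg query "HKLM\SYSTEM\DriverDatabase\DeviceIds\ACPI\ACPI0010"
PsExec.exe -accepteula -s reg query "HKLM\SYSTEM\DriverDatabase\DriverPackages\hidinterrupt.inf_amd64_d01b78dcb2395f49\Descriptors\ACPI\ACPI0010"
```

If reg query reports that it cannot find a key, check the actual hidinterrupt.inf_amd64_* folder name under DriverPackages in regedit before deleting anything.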

Run the script or do the steps manually and reboot the guest. CPU hotplugging (at least to add cores) will then work.

Script is here:

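```bat
@echo off
rem Fix for CPU hotplug in Windows Server 2016 / Windows 10 guests on Proxmox (QEMU).
rem Run from an elevated (administrator) command prompt.
rem The UNC paths below are my own shares; point them at wherever you keep
rem devcon.exe (from the WDK) and PsExec.exe (from Sysinternals).
set "DEVCON=\\castlefiles.transmit.local\shares2\Software\Castle_Scripts\fix_acpi_proxmox\devcon.exe"
set "PSEXEC=\\castlefiles\shares2\Software\Downloads\SysinternalsSuite\PsExec.exe"

echo Removing bad device
"%DEVCON%" remove "ACPI\VEN_ACPI&DEV_0010"
pause

rem The registry keys are deleted as SYSTEM (PsExec -s).
rem The hidinterrupt.inf_amd64_... folder name may differ on other builds; check it in regedit.
echo Removing 1st regkey
"%PSEXEC%" -accepteula -s reg delete "HKLM\SYSTEM\DriverDatabase\DriverPackages\hidinterrupt.inf_amd64_d01b78dcb2395f49\Descriptors\ACPI\ACPI0010" /f
pause

echo Removing 2nd regkey
"%PSEXEC%" -accepteula -s reg delete "HKLM\SYSTEM\DriverDatabase\DeviceIds\ACPI\ACPI0010" /f
pause

echo Rebooting in 30 seconds
shutdown /r /t 30
```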
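After the reboot, and after hot-adding vCPUs to the VM in Proxmox, a similar check can show whether Windows now picks them up. Again this is only a sketch, assuming devcon.exe is in the current directory or on PATH; wmic is built into Windows Server 2016.

```bat
@echo off
rem Post-fix check: run after rebooting and after hot-adding vCPUs in Proxmox.
rem Assumption: devcon.exe is in the current directory or on PATH.

rem Processor-class devices should now be listed, typically one entry per logical processor.
devcon.exe status =Processor

rem Cross-check what WMI reports for the processors.
wmic cpu get DeviceID,NumberOfCores,NumberOfLogicalProcessors
```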