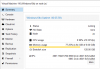

After enabling ballooning and following the wiki (I installed the drivers and got the ballooning service running on Windows), the RAM usage shown in the Proxmox panel did not go down; instead, RAM usage went up inside the VM. Minimum RAM: 2048 MB; total RAM allocated to the VM: 14336 MB. Usage is now at 90%, not only in the Proxmox panel but in the VM's Task Manager too.
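For reference, the memory settings described above would look roughly like this in the VM's config file. This is a sketch built from the two values I mentioned, not a copy of my actual file; the `<vmid>` in the path is a placeholder and any other lines in the file are omitted.

```
# /etc/pve/qemu-server/<vmid>.conf  (<vmid> is a placeholder)
# total RAM allocated to the VM, in MB
memory: 14336
# minimum RAM the balloon may shrink the VM to, in MB
balloon: 2048
```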

Edit: Uninstalling the ballooning service or stopping it didn't help. RAM usage was still over 90% all the time (even right after boot), which is over 12 GB of the 14 GB allocated to the VM.

But after I pressed "Uninstall device" on the Virtio Ballooning device in Device Manager, the problem was fixed: current RAM usage is only 2.5 GB inside the VM. However, Proxmox is not reporting that figure, which is why I want to use the ballooning service.

Edit 2: It seems there is a memory leak in the Windows 10 ballooning driver. After I installed the driver again and checked Task Manager, RAM usage was growing by about 100 MB every 3-4 seconds and it wouldn't stop.

