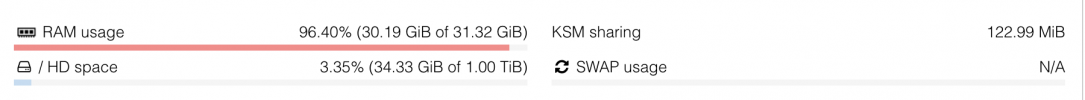

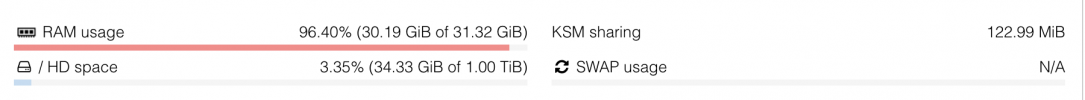

We have a PVE 7.1 node with 32 GB of RAM.

We run only 6 LXC containers on this node. According to the PVE web UI, each one uses approx. 100 MB of RAM (the maximum allowed per container is 512 MB).

So my question is: why, and where, did all the RAM go? Yes, we run ZFS, but it seems to be using only 509.6 MiB.
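For a sense of scale, here is a quick back-of-the-envelope sum of the figures quoted above. It is only an estimate: it treats the web UI's "MB" as MiB and ignores the host's own processes.

```python
# Rough memory budget built only from the figures quoted in this post.
# Assumption: the web UI's "MB" is treated as MiB for this estimate.
CONTAINERS = 6
USED_PER_CT_MIB = 100    # approx. usage per container (PVE web UI)
LIMIT_PER_CT_MIB = 512   # max memory allowed per container
ARC_MIB = 509.6          # ZFS ARC size quoted above
TOTAL_GIB = 32

def budget_gib(per_ct_mib: float) -> float:
    """Memory used by all containers plus the ARC, in GiB."""
    return (CONTAINERS * per_ct_mib + ARC_MIB) / 1024

typical = budget_gib(USED_PER_CT_MIB)
worst = budget_gib(LIMIT_PER_CT_MIB)
print(f"typical: {typical:.2f} GiB, worst case: {worst:.2f} GiB of {TOTAL_GIB} GiB")
# prints: typical: 1.08 GiB, worst case: 3.50 GiB of 32 GiB
```

Even with every container at its limit, that is roughly 3.5 GiB out of 32 GiB, which is why the 29 GiB shown as used by `free` is so puzzling.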


```
proxmox-ve: 7.1-1 (running kernel: 5.13.19-4-pve)
pve-manager: 7.1-12 (running version: 7.1-12/b3c09de3)
pve-kernel-helper: 7.1-14
pve-kernel-5.13: 7.1-9
pve-kernel-5.11: 7.0-10
pve-kernel-5.13.19-6-pve: 5.13.19-15
pve-kernel-5.13.19-5-pve: 5.13.19-13
pve-kernel-5.13.19-4-pve: 5.13.19-9
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.11.22-7-pve: 5.11.22-12
pve-kernel-5.11.22-4-pve: 5.11.22-9
ceph-fuse: 15.2.14-pve1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.22-pve2
libproxmox-acme-perl: 1.4.1
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.1-7
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.1-5
libpve-guest-common-perl: 4.1-1
libpve-http-server-perl: 4.1-1
libpve-storage-perl: 7.1-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.11-1
lxcfs: 4.0.11-pve1
novnc-pve: 1.3.0-2
proxmox-backup-client: 2.1.5-1
proxmox-backup-file-restore: 2.1.5-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.4-7
pve-cluster: 7.1-3
pve-container: 4.1-4
pve-docs: 7.1-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.3-6
pve-ha-manager: 3.3-3
pve-i18n: 2.6-2
pve-qemu-kvm: 6.1.1-2
pve-xtermjs: 4.16.0-1
qemu-server: 7.1-4
smartmontools: 7.2-1
spiceterm: 3.2-2
swtpm: 0.7.1~bpo11+1
vncterm: 1.7-1
zfsutils-linux: 2.1.4-pve1
```


```
------------------------------------------------------------------------
ZFS Subsystem Report                            Mon Apr 18 16:28:30 2022
Linux 5.13.19-4-pve                                           2.1.2-pve1
Machine: elbrus (x86_64)                                      2.1.2-pve1

ARC status:                                                    THROTTLED
        Memory throttle count:                                     90665

ARC size (current):                                    50.5 %  517.0 MiB
        Target size (adaptive):                        50.0 %  512.0 MiB
        Min size (hard limit):                         50.0 %  512.0 MiB
        Max size (high water):                           2:1    1.0 GiB
        Most Frequently Used (MFU) cache size:         45.6 %  195.8 MiB
        Most Recently Used (MRU) cache size:           54.4 %  233.4 MiB
        Metadata cache size (hard limit):              75.0 %  768.0 MiB
        Metadata cache size (current):                 15.9 %  122.4 MiB
        Dnode cache size (hard limit):                 10.0 %   76.8 MiB
        Dnode cache size (current):                    30.3 %   23.3 MiB
```

```
options zfs zfs_arc_min=536870911
options zfs zfs_arc_max=1073741824
options zfs l2arc_noprefetch=0
```

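To cross-check the ARC numbers independently of `arc_summary`, a minimal sketch can read the OpenZFS kstat file `/proc/spl/kstat/zfs/arcstats` (the file `arc_summary` reads on Linux) and print the current size, target, limits and throttle count in MiB. The parsing layout (a kstat header line, a `name type data` line, then one row per statistic) is an assumption based on how that file is usually formatted. Note that `zfs_arc_min=536870911` is one byte short of 512 MiB (536870912 bytes), which `arc_summary` rounds to 512.0 MiB.

```python
# Minimal sketch: parse the OpenZFS ARC kstat file and report a few values.
# Assumption: Linux kstat layout (header line, "name type data" line, then
# one "name type value" row per statistic).
from __future__ import annotations

from pathlib import Path

ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")
MIB = 1024 * 1024

def parse_arcstats(text: str) -> dict[str, int]:
    """Return {name: value} for every integer row in an arcstats dump."""
    stats: dict[str, int] = {}
    for line in text.splitlines()[2:]:  # skip the kstat header and column names
        fields = line.split()
        if len(fields) != 3:
            continue
        name, _type, data = fields
        try:
            stats[name] = int(data)
        except ValueError:
            pass  # ignore any non-integer rows
    return stats

def summarize(stats: dict[str, int]) -> None:
    """Print ARC size, target and limits in MiB, plus the throttle counter."""
    for key in ("size", "c", "c_min", "c_max"):
        if key in stats:
            print(f"{key:>6}: {stats[key] / MIB:10.1f} MiB")
    if "memory_throttle_count" in stats:
        print(f"memory_throttle_count: {stats['memory_throttle_count']}")

if __name__ == "__main__":
    if ARCSTATS.exists():  # only present on hosts with the ZFS module loaded
        summarize(parse_arcstats(ARCSTATS.read_text()))
```

If `size` stays around 0.5 GiB here as well, the ARC can be ruled out as the consumer of the missing ~28 GiB.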

```
               total        used        free      shared  buff/cache   available
Mem:            31Gi        29Gi       854Mi        68Mi       909Mi       373Mi
Swap:             0B          0B          0B
```
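Since `free` only shows the total, a minimal sketch like the one below can break "used" down using `/proc/meminfo`, printing kernel-side fields (slab, page tables, vmalloc, hugetlb, and so on) that are not attributed to any process or container. The `used` formula assumes procps-ng 3.3.x, as shipped with Debian 11 / PVE 7 (`MemTotal - MemFree - Buffers - Cached - SReclaimable`); newer procps-ng releases compute it as `MemTotal - MemAvailable` instead. Fields missing on a given kernel are simply skipped.

```python
# Minimal sketch: break down "used" memory from /proc/meminfo.
# Assumption: procps-ng 3.3.x definition of "used" (see used_kb below).
from __future__ import annotations

from pathlib import Path

MEMINFO = Path("/proc/meminfo")

# kB-valued fields worth checking when "used" is high but processes look small.
FIELDS = ("MemTotal", "MemFree", "MemAvailable", "Buffers", "Cached",
          "AnonPages", "Shmem", "Slab", "SReclaimable", "SUnreclaim",
          "KernelStack", "PageTables", "VmallocUsed", "Percpu", "Hugetlb")

def parse_meminfo(text: str) -> dict[str, int]:
    """Return {field: number}; most values are kB, HugePages_* are counts."""
    info: dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            info[key.strip()] = int(parts[0])
    return info

def used_kb(info: dict[str, int]) -> int:
    """'used' as procps-ng 3.3.x free computes it (cache includes SReclaimable)."""
    cache = info["Cached"] + info.get("SReclaimable", 0)
    return info["MemTotal"] - info["MemFree"] - info["Buffers"] - cache

def report(info: dict[str, int]) -> None:
    """Print each known field and the derived 'used' value in MiB."""
    for name in FIELDS:
        if name in info:
            print(f"{name:>14}: {info[name] / 1024:10.1f} MiB")
    print(f"{'used (free)':>14}: {used_kb(info) / 1024:10.1f} MiB")

if __name__ == "__main__":
    if MEMINFO.exists():  # Linux only
        report(parse_meminfo(MEMINFO.read_text()))
```

Whichever field is close to the missing ~28 GiB (for example a very large `SUnreclaim`) points to where the memory is being held.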