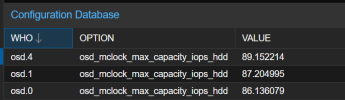

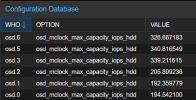

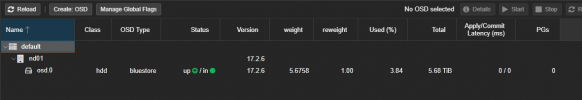

I have been configuring and testing Ceph. When I first added OSDs, their measured performance values were written to the configuration database automatically. At some point this stopped happening. Is it still supposed to work, or did an update disable this behavior?

Which benchmark is run by default when an OSD is added?

Please also explain how critical it is for this data to be present in the configuration database. Is it possible to operate a Ceph cluster without these values?

Running the benchmark manually works:

Code:

    root@nd01:~# ceph tell osd.0 cache drop
    root@nd01:~# ceph tell osd.0 bench 12288000 4096 4194304 100
    {
        "bytes_written": 12288000,
        "blocksize": 4096,
        "elapsed_sec": 2.2298931299999998,
        "bytes_per_sec": 5510577.9889998594,
        "iops": 1345.3559543456688
    }
    root@nd01:~# ceph tell osd.0 cache drop
    root@nd01:~# ceph tell osd.0 bench
    {
        "bytes_written": 1073741824,
        "blocksize": 4194304,
        "elapsed_sec": 4.1815870310000003,
        "bytes_per_sec": 256778542.70157841,
        "iops": 61.220775294680216
    }
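As a sanity check on the two results above, the reported `iops` figure appears to be the number of blocks written divided by the elapsed time, i.e. `(bytes_written / blocksize) / elapsed_sec`. The sketch below recomputes it from the pasted numbers only. The helper name `calc_iops` is made up for illustration and is not part of Ceph.

```shell
#!/bin/sh
# Recompute iops from the fields of the bench output shown above.
# calc_iops is a hypothetical helper, not a Ceph command.
calc_iops() {
    # $1 = bytes_written, $2 = blocksize, $3 = elapsed_sec
    LC_ALL=C awk -v b="$1" -v s="$2" -v t="$3" \
        'BEGIN { printf "%.2f\n", (b / s) / t }'
}

# 4 KiB block run: 12288000 bytes in 2.2298931299999998 s
small=$(calc_iops 12288000 4096 2.2298931299999998)

# Default run (4 MiB blocks): 1073741824 bytes in 4.1815870310000003 s
large=$(calc_iops 1073741824 4194304 4.1815870310000003)

echo "4 KiB run: $small iops"
echo "4 MiB run: $large iops"
```

Both results agree with the `iops` values in the output once rounded to two decimals, so the manual benchmark itself looks consistent.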
