Hello All,

I've been getting the infamous BlueStore warning about slow reads and slow ops, but only from these two OSDs (my NEWEST SSDs).

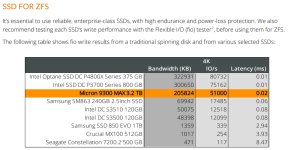

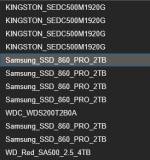

OSD 7 and OSD 2 are 4TB WD Red NAS SATA SSDs. The rest are a mix of Kingston DC SATA SSDs and Samsung 860 Pros @ 2TB.

This is causing IO delays.

Yes, before I get all the hate, it's a 2:1 SSD pool. Yes... two servers with IDENTICAL OSDs (model, size and number), exactly the same on each server.

Yes there is enough RAM (384GB each)

Yes there is enough CPU (Intel(R) Xeon(R) Silver 4210R CPUx2) - 2.85% usage

These are on a 10Gbit DAC connected SAN on a Unifi Agg 10Gbit switch. It's nowhere near network capacity. There are three servers (extra for Q + processing for services), all connected with Intel NICs, jumbo frames (recent troubleshooting) and full logging to Graylog. Nothing is dropping, no errors, it's clean.

Virtual Environment 8.4.8

CEPH: 18.2.7

Now, I realize that they hold twice the PGs the other SSDs do, which naturally means more reads and writes. But I'm not seeing activity that would suggest it's anywhere NEAR the maximum of even a single SATA SSD.
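For what it's worth, the 2x PG count is about what I'd expect if the CRUSH weights simply track capacity (4TB vs 2TB). A quick sketch of that arithmetic, assuming default capacity-proportional weights; the PG count used for the 2TB disks is a made-up placeholder, not a number from my cluster:

```python
def expected_pgs(size_tb: float, ref_size_tb: float, ref_pgs: float) -> float:
    """Scale a reference OSD's PG count by relative capacity.

    Assumes CRUSH weight is proportional to disk size (the default),
    so PG share scales linearly with capacity.
    """
    return ref_pgs * size_tb / ref_size_tb


# Hypothetical: suppose each 2TB OSD holds 64 PGs.
ref_pgs = 64
print(expected_pgs(4, 2, ref_pgs))  # 128.0, i.e. twice the PGs on a 4TB OSD
```

So the PG imbalance itself looks normal; what I can't explain is why that load is enough to hurt these drives.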

Short of throwing these expensive ($400 CAD each) things out, is there any other reason why these would be completely beaten down by Ceph?

I've already adjusted mClock and tried several tuning guides. Just these two disks are causing so much IO delay.

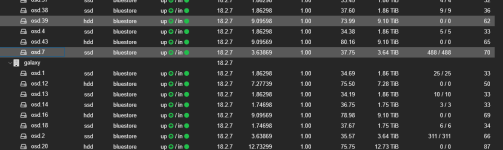

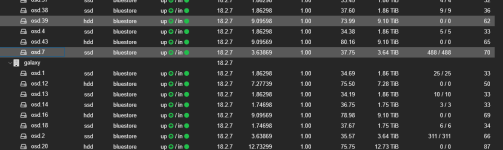

Current ops:

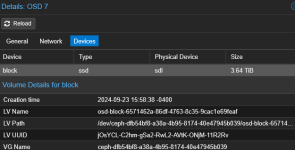

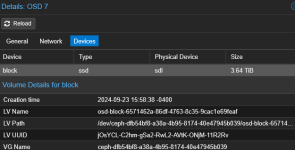

OSD Details:

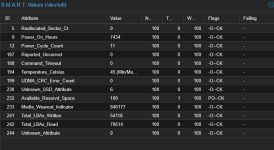

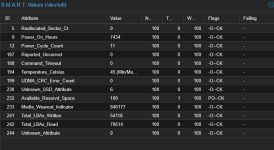

SMART Data
