Not sure where to begin...

I have a Dell XPS 8930 (i7, 64GB RAM).

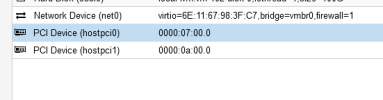

I added a QNAP TL-D800S JBOD with a QXP800eS-A1164 PCIe card.

I set up a 3x8TB ZFS pool (all 3 drives are brand new), mounted as /storage, on the QNAP directly from the Proxmox host (not in a VM or LXC).

This is extra storage; I don't boot off of this pool or anything like that.

I set up vsftpd on Proxmox and FTP'd about 5TB of media files over to /storage (actually into a dataset called /storage/media). I set up Plex in an LXC and used bind mounts from Proxmox to the Plex LXC. Everything works fine: Plex works, files are fine, rainbows and unicorns.

Now that I've got the beginning of something working, I decided to start doing backups.

I have two LXCs and one VM.

When I back up the LXCs, no problem.

When I back up the VM, my ZFS pool gets corrupted and suspended (every time) with write or checksum errors. The VM isn't running.

What's weird, though, is that I'm not backing up to the local zpool (/storage); I'm backing up to an NFS share from my Synology.

The backup always completes successfully, but my zpool gets corrupted every time. This happens only when backing up the one VM, never when backing up an LXC.

I have to reboot the machine to recover, but every time it reboots, the system comes up fine, the zpool is fine, and everything works.

What could explain my zpool getting messed up when I'm not writing to it? More importantly, how do I fix it?

Happy to provide logs, I'm just not sure which ones would help.
